Authors: Alexey Perminov, Tatiana Khanova, Grigory Serebryakov

In previous posts, we explored how a Pytorch model may be converted and run on OpenVINO as well as what deep learning model optimization tools are available within the OpenVINO toolkit.

Today let’s take a look at how TensorFlow trained model can be used and deployed to run with OpenVINO.

Overview

- Setting up the environment

- Preparing the TensorFlow model

- Converting the model to Intermediate Representation format

- Running model inference in OpenVINO

- Conclusions

Setting up the environment

First of all, we need to prepare a python environment: Python 3.5 or higher (according to the system requirements) and virtualenv is what we need:

python3 -m venv ~/venv/tf_openvino source ~/venv/tf_openvino/bin/activate

Let’s then install the desired packages:

pip3 install --upgrade pip setuptools pip3 install -r requirements.txt

Here requirements.txt contains the following packages:

numpy tqdm tensorflow-cpu==1.15 argparse scipy imageio moviepy

The second thing we need is to install the latest version of the OpenVINO toolkit using official installation instructions, (Linux in my case). Remember to set the required environment variables:

source /opt/intel/openvino/bin/setupvars.sh

It may be useful to add this action to the shell initialization script or virtual environment activation script so that it is triggered by default.

Alternatively, here you can find a Google Colab notebook representing environment setup and the main reproduction steps from the article.

Preparing the TensorFlow model

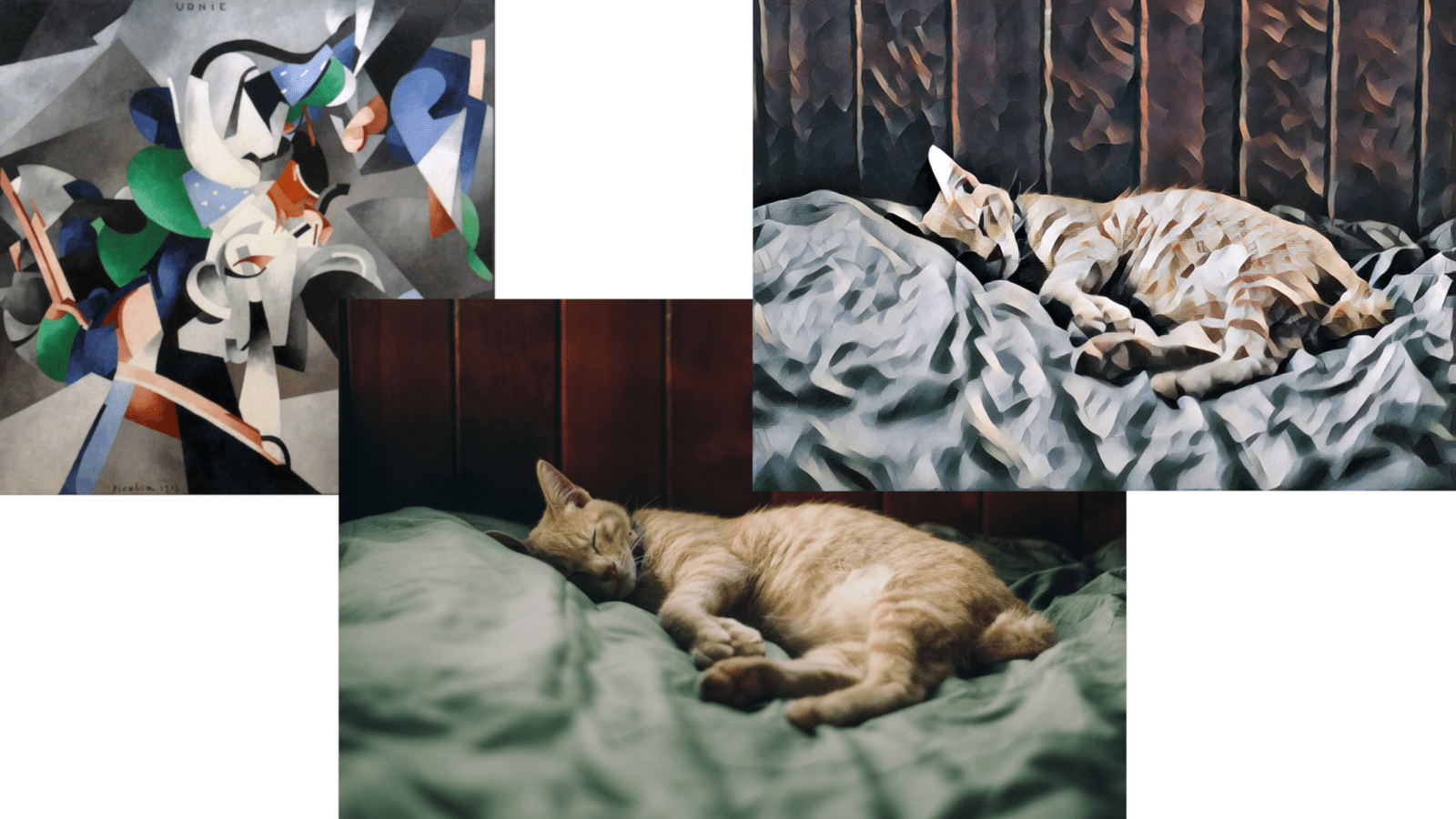

Ever wished a famous artist would paint a picture of your beloved kitty? With the evolution of the tools in deep neural networks – you’re good. The Neural Style Transfer algorithm initially proposed in A Neural Algorithm of Artistic Style can make your wish come true. The idea is to extract the image content and separate it from the used representation (style) with the help of a neural network. Then we will use the extracted style and combine it with arbitrary images to get impressive results with artificial images made from the original image’s content and desired artistic style.

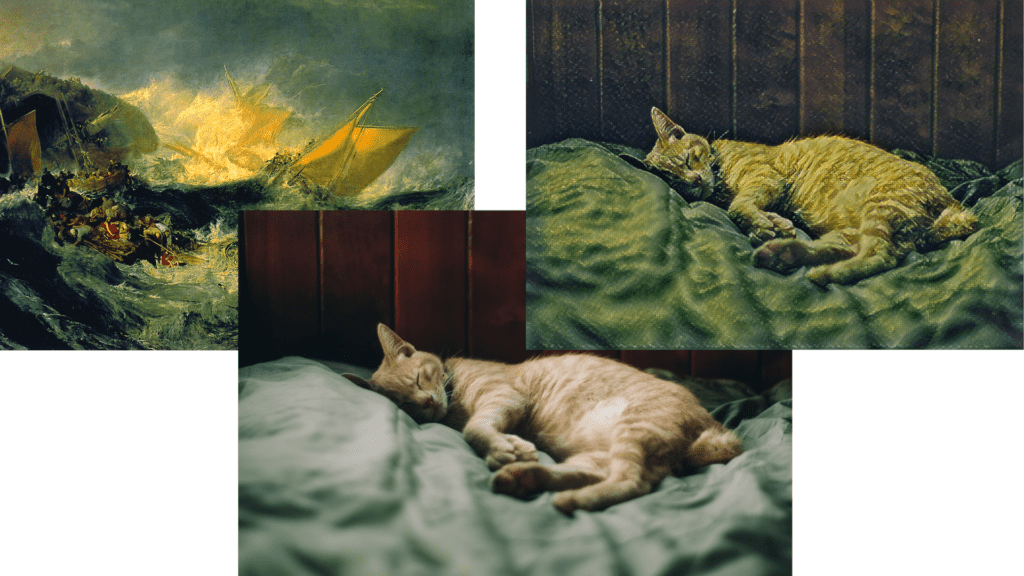

Image style transfer example

In the example above you can see how the artistic style of J. M. W. Turner’s “The Shipwreck of the Minotaur” painting is transferred to a reference kitty image (by Dương Nhân). To perform such a trick we need a trained TensorFlow model. We could prepare and train a model from scratch but let’s take a pre-trained one and use the Fast Style Transfer model from this repo. It fits pretty well with our purpose.

The referenced repository provides several model checkpoints, each is trained on different artistic style images and can be downloaded here. Typically, TensorFlow checkpoints contain model weights and computational graph metadata used during training. To deploy a model into production and use it for inference we need to fetch the frozen graph. Let’s clone the repo and use a simple python script to do that:

def main(checkpoint_path, input_shape, out_graph_name):

# Init graph and session to be used

g = tf.Graph()

soft_config = tf.compat.v1.ConfigProto(allow_soft_placement=True)

with g.as_default(), g.device('/cpu'), tf.compat.v1.Session(config=soft_config) as sess:

# Placeholder variable for graph input

img_placeholder = tf.compat.v1.placeholder(tf.float32, shape=input_shape, name='img_placeholder')

# The model from the repo

transform.net(img_placeholder)

# Restore model from checkpoint

saver = tf.compat.v1.train.Saver()

saver.restore(sess, checkpoint_path)

# Freeze graph from the session.

# "add_37" is the actual last operation of graph

frozen = tf.compat.v1.graph_util.convert_variables_to_constants(sess, sess.graph_def, ["add_37"])

# Write frozen graph to a file

graph_io.write_graph(frozen, './', out_graph_name, as_text=False)

print(f'Frozen graph {out_graph_name} is saved!')

The full code of the script may be downloaded here.

Just put the script in the root folder of the model repo and execute it with the following parameters:

python get_frozen_graph.py --checkpoint models/wreck.ckpt

In the code snippet above, we perform the required TensorFlow graph pre-initializations and use tf.Saver() to restore the model data from the checkpoint. Then we write the converted frozen graph to a file.

Note that frozen graph conversion needs the name of the output graph operation, that is “add_37” in our case. For the arbitrary model, you may find the output operation by simply printing the network nodes or exploring the model in a tool like Netron.

Converting the model to Intermediate Representation format

Ok, now we have the frozen graph, what’s next? As for the PyTorch model, to run inference in OpenVINO Inference Engine, we have to convert the model to Intermediate Representation (IR) format. Fortunately, OpenVINO Model Optimizer has built-in support for TensorFlow model conversion. You can check the currently supported TensorFlow operation set on this OpenVINO page.

Before we start, we should configure the Model Optimizer (if you skipped this step during the OpenVINO installation). You can find the configuration instructions here.

Since our prepared frozen graph already contains all the required information about the model like the desired input shape and output node name we can run Model Optimizer with the default parameters:

mo_tf.py --input_model inference_graph.pb

In case of a successful conversion, we will get `inference_graph.xml` containing the resulting IR model description and `inference_graph.bin` containing the model weights data. If the conversion isn’t successful, it is good to start from checking supported layers of the target framework, TensorFlow in our case. Probably it may require to slightly change the model architecture or to implement your custom layer for the OpenVINO.

Running model inference in OpenVINO

Let’s prepare a simple python script with OpenVINO Inference Engine initialization, IR model loading, and inference on provided images. The full version of this script is also available here.

First of all, we import the required packages and define a function for argument parsing.

import os

import cv2

import argparse

import time

import numpy as np

from openvino.inference_engine import IECore

from tqdm import tqdm

IMG_EXT = ('.png', '.jpg', '.jpeg', '.JPG', '.JPEG')

def parse_args():

"""Parses arguments."""

parser = argparse.ArgumentParser(description='OpenVINO inference script')

parser.add_argument('-i', '--input', type=str, default='',

help='Directory to load input images, path to a video or '

'skip to get stream from the camera (default).')

parser.add_argument('-m', '--model', type=str, default='./models/inference_graph.xml',

help='Path to IR model')

return parser.parse_args()

The next function is for OpenVINO inference initialization and Intermediate Representation model loading. We get paths to .xml and .bin model files, read them, and load the model into the Inference Engine. After that, we can use it for inference.

def load_to_IE(model):

# Getting the *.bin file location

model_bin = model[:-3] + "bin"

# Loading the Inference Engine API

ie = IECore()

# Loading IR files

net = ie.read_network(model=model, weights=model_bin)

input_shape = net.inputs["img_placeholder"].shape

# Loading the network to the inference engine

exec_net = ie.load_network(network=net, device_name="CPU")

print("IR successfully loaded into Inference Engine.")

return exec_net, input_shape

OpenVINO allows us to use two kinds of inference requests: synchronous – every next request is executed only when the previous one is finished, and asynchronous – several requests are executed simultaneously by several executors. In this example we use synchronous inference requests interface:

def sync_inference(exec_net, image):

input_blob = next(iter(exec_net.inputs))

return exec_net.infer({input_blob: image})

In the main function we first prepare the list of images that will be used for style transfer and then initialize Inference Engine:

def main(args):

if os.path.isdir(args.input):

# Create a list of test images

image_filenames = [os.path.join(args.input, f) for f in os.listdir(args.input) if

os.path.isfile(os.path.join(args.input, f)) and f.endswith(IMG_EXT)]

image_filenames.sort()

else:

image_filenames = [args.input]

exec_net, net_input_shape = load_to_IE(args.model)

# We need dynamically generated key for fetching output tensor

output_key = list(exec_net.outputs.keys())[0]

For each input image, we resize it to the network’s input size and convert it to the required tensor format with cv2.dnn.blobFromImage. Then, the most interesting part – inference request, followed by simple post-processing.

for image_num in tqdm(range(len(image_filenames))):

image = cv2.imread(image_filenames[image_num])

image = cv2.resize(image, (net_input_shape[3], net_input_shape[2]))

X = cv2.dnn.blobFromImage(image, swapRB=True)

out = sync_inference(exec_net, image=X)

result_image = np.squeeze(np.clip(out[output_key], 0, 255).astype(np.uint8), axis=0).transpose((1, 2, 0))

Finally, we show the resulting image.

cv2.imshow("Out", cv2.cvtColor(result_image, cv2.COLOR_RGB2BGR))

cv2.waitKey(0)

cv2.destroyWindow("Out")

if __name__ == "__main__":

main(parse_args())

Let’s run the script specifying input directory with images to apply for style transfer and converted IR model:

python openvino_inference.py -i ./images/ -m ./models/inference_graph.xml

The resulting images with transferred style will be shown in a pop-up window.

Performance comparison

As a final step let’s compare inference time in both original TensorFlow and converted to OpenVINO inference pipelines. For that purpose, I added a timestamp measuring around inference calls: session run call in case of Tensorflow and synch inference request in case of OpenVINO. Averaged over 100 images with the same size (720x1024x3), performance results per image are pretty similar (in seconds, CPU: Intel® Core™ i5-8265U CPU @ 1.60GHz x 8):

OpenVINO_CPU Inference time: Mean: 1.554 Min: 1.444 Max: 1.979 TensorFlow_Cpu Inference time: Mean: 1.933 Min: 1.827 Max: 2.080

Conclusions

In this short article, we looked at how the Tensorflow model may be easily converted and run in the OpenVINO Inference Engine environment. Moreover, we took a look at the Neural Style Transfer algorithm application and tried it on arbitrary images. We’ve shown that OpenVINO provides a considerable speed up and allows us to run even the most demanding DL algorithms on a pretty average hardware. We hope it was an interesting and useful journey.

________________________________________

Get the Intel® Distribution of OpenVINO™ toolkit

Contribute – If you have any ideas in ways we can improve the product, we welcome contributions to the open-sourced OpenVINO™ toolkit.

Want to learn more? Join the conversation to discuss all things Deep Learning and OpenVINO™ toolkit in Intel’s community forum.

Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries.

Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex.

________________________________________

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Testing date: December 23, 2020

Complete system configuration details: Ubuntu 18.04, Intel® Core™ i5-8265U CPU @ 1.60GHz x 8

Setup details: OpenVINO™ toolkit version 2020.4

Who did testing: Alexey Perminov, OpenCV.AI

Intel technologies may require enabled hardware, software or service activation.

________________________________________

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries.