Introduction

Deep learning tools are extensively used in vision applications across most industries, ranging from facial recognition on mobile devices to Tesla’s self-driving cars. Building these applications requires in-depth knowledge and specialization, so choosing the right tools is paramount.

After reading this article, you’ll understand what deep learning tools are and why they are used, and you’ll have explored the most common ones you can use in your projects and applications.

What are deep learning tools?

Generally, deep learning models are trained on hundreds or even thousands of images, videos, or other digital media. However, this data can be voluminous and unstructured. To organize this complex data in a structured manner, we use deep learning tools.

These tools play a key role in analyzing and processing visual data and deriving meaningful information from it. At their core, these deep learning tools use complex algorithms from machine learning, artificial intelligence, pattern recognition, and digital signal processing. Advancements in computing power, optimizations in algorithms and neural network architectures, and the availability of large datasets have driven up the demand for deep learning tools.

Must-Know Deep Learning Tools

There are several tools available for working with images and videos in computer vision. In this section, we’ll discuss some of the most common deep learning tools. They vary in complexity and area of application: a tool can be a straightforward library that performs a basic image processing operation or a more advanced system that identifies objects, understands scenes, or recognizes faces.

TensorFlow

TensorFlow is an open-source library for numerical computations, statistical and predictive analysis, and large-scale deep learning. It was released in 2015 by Google under the Apache 2.0 license. Its predecessor was DistBelief, a closed-source Google framework that offered a testbed for deep learning implementations. Some of Google’s applications and online services were powered by TensorFlow and its first TPU (Tensor Processing Unit). As of writing this, TensorFlow 2.15 is the most recent version, which was released in November 2023.

It is one of the most popular frameworks for deep learning projects. Unlike other numerical libraries used for deep learning, TensorFlow was designed for both research and production.

With TensorFlow, developers can create dataflow graphs. These structures describe how data moves through a graph, or series of nodes. Each node represents a mathematical operation, and each connection between two nodes carries a multidimensional data array, or tensor. A tensor can be defined as a container used for storing, representing, or changing data.
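
To make the idea of a dataflow graph concrete, here is a minimal pure-Python sketch. It does not use TensorFlow and is not how TensorFlow is implemented; the names `Node`, `run`, `graph`, and `feeds` are our own, chosen only for illustration. Each node holds an operation, and the named inputs play the role of the edges that carry tensors.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Tensor = List[List[float]]  # a 2-D tensor stored as nested lists


@dataclass
class Node:
    """One operation in the graph; `inputs` names the edges feeding it."""
    op: Callable[..., Tensor]
    inputs: Tuple[str, ...]


def matmul(a: Tensor, b: Tensor) -> Tensor:
    # Dot product of every row of `a` with every column of `b`.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a: Tensor, b: Tensor) -> Tensor:
    # Element-wise addition of two tensors with the same shape.
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def run(graph: Dict[str, Node], feeds: Dict[str, Tensor], target: str) -> Tensor:
    """Evaluate `target` by first evaluating the nodes it depends on."""
    if target in feeds:
        return feeds[target]
    node = graph[target]
    args = [run(graph, feeds, name) for name in node.inputs]
    return node.op(*args)


# y = x @ w + b, described as a graph before any data flows through it.
graph = {
    "xw": Node(matmul, ("x", "w")),
    "y": Node(add, ("xw", "b")),
}
feeds = {
    "x": [[1.0, 2.0]],
    "w": [[3.0], [4.0]],
    "b": [[0.5]],
}
result = run(graph, feeds, "y")
print(result)
```

The graph is only a description; nothing is computed until `run` pushes the input tensors through it.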

A little side note: Since tensors form an integral part of the framework, Google’s framework is called TensorFlow.

TensorFlow can train and run deep neural networks for tasks like image recognition, word embeddings, handwritten digit classification, image segmentation, object detection, and many more. Although TensorFlow uses Python for its front-end API to build applications, much like OpenCV, we can use the framework from other languages like C++ or Java. So, one can train and deploy deep learning models quickly, regardless of the language or platform they use.

TensorFlow includes both high-level and low-level APIs. Google recommends the low-level APIs for debugging applications and the high-level APIs for simplifying data pipeline development and application programming.

TensorFlow holds the highest share of the Data Science and Machine Learning market, standing at 37.28%, followed by OpenCV. With close to 22,000 brands leveraging TensorFlow, we can say it is one of the most commonly used deep learning tools, and mastering it is paramount to staying relevant in the realm of computer vision.

PyTorch

PyTorch is yet another big name in the realm of deep learning frameworks. It is an open-source deep learning library for developing and training neural network-based models. Its predecessor, Torch, was launched back in 2002 by a few individuals and was one of the earliest frameworks. Facebook’s Research Lab later picked it up and launched PyTorch in 2016. It was developed primarily to train and implement deep learning models more efficiently. PyTorch merged with Caffe2, another deep learning framework, in 2018.

Developed in C++ with a Python API, PyTorch is intuitive to understand, so developers may feel more comfortable with it than with other deep learning frameworks. Thanks to its deep integration with Python, we can also use standard Python debugging tools. PyTorch’s documentation is also well organized and handy for novices. All of this makes it apt for academic and research purposes.

Unlike counterparts such as TensorFlow, which has traditionally relied on static computation graphs, PyTorch builds its computation graph dynamically, allowing greater flexibility in building complex architectures. This means the graph can be changed at runtime, and the gradient calculations change along with it. It uses reverse-mode automatic differentiation. Simply put, it works like a tape recorder that records all the operations and then replays them backward to compute gradients. This makes it easy to debug and adapt to specific applications, which is why it is popular for prototyping.

PyTorch is up there with TensorFlow and OpenCV, taking the third spot with 21.39% of the total Data Science and Machine Learning market. With nearly 13,000 brands leveraging PyTorch, it is another important deep learning tool one must have in their arsenal.
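
The tape-recorder picture of reverse-mode automatic differentiation can be sketched in a few lines of plain Python. This is a toy for scalars only, not PyTorch's actual autograd engine; the `Var` and `Tape` classes are hypothetical names we made up for the illustration.

```python
from typing import Callable, List


class Var:
    """A scalar value together with the gradient accumulated for it."""

    def __init__(self, value: float) -> None:
        self.value = value
        self.grad = 0.0


class Tape:
    """Records one backward step per operation, in execution order."""

    def __init__(self) -> None:
        self.steps: List[Callable[[], None]] = []

    def mul(self, a: Var, b: Var) -> Var:
        out = Var(a.value * b.value)

        def backward() -> None:
            # d(a*b)/da = b and d(a*b)/db = a (chain rule with out.grad)
            a.grad += b.value * out.grad
            b.grad += a.value * out.grad

        self.steps.append(backward)
        return out

    def add(self, a: Var, b: Var) -> Var:
        out = Var(a.value + b.value)

        def backward() -> None:
            # Addition passes the incoming gradient through unchanged.
            a.grad += out.grad
            b.grad += out.grad

        self.steps.append(backward)
        return out

    def backprop(self, output: Var) -> None:
        # Seed the output gradient, then replay the tape backward.
        output.grad = 1.0
        for step in reversed(self.steps):
            step()


tape = Tape()
x, y = Var(3.0), Var(4.0)
# The "graph" is recorded while ordinary Python code runs.
z = tape.add(tape.mul(x, y), x)  # z = x*y + x
tape.backprop(z)
print(z.value, x.grad, y.grad)
```

Because the tape is filled in as the code executes, any Python control flow (loops, conditionals) simply changes what gets recorded, which is the flexibility the dynamic approach is known for.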

OpenCV

Next on the list of deep learning tools is OpenCV, one of the biggest open-source computer vision libraries. Officially launched in 1999, OpenCV began as an Intel Research initiative to advance CPU-intensive applications. Some of its primary goals include:

- Offering a common infrastructure for developers to build on, with more readable and transferable code

- Providing not only open but also optimized code for basic vision infrastructure

- Offering free performance-optimized code for advanced vision-based commercial applications

OpenCV was originally written in C++, which remains its primary interface. Wrapper libraries are available in various languages to encourage adoption by a broader audience, the most common being the Python wrapper, better known as OpenCV-Python.

It has over 2,500 optimized algorithms, including classic and state-of-the-art computer vision and machine learning algorithms. These algorithms can be used for a wide range of tasks like object recognition & detection, face detection, or tracking camera movements. Tech giants like Google, Microsoft, Intel, and Yahoo use the OpenCV library extensively.

It supports Windows, Linux, macOS, and Android and offers interfaces for C++, Python, Java, and MATLAB.

With over 13,000 brands leveraging OpenCV as a data science and machine learning tool and over 18 million downloads, it is one of the most commonly used deep learning tools, standing at 21.68% market share in the data science and machine learning market.

CUDA

The Compute Unified Device Architecture, or CUDA, is a parallel computing platform and programming model for writing code that runs in parallel on NVIDIA GPUs (Graphics Processing Units). Its programming interface is based on C/C++, and with it we can write and execute general-purpose code on the GPU. NVIDIA launched CUDA in 2006 to open up the parallel computing engine already present in its GPUs. For workloads that can be split into many independent calculations, GPUs handle intricate computational challenges more efficiently than CPUs. This is because a GPU has far more ALUs (Arithmetic Logic Units) than a CPU, each of them smaller and simpler, enabling it to perform many calculations simultaneously.

In addition, CUDA is available in C, C++, and Fortran so that developers can implement parallel programming much more easily. All we need to do is add a few basic keywords to these languages to access the GPU’s virtual instruction set and parallel computational elements.
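
To show what thinking in parallel threads looks like, the sketch below emulates in plain Python the indexing pattern a CUDA kernel commonly uses. It is not CUDA code and runs the "threads" one after another on the CPU; the parameters `block_idx`, `block_dim`, and `thread_idx` are our stand-ins for CUDA's built-in `blockIdx`, `blockDim`, and `threadIdx`, and `launch` is a hypothetical helper.

```python
from typing import List


def vector_add_kernel(block_idx: int, block_dim: int, thread_idx: int,
                      a: List[float], b: List[float], out: List[float]) -> None:
    """Work done by ONE thread: compute a single output element."""
    i = block_idx * block_dim + thread_idx  # global index of this thread
    if i < len(out):                        # guard threads past the end of the data
        out[i] = a[i] + b[i]


def launch(grid_dim: int, block_dim: int,
           a: List[float], b: List[float], out: List[float]) -> None:
    # A GPU would run these threads in parallel; this loop runs them one by one.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out)


n = 10
a = [float(i) for i in range(n)]
b = [10.0 * i for i in range(n)]
out = [0.0] * n

block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # enough blocks to cover n elements
launch(grid_dim, block_dim, a, b, out)
print(out)
```

The rounding-up in `grid_dim` launches slightly more threads than there are elements, which is why the kernel needs the bounds check.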

CUDA traces its roots to 2003, when a group of Stanford researchers developed a general-purpose programming platform for GPUs. NVIDIA funded the work at the time, and the lead researcher subsequently moved to NVIDIA to develop CUDA as a commercial GPU-based parallel computing project.

But what’s the need for CUDA in deep learning?

As we know, GPUs are among the most important pieces of hardware when it comes to training and building deep learning models. GPUs are designed for high-speed parallel computations. And to put these fast computations into action, NVIDIA GPUs rely on CUDA.

CUDA is free, easy to use, and available for operating systems like Windows and Linux. It also offers a wide range of parallel computing libraries and, on NVIDIA hardware, is often faster than alternatives like OpenCL.

With over 40 million downloads and over 4 million developers, CUDA is a go-to platform for GPU acceleration in computer vision and deep learning and a must-know deep learning tool for Computer Vision engineers.

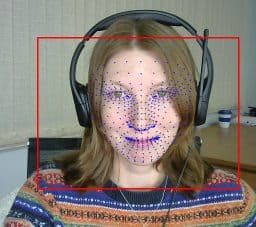

CVAT

The Computer Vision Annotation Tool, or CVAT, is a free, open-source platform for annotating images and videos for machine learning and deep learning projects. It supports different annotations like polygons, key points, and bounding boxes. Ideally, CVAT is deployed on cloud platforms for large projects, and it is installed locally for personal or small projects.
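
As a rough illustration of the three annotation types mentioned above, here is how they might be represented as data. This is a hypothetical in-memory layout of our own, not CVAT's storage or export format; the class and field names are assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in pixel coordinates


@dataclass
class BoundingBox:
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


@dataclass
class Polygon:
    label: str
    points: List[Point]

    def to_bounding_box(self) -> BoundingBox:
        # The tightest axis-aligned box that contains every vertex.
        xs = [x for x, _ in self.points]
        ys = [y for _, y in self.points]
        return BoundingBox(self.label, min(xs), min(ys), max(xs), max(ys))


@dataclass
class Keypoints:
    label: str
    points: List[Point]  # e.g. body joints or facial landmarks


car_outline = Polygon("car", [(10.0, 20.0), (50.0, 15.0), (60.0, 40.0), (12.0, 45.0)])
car_box = car_outline.to_bounding_box()
print(car_box, car_box.area())
```

A polygon carries more detail than a box, so it can always be reduced to one, but not the other way around. That is one reason the choice of annotation type matters before labeling starts.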

Originally introduced by Intel in 2017, CVAT was developed for internal use as a better way to annotate thousands of images at scale. CVAT is now an independent brand based in California, United States.

Vision Engineers and Data Scientists rely heavily on large volumes of annotated data to train deep neural networks. But producing these annotations by hand can take thousands of hours.

CVAT makes this process far less time-consuming by offering automatic labeling and semi-automated image annotation.

Large businesses leverage CVAT for image annotation, often combining it with tools for DevOps, application development, or operations.

Using CVAT is as simple as:

- uploading the images or videos to the platform

- picking the image or video we wish to annotate

- choosing the tool we wish to use, say keypoints

- applying the annotations precisely to the object of interest

- saving the annotation and repeating for the remaining data

Interested in exploring CVAT? Check out the full CVAT video series on YouTube.

CVAT is a secure, well-maintained data annotation tool with frequent updates and active community support. It is a powerful and versatile annotation tool for image annotations, offering various annotation types and flexibility. It is a good deep learning tool for one to have in their arsenal to streamline AI data labeling projects or optimize image annotation.

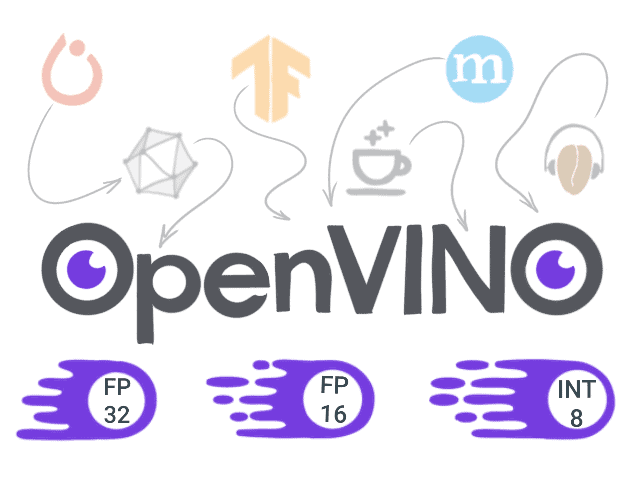

OpenVINO

OpenVINO, developed by Intel in 2018, is an open-source toolkit designed to optimize neural network inference, accelerating the deployment of deep learning applications across Intel hardware, such as CPUs and GPUs. It supports a wide range of deep learning models out of the box and offers functionalities for applications that use Computer Vision, Natural Language Processing (NLP), or Speech Recognition. By leveraging optimization techniques like fusion and freezing, OpenVINO makes models smaller and faster, enhancing AI workloads that include audio and recommendation systems.

This toolkit streamlines model optimization to ensure efficient execution, addressing the challenge of attaining high accuracy in computer vision algorithms, which requires adapting both hardware and computational methods. OpenVINO’s library of predefined functions and pre-optimized kernels, along with a streamlined intermediate representation, accelerates AI workloads and time to market by efficiently distributing workloads across different processors and accelerators.

Developers can deploy pre-trained deep learning models using a high-level C++ Inference Engine API integrated with application logic, allowing for seamless customization and extension of AI workloads to the cloud. OpenVINO also facilitates the customization of deep learning model layers and the parallel programming of different accelerators without adding framework overheads through tools like OpenCL kernels for custom code integration directly into the workload pipeline.

With the Deep Learning Deployment Toolkit, OpenVINO not only runs deep learning models outside of computer vision but also imports and optimizes models from different frameworks, implementing vision inference on diverse hardware. This comprehensive approach ensures accelerated performance and a streamlined pathway for developers to bring their AI-driven applications to market more efficiently.

OpenVINO offers a powerful toolkit to optimize and accelerate deep learning models across multiple hardware platforms, helping developers deploy AI applications faster and more efficiently. In addition to supporting a wide range of AI workloads, it is also customizable, making it an effective solution for advancing artificial intelligence.

TensorRT

TensorRT is an SDK for running high-performance deep learning inference on NVIDIA GPUs. Developed by NVIDIA, it is built on the CUDA parallel programming model and can offer about 5 times faster inference than unoptimized baseline models.

TensorRT runs inference with models that have already been trained in a deep learning framework. Its inference engine is responsible for two stages: compilation and runtime.

Compilation refers to the process of optimizing and converting a model into a TensorRT engine. This is done through processes like model parsing, layer and tensor fusion, and precision calibration.

Runtime refers to executing the optimized TensorRT engine to perform inference. This includes loading the model, allocating GPU memory, executing fast predictions, and collecting the output.

TensorRT optimizes deep learning models through a series of complex processes. In the initial phase, TensorRT parses trained models from various frameworks, such as TensorFlow, PyTorch, and ONNX. Several key techniques are involved in optimizing the representation for use on GPUs.

Layer and Tensor Fusion

By combining operations and layers, TensorRT can reduce the need for memory access between operations, decreasing latency and increasing throughput.
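
The effect of fusion can be sketched without any GPU. In the plain-Python illustration below (our own example, not TensorRT code), three separate "layers" each write an intermediate list, while the fused version produces the same result in a single pass with no intermediates.

```python
from typing import List


def unfused(x: List[float], scale: float, bias: float) -> List[float]:
    # Three separate "layers": each one reads and writes a full intermediate list.
    scaled = [v * scale for v in x]
    shifted = [v + bias for v in scaled]
    return [max(0.0, v) for v in shifted]  # ReLU


def fused(x: List[float], scale: float, bias: float) -> List[float]:
    # One pass: scale, shift, and ReLU are applied while the value is still in hand.
    return [max(0.0, v * scale + bias) for v in x]


x = [-2.0, -0.5, 0.0, 1.5, 3.0]
unfused_out = unfused(x, scale=2.0, bias=1.0)
fused_out = fused(x, scale=2.0, bias=1.0)
print(fused_out)
```

On a GPU the intermediates would live in device memory, so skipping them saves memory traffic and kernel launches rather than just Python list allocations.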

Precision Calibration

TensorRT supports mixed-precision computing, which means that lower-precision arithmetic (FP16 or INT8) can be used for computations without significantly affecting the model’s accuracy. Precision calibration accomplishes this by carefully selecting the precision for each operation to minimize memory consumption and computational demands while maintaining overall model accuracy.
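
A minimal sketch of the idea behind INT8 calibration follows. It uses a simple symmetric max-abs scheme that we picked for clarity, which is an assumption on our part; TensorRT's own calibrators are more sophisticated, and none of the function names below come from its API.

```python
from typing import List


def calibrate_scale(calibration_data: List[float]) -> float:
    """Choose a scale so the largest observed magnitude maps to 127."""
    max_abs = max(abs(v) for v in calibration_data)
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize(values: List[float], scale: float) -> List[int]:
    # Round to the nearest step and clamp to the symmetric signed 8-bit range.
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize(q: List[int], scale: float) -> List[float]:
    return [v * scale for v in q]


# Made-up activation values standing in for a calibration dataset.
activations = [-1.27, -0.5, 0.0, 0.31, 1.0, 1.27]
scale = calibrate_scale(activations)
q = quantize(activations, scale)
restored = dequantize(q, scale)
max_error = max(abs(a - r) for a, r in zip(activations, restored))
print(q, max_error)
```

Each value now fits in one byte instead of four, and the round-trip error stays within half a quantization step for values inside the calibrated range.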

Kernel Auto-Tuning

With its Kernel Auto-Tuning feature, TensorRT benchmarks various kernel implementations and picks the fastest execution paths for the specific model architecture and target GPU hardware.

TensorRT can divide the workload among multiple GPUs or across multiple streams within a single GPU for applications that require multiple data streams to be processed simultaneously. As a result of this parallel execution capability, large-scale deployments can benefit from scalable performance improvements.
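
The benchmark-and-pick idea behind auto-tuning can be illustrated on the CPU. The sketch below is our own toy, not TensorRT's tuner: it times three interchangeable implementations of the same computation on a sample input and keeps whichever ran fastest on this machine. The function names are hypothetical.

```python
import time
from typing import Callable, Dict, List


def sum_squares_loop(xs: List[float]) -> float:
    total = 0.0
    for x in xs:
        total += x * x
    return total


def sum_squares_generator(xs: List[float]) -> float:
    return sum(x * x for x in xs)


def sum_squares_map(xs: List[float]) -> float:
    return sum(map(lambda x: x * x, xs))


def autotune(candidates: Dict[str, Callable[[List[float]], float]],
             sample: List[float], repeats: int = 5) -> str:
    """Time every candidate on the sample input and return the fastest one's name."""
    best_name, best_time = "", float("inf")
    for name, fn in candidates.items():
        start = time.perf_counter()
        for _ in range(repeats):
            fn(sample)
        elapsed = time.perf_counter() - start
        if elapsed < best_time:
            best_name, best_time = name, elapsed
    return best_name


candidates = {
    "loop": sum_squares_loop,
    "generator": sum_squares_generator,
    "map": sum_squares_map,
}
sample = [float(i) for i in range(10_000)]
chosen = autotune(candidates, sample)
result = candidates[chosen](sample)
print(chosen, result)
```

Which candidate wins depends on the interpreter and hardware, which is exactly why the choice is measured rather than hard-coded.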

TensorRT also offers comprehensive tools for analyzing and profiling model performance. Engineers can gain insights into execution time, memory usage, and throughput for each layer or operation within the model. This level of analysis is invaluable for identifying bottlenecks and further optimizing model performance.

Weights and Biases

Weights and Biases (W&B) is an MLOps developer tool that streamlines machine learning workflows from start to finish. It can be used across frameworks, environments, and workflows to help developers optimize, visualize, or standardize their models.

It offers a variety of features, including interactive data visualizations, hyperparameter optimization, and experiment tracking, as well as real-time CPU and GPU monitoring that visualizes datasets, logs, and process statistics as they are produced.

The platform is free to use for personal or academic purposes. Using a hosted notebook, one can run their first experiment in just 30 seconds.

It is widely used in the field of deep learning for several key purposes.

Experiment Tracking

Weights and Biases lets users log hyperparameters and output metrics so they can compare their models between runs. This helps determine which changes improved model performance and makes experiments easier to reproduce consistently.
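
To show what logging hyperparameters and metrics per run amounts to, here is a tiny stand-in tracker written in plain Python. It is not the W&B client and does not mirror its API; the `Run` class, `best_run` helper, and the loss values are all made up for the illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Run:
    """One training run: its hyperparameters plus the metrics logged per step."""
    name: str
    config: Dict[str, float]
    history: List[Dict[str, float]] = field(default_factory=list)

    def log(self, metrics: Dict[str, float]) -> None:
        self.history.append(dict(metrics))

    def best(self, metric: str) -> float:
        # Lowest value seen for a metric where lower is better (e.g. a loss).
        return min(step[metric] for step in self.history)


def best_run(runs: List[Run], metric: str) -> Run:
    return min(runs, key=lambda r: r.best(metric))


run_a = Run("lr-0.1", {"learning_rate": 0.1})
run_b = Run("lr-0.01", {"learning_rate": 0.01})

# Illustrative, made-up validation losses for three epochs of each run.
for loss in (0.9, 0.6, 0.55):
    run_a.log({"val_loss": loss})
for loss in (0.95, 0.5, 0.4):
    run_b.log({"val_loss": loss})

winner = best_run([run_a, run_b], "val_loss")
print(winner.name, winner.config, winner.best("val_loss"))
```

Keeping the configuration next to the metrics is what makes the comparison meaningful: the winning run tells us which settings to reproduce.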

Visualization

With the platform, you can visualize metrics like loss and accuracy curves over training epochs, which are crucial for diagnosing model performance. It also supports confusion matrices, ROC curves, and raw images for analyzing model outputs.

Data Versioning

This feature helps track which data version was used for training a particular model, ensuring reproducibility, and is important for machine learning workflows where the data changes over time.
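
One simple way to picture data versioning is a content fingerprint: if the dataset changes, the fingerprint changes. The sketch below is a generic illustration using Python's `hashlib`, not how W&B implements versioning; `dataset_version`, `model_registry`, and the file names are hypothetical.

```python
import hashlib
from typing import Dict, List, Tuple


def dataset_version(samples: List[Tuple[str, str]]) -> str:
    """Short fingerprint of (filename, label) pairs, independent of their order."""
    digest = hashlib.sha256()
    for filename, label in sorted(samples):
        digest.update(f"{filename}\t{label}\n".encode("utf-8"))
    return digest.hexdigest()[:12]


v1 = [("cat_001.png", "cat"), ("dog_001.png", "dog")]
v2 = v1 + [("dog_002.png", "dog")]  # the dataset grew over time

# Record which data version each model was trained on.
model_registry: Dict[str, str] = {
    "model-a": dataset_version(v1),
    "model-b": dataset_version(v2),
}
print(model_registry)
```

With the fingerprint stored next to each model, we can later tell whether two models were trained on the same data, even if the files have since been reshuffled.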

Model Saving and Sharing

W&B allows users to save their model checkpoints directly to the application. This allows team members from different locations to collaborate and share.

Hyperparameter Optimization

Hyperparameter optimization tools provided by W&B allow users to search for the best model configuration automatically, saving the time and resources that manual tuning would otherwise take.
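
An automated search can be as simple as trying every combination in a grid. The sketch below shows that strategy in plain Python. It is not W&B code, and `validation_loss` is a hypothetical stand-in formula we invented in place of actually training a model.

```python
import itertools
from typing import Dict, List, Tuple


def validation_loss(learning_rate: float, batch_size: int) -> float:
    """Hypothetical stand-in for training a model and measuring its loss."""
    return (learning_rate - 0.01) ** 2 * 1000 + abs(batch_size - 64) / 640


def grid_search(space: Dict[str, List]) -> Tuple[Dict, float]:
    """Evaluate every combination in the search space and keep the best one."""
    names = list(space)
    best_config: Dict = {}
    best_loss = float("inf")
    for values in itertools.product(*(space[n] for n in names)):
        config = dict(zip(names, values))
        loss = validation_loss(**config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss


space = {
    "learning_rate": [0.1, 0.01, 0.001],
    "batch_size": [32, 64, 128],
}
best_config, best_loss = grid_search(space)
print(best_config, best_loss)
```

Grid search is exhaustive, so its cost grows quickly with the number of parameters; random or model-guided search strategies trade completeness for fewer training runs.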

Integration with Deep Learning Frameworks

Weights and Biases allows developers to track their experiments with minimal changes to their existing codebase. It is framework agnostic and integrates with popular deep learning frameworks like TensorFlow, PyTorch, Keras, and more.

Collaboration and Reporting

The platform facilitates collaboration between team members by allowing them to share results and insights easily. It also lets them generate reports that can be shared with stakeholders.

Weights and Biases is a great deep learning tool to have in one’s arsenal, allowing developers to build and streamline deep learning workflows incrementally and boost overall productivity.

Conclusion

That’s a wrap of this fun read. We’ve explored what deep learning tools are, shedding light on the basics and checking out some of the most common tools used in the field, like OpenCV, the largest open-source computer vision library, and CVAT, an image annotation tool.

We’ve more fun blogs coming your way; stay tuned. See you guys in the next one!
