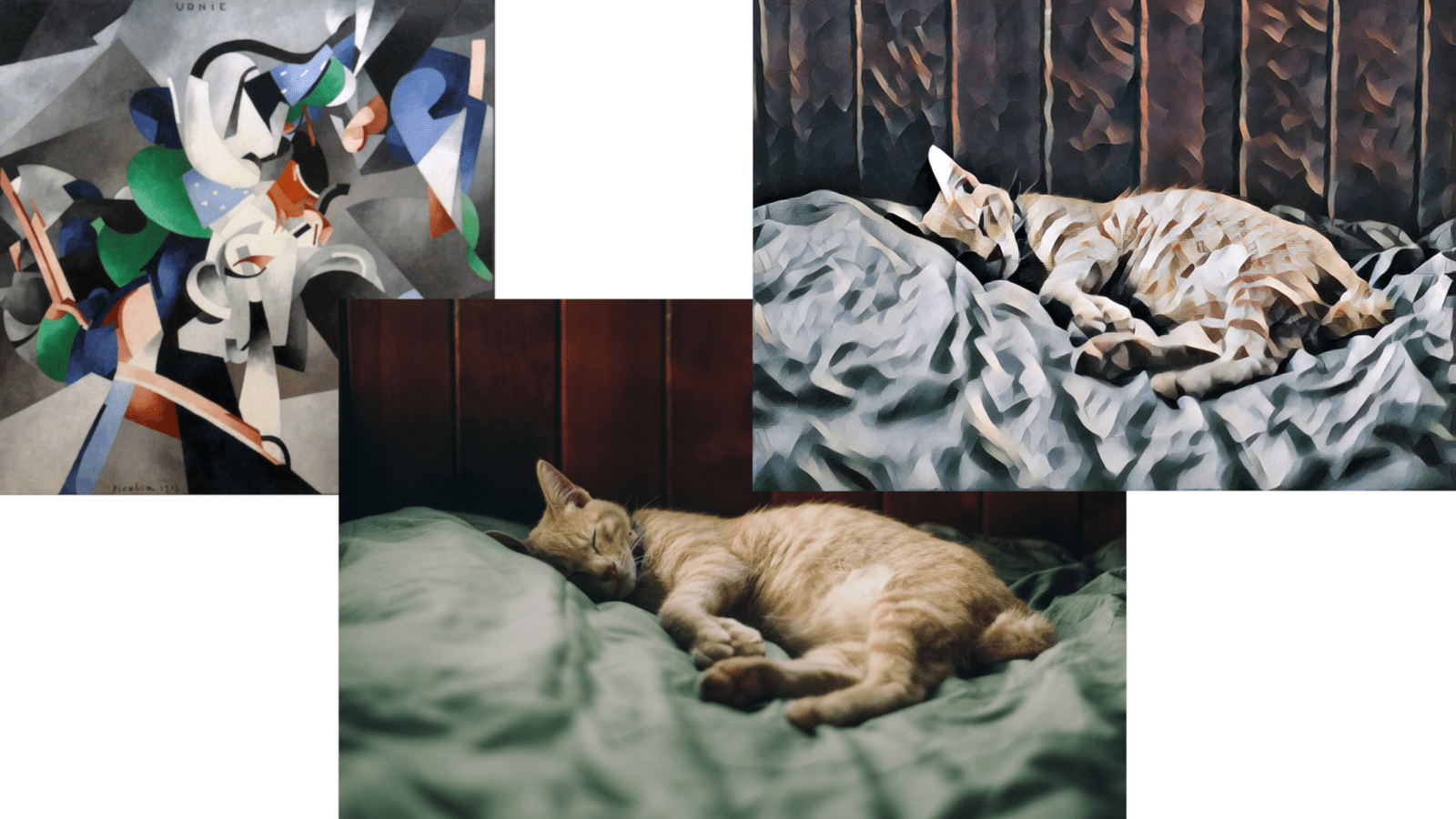

The COCO dataset supports tasks such as object detection, segmentation, and captioning, and it is widely used to train and evaluate state-of-the-art neural networks. Its versatility and varied, multi-purpose scenes make it well suited both for training a computer vision model and for benchmarking its performance.

In this post, we will dive deeper into COCO fundamentals, covering the following:

- What is COCO?

- COCO classes

- What is it used for and what can you do with COCO?

- Dataset formats

- Key points

What is COCO?

Common Objects in Context (COCO) is one of the most popular large-scale labeled image datasets available for public use. It covers objects we encounter on a daily basis and contains image annotations in 80 categories, with over 1.5 million object instances. You can explore the COCO dataset by visiting SuperAnnotate’s respective dataset section.

Modern AI-driven solutions still cannot deliver perfectly accurate results, which is why the COCO dataset serves as a major benchmark for computer vision (CV): it is used to train, test, polish, and refine models, and to scale annotation pipelines faster.

On top of that, the COCO dataset lends itself to transfer learning, where a model trained on one dataset serves as the starting point for training another.

COCO Classes

What is COCO used for and what can you do with it?

The COCO dataset is used for multiple CV tasks:

- Object detection and instance segmentation: COCO’s bounding boxes and per-instance segmentation masks span 80 categories, providing enough flexibility to work with different scene variations and annotation types.

- Image captioning: the dataset contains around a half-million captions that describe over 330,000 images.

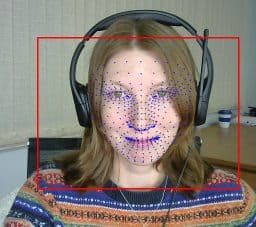

- Keypoint detection: COCO provides over 200,000 images and 250,000 person instances labeled with keypoints.

- Panoptic segmentation: COCO’s panoptic segmentation covers 91 stuff classes and 80 thing classes to create coherent and complete scene segmentations that benefit the autonomous driving industry, augmented reality, and so on.

- Dense pose: it offers more than 39,000 images and 56,000 person instances labeled with manually annotated correspondences.

- Stuff image segmentation: per-pixel segmentation masks with 91 stuff categories are also provided by the dataset.

COCO Dataset Formats

COCO stores data in a JSON file organized into five sections: info, licenses, categories, images, and annotations. You can create a separate JSON file for each of the training, testing, and validation splits.
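As a minimal sketch of that layout, the following Python snippet checks that a COCO-style dictionary contains the five top-level sections listed above. The `missing_sections` helper and the empty `skeleton` are hypothetical names introduced for illustration; they are not part of any COCO tooling.

```python
import json

# Top-level sections named in the text above.
REQUIRED_SECTIONS = ("info", "licenses", "categories", "images", "annotations")


def missing_sections(dataset: dict) -> list:
    """Return the required top-level sections absent from a COCO-style dict."""
    return [key for key in REQUIRED_SECTIONS if key not in dataset]


# Hypothetical, empty dataset skeleton used only for illustration.
skeleton = {
    "info": {},
    "licenses": [],
    "categories": [],
    "images": [],
    "annotations": [],
}

# Round-trip through JSON text, as you would when saving and reloading a split.
reloaded = json.loads(json.dumps(skeleton))
print(missing_sections(reloaded))
print(missing_sections({"images": [], "annotations": []}))
```

A check like this can be run once per split file before training, so that a missing section is caught early rather than deep inside a data loader.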

Info: Provides a high-level description of the dataset.

    "info": {
        "year": int,
        "version": str,
        "description": str,
        "contributor": str,
        "url": str,
        "date_created": datetime
    }

Example:

    "info": {
        "year": 2021,
        "version": "1.2",
        "description": "Pets dataset",
        "contributor": "Pets inc.",
        "url": "https://sampledomain.org",
        "date_created": "2021/07/19"
    }

Licenses: Provides a list of image licenses that apply to images in the dataset.

    "licenses": [{
        "id": int,
        "name": str,
        "url": str
    }]

Example:

    "licenses": [{
        "id": 1,
        "name": "Free license",
        "url": "https://sampledomain.org"
    }]

Categories: Provides a list of categories and supercategories.

    "categories": [{
        "id": int,
        "name": str,
        "supercategory": str,
        "isthing": int,
        "color": list
    }]
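Annotations refer to categories by numeric id, so a lookup table is usually the first thing built from this section. The sketch below assumes hypothetical category records that mirror the pet example used in this post, and builds an id-to-name map plus a grouping by supercategory.

```python
from collections import defaultdict

# Hypothetical categories, mirroring the pet example used in this post.
categories = [
    {"id": 1, "name": "poodle", "supercategory": "dog", "isthing": 1, "color": [1, 0, 0]},
    {"id": 2, "name": "ragdoll", "supercategory": "cat", "isthing": 1, "color": [2, 0, 0]},
]

# Map each category id to its human-readable name.
id_to_name = {cat["id"]: cat["name"] for cat in categories}

# Group category names under their supercategory.
names_by_supercategory = defaultdict(list)
for cat in categories:
    names_by_supercategory[cat["supercategory"]].append(cat["name"])

print(id_to_name[2])
print(dict(names_by_supercategory))
```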

Example:

    "categories": [
        {
            "id": 1,
            "name": "poodle",
            "supercategory": "dog",
            "isthing": 1,
            "color": [1, 0, 0]
        },
        {
            "id": 2,
            "name": "ragdoll",
            "supercategory": "cat",
            "isthing": 1,
            "color": [2, 0, 0]
        }
    ]

Images: Provides all the image information in the dataset without bounding box or segmentation information.

    "images": [{
        "id": int,
        "width": int,
        "height": int,
        "file_name": str,
        "license": int,
        "flickr_url": str,
        "coco_url": str,
        "date_captured": datetime
    }]
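Each image record points at a license through its integer `license` field. As a rough sketch, assuming hypothetical records shaped like the ones in this post, the link can be resolved with a dictionary keyed by license id. The `license_name` helper is an illustrative name, not an existing API.

```python
# Hypothetical records, shaped like the licenses and images entries in this post.
licenses = [{"id": 1, "name": "Free license", "url": "https://sampledomain.org"}]
images = [
    {"id": 122214, "width": 640, "height": 640, "file_name": "84.jpg", "license": 1},
]

# Index licenses by id so each image lookup is a single dictionary access.
licenses_by_id = {lic["id"]: lic for lic in licenses}


def license_name(image: dict) -> str:
    """Look up the license name for an image; 'unknown' if the id is not listed."""
    record = licenses_by_id.get(image.get("license"))
    return record["name"] if record else "unknown"


for image in images:
    print(image["file_name"], "->", license_name(image))
```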

Example:

    "images": [{
        "id": 122214,
        "width": 640,
        "height": 640,
        "file_name": "84.jpg",
        "license": 1,
        "date_captured": "2021-07-19 17:49"
    }]

Annotations: Provides a list of every individual object annotation from each image in the dataset.

    "annotations": [{
        "id": int,
        "image_id": int,
        "category_id": int,
        "segmentation": RLE or [polygon],
        "area": float,
        "bbox": [x, y, width, height],
        "iscrowd": 0 or 1
    }]
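Two of these fields often need conversion before use. The `bbox` stores the top-left corner plus width and height, while many libraries expect corner coordinates, and a polygon is a flat list of alternating x and y values. The sketch below uses hypothetical helper names; the shoelace formula gives the geometric area of a single polygon, which can differ slightly from an `area` value computed from a rasterized mask.

```python
def bbox_to_corners(bbox):
    """Convert COCO [x, y, width, height] to [x_min, y_min, x_max, y_max]."""
    x, y, w, h = bbox
    return [x, y, x + w, y + h]


def polygon_area(flat_points):
    """Shoelace area of one polygon given as a flat [x1, y1, x2, y2, ...] list."""
    xs = flat_points[0::2]
    ys = flat_points[1::2]
    n = len(xs)
    twice_area = 0.0
    for i in range(n):
        j = (i + 1) % n  # next vertex, wrapping back to the first
        twice_area += xs[i] * ys[j] - xs[j] * ys[i]
    return abs(twice_area) / 2.0


print(bbox_to_corners([10, 20, 30, 40]))
print(polygon_area([0, 0, 4, 0, 4, 3, 0, 3]))
```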

Example (RLE segmentation):

    "annotations": [{
        "segmentation": {
            "counts": [34, 55, 10, 71],
            "size": [240, 480]
        },
        "area": 600.4,
        "iscrowd": 1,
        "image_id": 122214,
        "bbox": [473.05, 395.45, 38.65, 28.92],
        "category_id": 15,
        "id": 934
    }]
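In uncompressed RLE, `counts` holds alternating run lengths of background and foreground pixels, starting with background, and the pixels are read column by column; `size` is `[height, width]`. The following sketch expands such a record into a 2D mask in plain Python. The `decode_uncompressed_rle` name and the tiny 2x3 mask are made up for illustration, and the sketch does not handle the compressed string form of `counts`.

```python
def decode_uncompressed_rle(rle):
    """Expand {"counts": [...], "size": [height, width]} into a 2D list of 0/1."""
    height, width = rle["size"]
    if sum(rle["counts"]) != height * width:
        raise ValueError("run lengths must sum to height * width")

    # Runs alternate between background (0) and foreground (1), starting with 0.
    flat = []
    value = 0
    for run in rle["counts"]:
        flat.extend([value] * run)
        value = 1 - value

    # Pixels are stored column by column, so index k maps to row k % height.
    mask = [[0] * width for _ in range(height)]
    for k, pixel in enumerate(flat):
        mask[k % height][k // height] = pixel
    return mask


# Hypothetical 2x3 mask: runs of 1 zero, 2 ones, 3 zeros.
tiny = {"counts": [1, 2, 3], "size": [2, 3]}
for row in decode_uncompressed_rle(tiny):
    print(row)
```

The length check is a deliberate design choice: run lengths that do not cover the whole image usually indicate a truncated or mismatched record, and failing early is safer than returning a partial mask.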

Example (polygon segmentation):

    "annotations": [{
        "segmentation": [[34, 55, 10, 71, 76, 23, 98, 43, 11, 8]],
        "area": 600.4,
        "iscrowd": 0,
        "image_id": 122214,
        "bbox": [473.05, 395.45, 38.65, 28.92],
        "category_id": 15,
        "id": 934
    }]

The Key Points

Machines’ ability to simulate the human eye is not as far-fetched as it used to be. In fact, the CV industry is expected to exceed $48.6 billion by 2022. Much of the success of CV is credited to the training data that is fed to the model. The COCO dataset, in particular, holds a special place among AI accomplishments, which makes it worth exploring and potentially using to train your model. We hope this article expands your understanding of COCO and fosters effective decision-making for your final model rollout.

About SuperAnnotate

SuperAnnotate is helping companies build the next generation of computer vision products with its end-to-end platform and integrated marketplace of managed annotation service teams. SuperAnnotate provides comprehensive annotation tooling, robust collaboration, and quality management systems, NoCode Neural Network training and automation, as well as a data review and curation system to successfully develop and scale computer vision projects. Everyone from researchers to startups to enterprises all over the world trust SuperAnnotate to build higher-quality training datasets up to 10x faster while significantly improving model performance. SuperAnnotate was recognized as one of the world’s top 100 AI companies in 2021 by CB Insights.

This article was initially published on SuperAnnotate Blog.

About the Author:

Tigran Petrosyan

Co-founder & CEO at SuperAnnotate

Physicist turned tech enthusiast and entrepreneur. After earning his master’s degree in Physics from ETH Zurich in Switzerland, Tigran pursued his Ph.D. in Biomedical Imaging and Photonics. Just before graduating, Tigran dropped out of his Ph.D. program to start SuperAnnotate with his brother, following his passion for building comprehensive teams and making products people love.
