Introduction

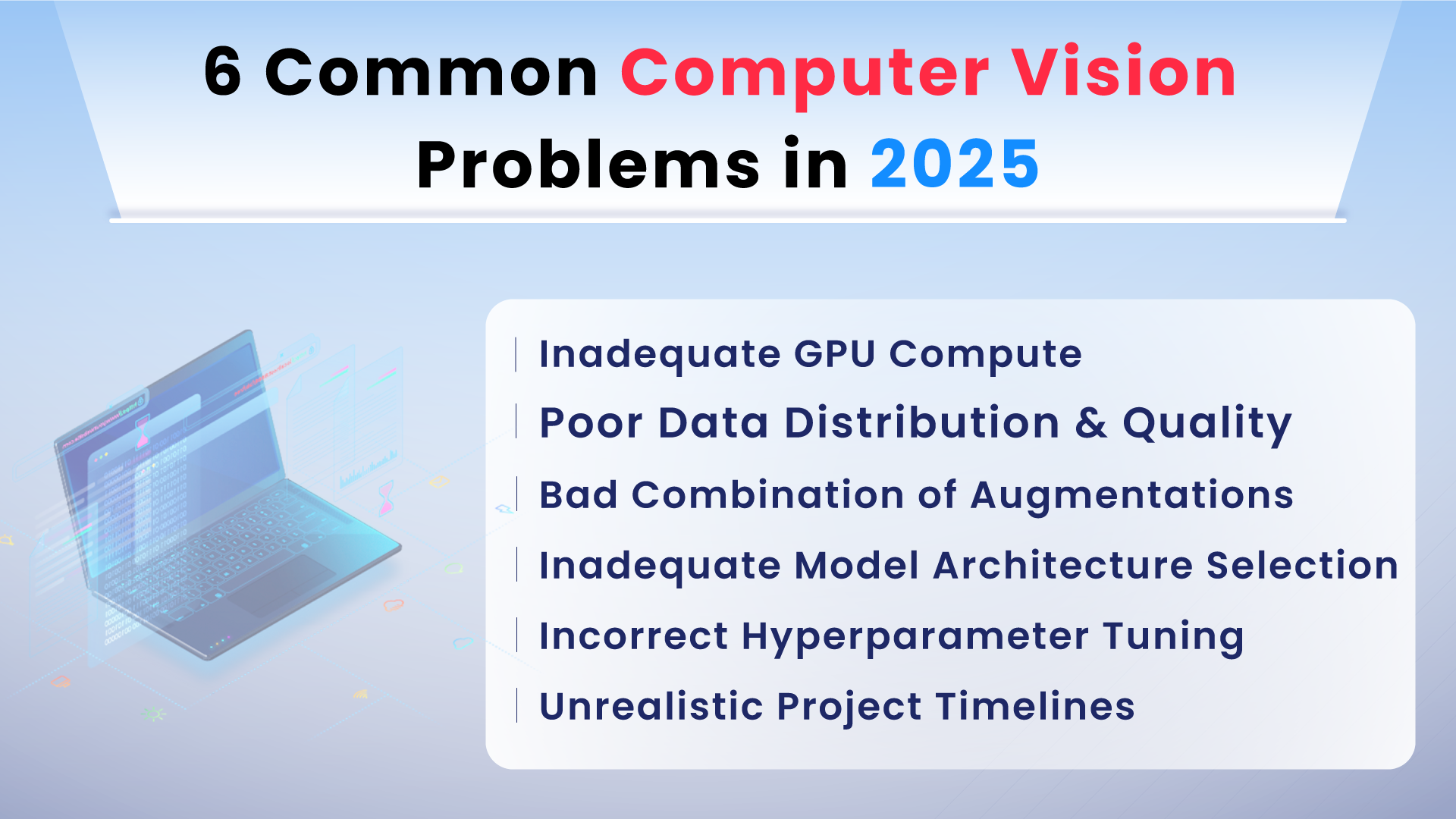

Computer Vision is a subset of Artificial Intelligence that has seen a huge surge in demand in recent years. We owe this to the incredible computing power we have today and the vast availability of data. We’ve all used a Computer Vision application in some form in our daily lives, say the face unlock on our mobile devices or the filters we use on Instagram and Snapchat. But despite such awesome capabilities, numerous factors constrain its implementation.

In this read, we discuss the common Computer Vision problems, why they arise, and how they can be tackled.

Table of Contents

Introduction

Why do problems arise in Computer Vision?

Common Computer Vision Problems

Conclusion

Why do problems arise in Computer Vision?

Computer Vision systems pose many technical problems, chief among them the inherent complexity of interpreting visual data. Overcoming such issues helps us develop robust and adaptable vision systems. In this section, we’ll delve into why computer vision problems arise.

Visual Data Diversity

The diversity in visual representation, say illumination, perspective, or occlusion in objects, poses a big challenge. These variations need to be overcome to eliminate any visual discrepancies.

Dimensional Complexity

With every image composed of millions of pixels, dimensional complexity becomes another barrier one needs to cross. Techniques such as downscaling images or extracting compact features can help reduce it.

Dataset Integrity

The integrity of visual data could be breached in the form of compression anomalies or sensor noise. The balance between noise reduction and preservation of features needs to be achieved.

Internal Class Variations

Then, there is variability within the same classes. What does that mean? Well, the diversity of object categories poses a challenge for algorithms to identify unifying characteristics amongst a ton of variations. This requires distilling the quintessential attributes that define a category while disregarding superficial differences.

Real-time Decision Making

Real-time processing can be demanding. This comes into play when making decisions for autonomous navigation or interactive augmented reality, where computational frameworks and algorithms must perform optimally to deliver swift and accurate analysis.

Perception in Three Dimensions

This is not a problem per se but rather a crucial task: inferring three-dimensionality. This involves extracting three-dimensional insights from two-dimensional images. Here, algorithms must resolve the ambiguity of depth and spatial relationships.

Labeled Dataset Scarcity

The scarcity of annotated data or extensively labeled datasets poses another problem while training state-of-the-art models. This can be overcome using unsupervised and semi-supervised learning. Another reason why a computer vision problem could arise is that vision systems are susceptible to making wrong predictions, which can go unnoticed by researchers.

While we are on the topic of labeled dataset scarcity, we must also be familiar with improper labeling. This occurs when the label attached to an object does not match what the object actually is. It can result in inaccurate predictions during model deployment.

Ethical Considerations

Ethical considerations are paramount in Artificial Intelligence, and it is no different in Computer Vision. Concerns include biases in deep learning models and discriminatory outcomes. This emphasizes the need for a careful approach to dataset curation and algorithm development.

Multi-modal Implementation

Integrating computer vision into broader technological ecosystems like NLP or Robotics requires not just technical compatibility but also a shared understanding across the different modalities.

We’ve only scratched the surface of the causes of different machine vision issues. Now, we will move into the common computer vision problems and their solutions.

Common Computer Vision Problems

When working with deep learning algorithms and models, one tends to run into multiple problems before robust and efficient systems can be brought to life. In this section, we’ll discuss the common computer vision problems one encounters and their solutions.

Inadequate GPU Compute

GPUs, or Graphics Processing Units, were initially designed for accelerated graphical processing. Nvidia has been at the top of the leaderboard in the GPU scene. So what do GPUs have to do with Computer Vision? Well, this past decade has seen a surge in demand for GPUs to accelerate machine learning and deep learning training.

Finding the right GPU can be a daunting task. Big GPUs come at a premium price, and if you are thinking of moving to the cloud, cloud GPUs see frequent shortages. GPU usage needs to be optimized since most of us do not have access to clusters of machines.

Memory is one of the most crucial aspects when choosing the proper GPU. Low VRAM (a low-memory GPU) can severely hinder the progress of big computer vision and deep learning projects.

One way to make the most of limited hardware is to improve GPU utilization. GPU utilization is the percentage of the graphics card’s compute capacity in use at a particular point in time.

So, what are some of the causes of poor GPU utilization?

- Some vision applications may need large amounts of memory bandwidth, meaning the GPU may wait a long time for data to be transferred to or from memory. This can be addressed by optimizing memory access patterns.

- A few computational tasks can be less intensive, meaning the GPU may not be used to the fullest. This could be conditional logic or other operations which are not apt for parallel processing.

- Another issue is the CPU not being able to supply data to the GPU fast enough, resulting in GPU idling. Asynchronous data transfer can fix this.

- Some operations like memory allocation or explicit synchronization can stop the GPU altogether and cause it to idle, which is, again, poor GPU utilization.

- Another cause of poor GPU utilization is inefficient parallelization of threads where the workload is not evenly distributed across all the cores of the GPU.

We need to effectively monitor and control GPU utilization, as doing so can significantly improve training performance. This is possible using tools like the NVIDIA System Management Interface (nvidia-smi), which offers real-time data on multiple aspects of the GPU, like memory consumption, power usage, and temperature. Let us look at how we can better optimize GPU usage.

- Batch size adjustments: Larger batch sizes consume more memory but can also improve overall throughput. One step to boost GPU utilization is modifying the batch size while training the model. Testing various batch sizes helps us strike the right balance between memory usage and performance.

- Mixed precision training: Another solution to enhance the efficiency of the GPU is mixed precision training. It uses lower-precision data types, such as 16-bit floating point, for calculations that Tensor Cores can accelerate. This method reduces computation time and memory demands, usually with little or no loss in accuracy.

- Distributed Training: Another way to handle heavy GPU workloads is to distribute them across multiple GPUs. Frameworks such as TensorFlow’s MirroredStrategy or PyTorch’s DistributedDataParallel simplify the implementation of distributed training.
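To see whether adjustments like these actually change utilization, we can poll nvidia-smi while training. The sketch below is a minimal example, assuming the `nvidia-smi` CLI is installed and on the PATH; the choice of fields and the helper names (`parse_gpu_stats`, `query_gpu_stats`) are illustrative, and the sample line is made up so the parsing logic can run on a machine without a GPU.

```python
import subprocess

# Fields passed to `nvidia-smi --query-gpu`; this selection is an illustrative choice.
FIELDS = ["utilization.gpu", "memory.used", "memory.total", "power.draw", "temperature.gpu"]


def _to_number(text):
    """Convert a field to float; fields the GPU does not report come back as non-numeric text."""
    try:
        return float(text)
    except ValueError:
        return None


def parse_gpu_stats(csv_text):
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    stats = []
    for line in csv_text.strip().splitlines():
        values = [_to_number(v.strip()) for v in line.split(",")]
        stats.append(dict(zip(FIELDS, values)))
    return stats


def query_gpu_stats():
    """Run nvidia-smi (needs an NVIDIA driver) and return the parsed stats."""
    cmd = ["nvidia-smi", f"--query-gpu={','.join(FIELDS)}", "--format=csv,noheader,nounits"]
    output = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return parse_gpu_stats(output)


# Hypothetical sample output for a single GPU (utilization %, MiB used, MiB total, W, deg C).
sample = "37, 5120, 8192, 95.40, 61\n"
gpu = parse_gpu_stats(sample)[0]
memory_fraction = gpu["memory.used"] / gpu["memory.total"]
print(f"GPU busy {gpu['utilization.gpu']:.0f}% of the time, memory {memory_fraction:.0%} full")
```

On a machine with an NVIDIA GPU, calling `query_gpu_stats()` every few seconds during training gives a rough picture of whether a larger batch size or mixed precision is paying off.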

Two standard series of Nvidia GPUs are RTX and GTX, where RTX is the newer, more powerful series and GTX is the older one. Before investing in either, it is essential to research them. A few factors to note when choosing the right GPU include analyzing the project requirements and the memory needed for the computations. A good starting point is to have at least 8GB of video RAM for seamless deep learning model training.

If you are on a budget, then there are alternatives like Google Colab or Azure that offer free access to GPUs for a limited time period. So you can complete your vision projects without needing to invest in a GPU.

As seen, hardware issues like limited GPU compute are pretty common when training models, but there are loads of ways to work around them.

Poor Data Distribution and Quality

The quality of the dataset being fed into your vision model is essential. Every change made to the annotations should translate to better performance in the project. Rectifying inaccuracies in the labels and annotations can drastically improve the overall accuracy of the production models.

Poor quality data within image or video datasets can pose a big problem to researchers. Another issue can be not having access to quality data, which will cause us to be unable to produce the desired output.

Although there are AI-assisted automation tools for labeling data, improving the quality of these datasets can be time-consuming. With thousands of images and videos in a dataset, looking through each of them at a granular level for inaccuracies can be a painstaking task.

Suboptimal data distribution can significantly undermine the performance and generalization capabilities of these models. Let us look at some causes of poor data distribution or errors and their solutions.

Mislabeled Images

Mislabeled images occur when there is a conflict between the assigned categorical or continuous label and the actual visual content depicted within the image. This could stem from

- Manual annotation processes

- Algorithmic misclassifications in automated labeling systems, or

- Ambiguous visual representations susceptible to subjective interpretations

If mislabeled images exist within training datasets, it can lead to incorrect feature-label associations within the learning algorithms. This could cause degradation in model accuracy and a diminished capacity for the model to generalize from the training data to novel, unseen datasets.

To overcome mislabeled images, we can

- Implement rigorous dataset auditing protocols

- Leverage consensus labeling through multiple annotators to ensure label accuracy

- Apply machine learning algorithms that identify and correct mislabeled instances through iterative refinement
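Consensus labeling from the list above can be sketched with a simple majority vote. This is a minimal illustration, not a full annotation workflow: the file names, the votes, and the 0.6 agreement threshold are all hypothetical, and images without enough agreement are flagged for manual review rather than given a label.

```python
from collections import Counter


def consensus_label(votes, min_agreement=0.6):
    """Return (label, agreement); label is None when annotators disagree too much."""
    counts = Counter(votes)
    label, top_count = counts.most_common(1)[0]
    agreement = top_count / len(votes)
    if agreement < min_agreement:
        return None, agreement  # flag for manual review
    return label, agreement


# Hypothetical votes from three annotators per image.
annotations = {
    "img_001.png": ["cat", "cat", "cat"],
    "img_002.png": ["dog", "cat", "dog"],
    "img_003.png": ["dog", "cat", "fox"],
}

resolved = {name: consensus_label(votes) for name, votes in annotations.items()}
needs_review = [name for name, (label, _) in resolved.items() if label is None]
print(resolved)
print("Needs review:", needs_review)
```

Raising `min_agreement` makes the dataset cleaner at the cost of sending more images back to annotators.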

Missing Labels

Another issue one can face is when a subset of images within a dataset does not have any labels. This could be due to

- oversight in the annotation process

- the prohibitive scale of manual labeling efforts, or

- failures in automated detection algorithms to identify relevant features within the images

Missing labels can create biased training processes when a portion of a dataset is void of labels. Here, deep learning models are exposed to an incomplete representation of the data distribution, resulting in models that perform poorly on the kinds of images that were left unlabeled.

By leveraging semi-supervised learning techniques, we can mitigate the impact of missing labels. By utilizing both labeled and unlabeled data in model training, we can enhance the model’s exposure to the underlying data distribution. Also, by deploying more efficient detection algorithms, we can reduce the incidence of missing labels.
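One common semi-supervised technique is pseudo-labeling: a model trained on the labeled portion predicts labels for the unlabeled images, and only confident predictions are added to the training set. The sketch below assumes we already have per-class probabilities for each unlabeled image; the probabilities, file names, and the 0.9 threshold are hypothetical.

```python
def select_pseudo_labels(predictions, threshold=0.9):
    """Keep unlabeled samples whose top predicted class probability meets the threshold."""
    selected = {}
    for name, probabilities in predictions.items():
        best_class = max(probabilities, key=probabilities.get)
        if probabilities[best_class] >= threshold:
            selected[name] = best_class
    return selected


# Hypothetical model outputs for three unlabeled images.
unlabeled_predictions = {
    "img_101.png": {"cat": 0.97, "dog": 0.03},
    "img_102.png": {"cat": 0.55, "dog": 0.45},
    "img_103.png": {"cat": 0.05, "dog": 0.95},
}

pseudo_labels = select_pseudo_labels(unlabeled_predictions)
print(pseudo_labels)
```

The uncertain image (`img_102.png`) stays unlabeled, which keeps low-confidence guesses from polluting the training set.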

Unbalanced Data

Unbalanced data occurs when certain classes are significantly more prevalent than others, resulting in a disproportionate representation of classes.

Much like missing labels, training on unbalanced datasets can bias machine learning models towards the more frequently represented classes. This can drastically affect the model’s ability to accurately recognize and classify instances of underrepresented classes and can severely limit its applicability in scenarios requiring equitable performance across various classes.

Unbalanced data can be counteracted through techniques like

- Oversampling of minority classes

- Undersampling of majority classes

- Synthetic data generation via techniques such as Generative Adversarial Networks (GANs), or

- Implementation of custom loss functions
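Two of the techniques above can be sketched in a few lines. The example below computes inverse-frequency class weights (one simple way to build a weighted, custom loss) and randomly oversamples the minority class. The class names, file names, and the weighting formula `total / (num_classes * class_count)` are assumptions chosen for illustration.

```python
import random
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by total / (num_classes * class_count)."""
    counts = Counter(labels)
    total, num_classes = len(labels), len(counts)
    return {cls: total / (num_classes * count) for cls, count in counts.items()}


def oversample(samples, labels, seed=0):
    """Randomly duplicate minority-class samples until every class matches the largest one."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(items) for items in by_class.values())
    out_samples, out_labels = [], []
    for label, items in by_class.items():
        extra = [rng.choice(items) for _ in range(target - len(items))]
        out_samples.extend(items + extra)
        out_labels.extend([label] * target)
    return out_samples, out_labels


# Hypothetical dataset: 8 images of cars, 2 of bicycles.
labels = ["car"] * 8 + ["bicycle"] * 2
samples = [f"img_{i:03d}.png" for i in range(len(labels))]

weights = inverse_frequency_weights(labels)
balanced_samples, balanced_labels = oversample(samples, labels)
print(weights)
print(Counter(balanced_labels))
```

The weights would typically be passed to a weighted loss so that mistakes on the rare class cost more, while oversampling changes the data the model sees instead of the loss.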

It is paramount that we address the challenges associated with poor data distribution and quality, as they can lead to inefficient model performance or biases. One can develop robust, accurate, and fair computer vision models by incorporating advanced algorithmic strategies and continuous model evaluation.

Bad Combination of Augmentations

A huge limiting factor while training deep learning models is the lack of large-scale labeled datasets. This is where Data Augmentation comes into the picture.

What is Data Augmentation?

Data augmentation is the process of using image processing-based algorithms to distort data within certain limits and increase the number of available data points. It aids not only in increasing the data size but also in the model generalization for images it has not seen before. By leveraging Data Augmentation, we can limit data issues to some extent. A few data augmentation techniques include

- Image Shifts

- Cropping

- Horizontal Flips

- Translation

- Vertical Flips

- Gaussian noise

- Rotations
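A few of these techniques can be illustrated without any imaging library. The sketch below treats an image as a nested list of pixel values (an assumption made to keep the example dependency-free) and implements horizontal flips, vertical flips, and a simple shift; in practice a library would do this on real image arrays.

```python
def horizontal_flip(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def vertical_flip(image):
    """Reverse the order of the rows."""
    return [row[:] for row in image[::-1]]


def shift_right(image, pixels, fill=0):
    """Translate the image to the right, padding the vacated columns with `fill`."""
    if pixels <= 0:
        return [row[:] for row in image]
    width = len(image[0])
    pixels = min(pixels, width)
    return [[fill] * pixels + row[: width - pixels] for row in image]


# A tiny 2x3 grayscale "image" used purely for illustration.
image = [
    [1, 2, 3],
    [4, 5, 6],
]

augmented = [horizontal_flip(image), vertical_flip(image), shift_right(image, 1)]
for variant in augmented:
    print(variant)
```

Each variant is a new training example derived from the same original, which is how augmentation grows the dataset.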

Data augmentation generates a synthetic dataset that is larger than the original. If the model encounters issues in production, augmenting the images to create a more extensive training dataset can help it generalize better.

However, not every augmentation suits every task. Let us explore some ways in which combinations of augmentations go wrong, depending on the task.

Excessive Rotation

Excessive rotation can make it hard for the model to learn the correct orientation of objects. This can mainly be seen with tasks like object detection when the objects are typically found in standard orientations (e.g., street signs) or some orientations are unrealistic.

Heavy Noise

Excessive addition of noise to images can be counterproductive for tasks that require recognizing subtle differences between classes, for instance, the classification of species in biology. The noise can conceal essential features.

Random Cropping

Random cropping can lead to the removal of some essential parts of the image that are critical for correct classification or detection. For instance, randomly cropping parts of medical images might remove pathological features critical for diagnosis.

Excessive Brightness

Making extreme adjustments to brightness or contrast can alter the appearance of critical diagnostic features, leading the model to misinterpret them.

Aggressive Distortion

Applying aggressive geometric distortions (like extreme skewing or warping) can significantly alter the appearance of text in images, making it difficult for models to recognize the characters accurately in optical character recognition (OCR) tasks.

Color Jittering

Color jittering is another issue one can come across when dealing with data augmentation. For any task where the key distinguishing feature is color, excessive modifications to color, like brightness, contrast, or saturation, can distort the natural color distribution of the objects and mislead the model.

Avoiding such excessive augmentations needs a good understanding of the needs and limitations of the models. Let us explore some standard guidelines to help avoid bad augmentation practices.

Understand the Task and Data

First, we need to understand what the task is at hand, for instance, if it is classification or detection, and also the nature of the images. Then, we need to pick the apt form of augmentation. It is also good to understand the characteristics of your dataset. If your dataset includes images from various orientations, excessive rotation might not be necessary.

Use of Appropriate Augmentation Libraries

Try utilizing libraries like Albumentations, imgaug, or TensorFlow’s and PyTorch’s built-in augmentation functionalities. They offer extensive control over the augmentation process, allowing us to specify the degree of augmentation that is applied.

Implement Conditional Augmentation

Use augmentations based on the image’s content or metadata. For example, avoid unnecessary cropping on images where essential features are likely to be near the edges.

Dynamically adjust the intensity of augmentations based on the model’s performance or during different training phases.

Augmentation Parameters Fine-tuning

Find the right balance that improves model robustness without distorting the data beyond recognition. This can be achieved by carefully tuning the parameters.

Make incremental changes, start with minor augmentations, and gradually increase their intensity, monitoring the impact on model performance.

Optimize Augmentation Pipelines

When a pipeline chains multiple augmentations, it must be optimized as a whole. We must ensure that combining augmentations does not lead to unrealistic images.

Use random parameters within reasonable bounds to ensure diversity without extreme distortion.
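Sampling parameters within bounds, and scaling those bounds with an intensity knob, can be sketched as follows. The parameter names, the bounds, and the `intensity` argument are all hypothetical; real values depend on the task and dataset. An intensity of 0 leaves images unchanged, and 1 uses the full range, so the intensity can be raised gradually during training.

```python
import random

# name: (neutral value, lowest allowed, highest allowed); hypothetical bounds.
AUGMENTATION_BOUNDS = {
    "rotation_degrees": (0.0, -15.0, 15.0),
    "brightness_factor": (1.0, 0.8, 1.2),
    "noise_std": (0.0, 0.0, 0.05),
}


def sample_augmentation_params(bounds, intensity=1.0, rng=None):
    """Sample each parameter uniformly within its bounds, shrunk toward neutral by `intensity`."""
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be between 0 and 1")
    rng = rng or random.Random()
    params = {}
    for name, (neutral, low, high) in bounds.items():
        scaled_low = neutral + intensity * (low - neutral)
        scaled_high = neutral + intensity * (high - neutral)
        params[name] = rng.uniform(scaled_low, scaled_high)
    return params


rng = random.Random(42)
mild = sample_augmentation_params(AUGMENTATION_BOUNDS, intensity=0.25, rng=rng)
full = sample_augmentation_params(AUGMENTATION_BOUNDS, intensity=1.0, rng=rng)
print("mild:", mild)
print("full:", full)
```

Because every sampled value stays inside a declared range, the pipeline stays diverse without producing the extreme distortions discussed earlier.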

Validation and Experimentation

Regularly validate the model on a non-augmented validation set to ensure that augmentations are improving the model’s ability to generalize rather than memorize noise.

Experiment with different augmentation strategies in parallel to compare their impact on model performance.

As seen above, a ton of issues arise when dealing with data augmentation, like excessive brightness, color jittering, or heavy noise. But by understanding the task, fine-tuning augmentation parameters, and validating on non-augmented data, we can curb bad combinations of augmentations.

Inadequate Model Architecture Selection

Selecting an inadequate model architecture is another common computer vision problem, and it can be attributed to many factors. A poor choice affects the overall performance, efficiency, and applicability of the model for specific computational tasks.

Let us discuss some of the common causes of poor model architecture selection.


Lack of Domain Understanding

A common issue is the lack of knowledge of the problem space or the requirements for the task. Different architectures suit different kinds of data. For instance, Convolutional Neural Networks (CNNs) are well suited to image data, whereas Recurrent Neural Networks (RNNs) are designed for sequential data. Having a superficial understanding of the task nuances can lead to the selection of an architecture that is not aligned with the task requirements.

Computational Limitations

We must always keep in mind the computational resources we have available. Models that require high computational power and memory may not be viable for deployment. This could force the selection of simpler and less capable models.

Data Constraints

Choosing the right architecture heavily depends on the volume and integrity of available data. Intricate models require voluminous datasets of high-quality, labeled data for effective training. In scenarios with data paucity, noise, or imbalance, a more sophisticated model might not yield superior performance and could overfit.

Limited Familiarity with Architectural Paradigms

A lot of novel architectures and models are emerging with the huge strides made in deep learning. However, researchers default to utilizing models they are familiar with, which may not be optimal for their desired outcomes. One must always be updated with the latest contributions in the realm of deep learning and computer vision to analyze the advantages and limitations of the new architectures.

Task Complexity Underestimation

Another cause for poor architecture selection is failing to accurately assess the complexity of the task. This may result in adopting simpler models that lack the ability to capture the essential features within the data. This can be attributed to an incomplete or missing exploratory data analysis, or to not fully acknowledging the data’s subtleties and variances.

Overlooking Deployment Constraints

The deployment environment has a significant influence on the architecture selection process. For real-time applications or deployment on devices with limited processing capabilities (e.g., smartphones, IoT devices), architectures optimized for memory and computation efficiency are necessary.

Managing these poor architectural selections requires being updated on the latest architectures, as well as a thorough understanding of the problem domain and data characteristics and a careful consideration of the pragmatic constraints associated with model deployment and functionality.

Now that we’ve explored the possible causes of inadequate model architecture selection, let us see how to avoid them.

Balanced Model

Two common challenges one could face are an overfitting model, which is too complex and memorizes the training data, and an underfitting model, which is too simple and fails to infer patterns from the data. We can leverage techniques like regularization or cross-validation to optimize the models’ performance and avoid overfitting or underfitting.
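Cross-validation can be sketched by generating k train/validation splits in which every sample is held out exactly once. The function name `k_fold_indices` and the choice of 10 samples and 5 folds are illustrative; the model training and scoring that would run on each split are left out.

```python
import random


def k_fold_indices(num_samples, k=5, seed=0):
    """Yield (train_indices, val_indices); each sample lands in exactly one validation fold."""
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i, val in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, val


splits = list(k_fold_indices(num_samples=10, k=5))
for fold_number, (train, val) in enumerate(splits):
    print(f"fold {fold_number}: train on {len(train)} samples, validate on {sorted(val)}")
```

A large gap between training and validation scores across the folds hints at overfitting, while poor scores on both hint at underfitting.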

Understanding Model Limitations

Next, we need to be well aware of the limitations and assumptions of the different algorithms and models. Different models have different strengths and weaknesses. They all require different conditions or properties of the data for optimal performance. For instance, some models are sensitive to noise or outliers, and some are more suitable for particular tasks like detection, segmentation, or classification. We must know the theory and logic behind every model and check if the data fulfills the desired conditions.

Curbing Data Leakage

Data leakage occurs when information from the test dataset is used to train the model. This can result in biased estimates of the model’s accuracy and performance. A good rule of thumb is to split the data into training and test datasets before moving to any of the steps like preprocessing or feature engineering. One can also avoid using features that are influenced by the target variable.
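The split-first rule can be sketched with a simple standardization step. In this minimal example the "features" are hypothetical per-image mean intensities; the point is that the mean and standard deviation are computed from the training split only and then reused for the test split.

```python
import random
import statistics


def train_test_split(values, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data and split it before any preprocessing."""
    shuffled = values[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def fit_standardizer(train_values):
    """Compute mean and standard deviation from the training data only."""
    mean = statistics.fmean(train_values)
    std = statistics.pstdev(train_values) or 1.0  # guard against a zero deviation
    return mean, std


def standardize(values, mean, std):
    return [(v - mean) / std for v in values]


# Hypothetical per-image mean intensities (20 values).
pixel_means = [float(v) for v in range(0, 100, 5)]

train, test = train_test_split(pixel_means)
mean, std = fit_standardizer(train)          # statistics never see the test split
train_scaled = standardize(train, mean, std)
test_scaled = standardize(test, mean, std)   # test data reuses the training statistics
print(f"train mean after scaling: {statistics.fmean(train_scaled):.3f}")
```

Computing `mean` and `std` on the full dataset before splitting would let test information leak into training, which is exactly what this ordering avoids.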

Continual Assessment

A common misunderstanding is when researchers assume that deployment is the last stage of the project. We need to continually monitor, analyze, and improve on the deployed models. The accuracy of vision models can decline over time as they generalize based on a subset of data. Additionally, they can struggle to adapt to complex user inputs. These reasons further emphasize the need to monitor models post-deployment.

A few steps for continual assessment and improvement include

- Implementation of a robust monitoring system

- Gathering user feedback

- Leveraging the right tools for optimal monitoring

- Referring to real-world scenarios

- Addressing underlying issues by analyzing the root cause of loss of model efficiency or accuracy
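A monitoring system along these lines can start very small. The sketch below tracks accuracy over a sliding window of recent predictions and flags a drop relative to a baseline. The class name `AccuracyMonitor`, the window size, and the allowed drop are hypothetical, and it assumes ground-truth labels eventually arrive, for example through user feedback.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over recent predictions and flag drops below the baseline."""

    def __init__(self, baseline_accuracy, window_size=100, max_drop=0.05):
        self.baseline = baseline_accuracy
        self.max_drop = max_drop
        self.outcomes = deque(maxlen=window_size)  # True for each correct prediction

    def record(self, predicted, actual):
        self.outcomes.append(predicted == actual)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self):
        accuracy = self.rolling_accuracy()
        return accuracy is not None and accuracy < self.baseline - self.max_drop


# Hypothetical stream: 7 correct predictions followed by 3 wrong ones.
monitor = AccuracyMonitor(baseline_accuracy=0.90, window_size=10)
for predicted, actual in [("cat", "cat")] * 7 + [("cat", "dog")] * 3:
    monitor.record(predicted, actual)

print(monitor.rolling_accuracy(), monitor.needs_attention())
```

When `needs_attention()` turns true, that is the cue to start the root-cause analysis from the last step in the list.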

Much like other computer vision problems, selecting the right model architecture demands diligence: assessing the computing resources at one’s disposal and the data constraints, building good domain expertise, and finding a model that neither overfits nor underfits. Following these steps will curb poor selections in model architecture.

Incorrect Hyperparameter Tuning

Before we delve into the reasons behind poor hyperparameter tuning and its solutions, let us look at what it is.

What is a Hyperparameter?

Hyperparameters are configuration values that the model does not learn from the data; they are set before training begins. They guide the learning process and affect how the model behaves during training and prediction. Learning rate, batch size, and number of layers are a few examples of hyperparameters. They can be set based on the computational resources, the complexity of the task, and the characteristics of the datasets.

Incorrect hyperparameter tuning in deep learning can adversely affect model performance, training efficiency, and generalization ability. Hyperparameters are critical to the performance of the trained model and the behavior of the training algorithm. Here are some of the downsides of incorrect hyperparameter tuning.

Overfitting or Underfitting

If hyperparameters are not tuned correctly, a model may overfit, capturing noise in the training data as a legitimate pattern. Examples include too many layers or neurons without appropriate regularization, which gives the model too high a capacity.

Underfitting, on the other hand, can result when incorrect tuning leaves the model too simple (too low a capacity) to capture the underlying structure of the data. Alternatively, with too low a learning rate, the training process might halt before the model has learned enough from the data.

Poor Generalization

Incorrectly tuned hyperparameters can lead to a model that performs well on the training data but poorly on unseen data. This indicates that the model has not generalized well, which is often a result of overfitting.

Inefficient Training

A number of hyperparameters control the efficiency of the training process, including batch size and learning rate. If these parameters are not adjusted appropriately, the model will take much longer to train, requiring more computational resources than necessary. If the learning rate is too small, convergence might be slowed down, but if it is too large, the training process may oscillate or diverge.

Difficulty in Convergence

An incorrect setting of the hyperparameters can make convergence difficult. For example, an excessively high learning rate can cause the model’s loss to fluctuate rather than decrease steadily.
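The effect of the learning rate is easy to see on a toy problem. The sketch below runs plain gradient descent on f(w) = w², a stand-in chosen for illustration rather than a real vision model, with three hypothetical learning rates: one too small, one reasonable, and one too large.

```python
def gradient_descent(learning_rate, steps=20, start=1.0):
    """Minimize f(w) = w**2 (gradient 2*w) and return the loss after each step."""
    w = start
    losses = []
    for _ in range(steps):
        w -= learning_rate * 2 * w
        losses.append(w ** 2)
    return losses


too_small = gradient_descent(0.01)   # converges, but very slowly
reasonable = gradient_descent(0.4)   # converges quickly
too_large = gradient_descent(1.1)    # overshoots more on every step and diverges

for name, losses in [("too small", too_small), ("reasonable", reasonable), ("too large", too_large)]:
    print(f"{name:>10}: first loss {losses[0]:.4f}, final loss {losses[-1]:.4g}")
```

With the smallest rate the loss is still far from zero after 20 steps, while with the largest rate each update flips the sign of `w` and grows its magnitude, so the loss keeps increasing.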

Resource Wastage

It takes considerable computational power and time to train deep learning models. Incorrect hyperparameter tuning can lead to a number of unnecessary training runs.

Model Instability

In some cases, hyperparameter configurations can lead to model instability, where small changes in the data or initialization of the model can lead to large variations in performance.

The use of systematic hyperparameter optimization strategies is crucial to mitigate these issues.

It is crucial to fine-tune these hyperparameters as they significantly affect the performance and the accuracy of the model.

Let us explore some of the common hyperparameters to tune.

- Learning Rate: Finding an optimal learning rate is crucial to prevent the model from updating its parameters too fast or too slowly during training.

- Batch Size: During model training, batch size determines how many samples are processed during each iteration. This influences the training dynamics, memory requirements, and generalization capability of the model. The batch size should be selected in accordance with the computational resources and the characteristics of the dataset on which the model will be trained.

- Network Architecture: Network architecture outlines the blueprint of a neural network, detailing the arrangement and connection of its layers. This includes specifying the total number of layers, identifying the variety of layers (like convolutional, pooling, or fully connected layers), and how they’re set up. The choice of network architecture is crucial and should be tailored to the task’s complexity and the computational resources at hand.

- Kernel Size: In the realm of convolutional neural networks (CNNs), kernel size is pivotal as it defines the scope of the receptive field for extracting features. This choice influences how well the model can discern detailed and spatial information. Adjusting the kernel size is a balancing act to ensure the model effectively captures both local and broader features.

- Dropout Rate: Dropout is a strategy to prevent overfitting by randomly omitting a proportion of the neural network’s units during the training phase. The dropout rate is the likelihood of each unit being omitted. By doing this, it pushes the network to learn more generalized features and lessens its reliance on any single unit.

- Activation Functions: These functions bring non-linearity into the neural network, deciding the output for each node. Popular options include ReLU (Rectified Linear Unit), sigmoid, and tanh. The selection of an activation function is critical as it influences the network’s ability to learn complex patterns and affects the stability of its training.

- Data Augmentation Techniques: Techniques like rotation, scaling, and flipping are used to introduce more diversity to the training data, enhancing its range. Adjusting hyperparameters related to data augmentation, such as the range of rotation angles, scaling factors, and the probability of flipping, can fine-tune the augmentation process. This, in turn, aids the model in generalizing better to new, unseen data.

- Optimization Algorithm: The selection of an optimization algorithm affects how quickly and smoothly the model learns during training. Popular algorithms include stochastic gradient descent (SGD), Adam, and RMSprop. Adjusting hyperparameters associated with these algorithms, such as momentum, learning rate decay, and weight decay, plays a significant role in optimizing the training dynamics.
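One systematic way to tune a handful of these hyperparameters is grid search, which simply tries every combination in a predefined search space. The sketch below is a minimal illustration: the search space values are hypothetical, and `validation_loss` is a made-up stand-in for training a model and measuring its loss on a validation set.

```python
import itertools

# Hypothetical search space covering three of the hyperparameters listed above.
SEARCH_SPACE = {
    "learning_rate": [1e-4, 1e-3, 1e-2],
    "batch_size": [16, 32, 64],
    "dropout_rate": [0.1, 0.3, 0.5],
}


def validation_loss(config):
    """Stand-in objective; a real one would train a model and return its validation loss."""
    return (
        abs(config["learning_rate"] - 1e-3) * 100
        + abs(config["batch_size"] - 32) / 64
        + abs(config["dropout_rate"] - 0.3)
    )


def grid_search(space, objective):
    """Evaluate every combination in the space and return the best one."""
    names = list(space)
    best_config, best_loss = None, float("inf")
    for values in itertools.product(*(space[name] for name in names)):
        config = dict(zip(names, values))
        loss = objective(config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss


best_config, best_loss = grid_search(SEARCH_SPACE, validation_loss)
print(best_config, best_loss)
```

Grid search grows expensive quickly as hyperparameters are added, so sampling a fixed number of random combinations from the same space is a common cheaper alternative.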


Unrealistic Project Timelines

This is a broader topic that affects all fields of study and does not pertain only to Computer Vision and Deep Learning. Unrealistic deadlines not only affect our psychological state and self-esteem but also destroy our morale. One main reason could be the individual setting unrealistic deadlines without being able to gauge the time or effort needed to complete the project or task at hand.

Now, bringing our attention to the realm of Computer Vision, deadlines could cover anything from collecting the data to deploying models. How do we tackle this? Let us look at a few steps we can take not only to keep us on time but also to deploy robust and accurate vision systems.

Define your Goals

Before we get into the nitty gritty of a Computer Vision project, we need to have a clear understanding of what we wish to achieve through it. This means identifying and defining the end goal, objectives, and milestones. This also needs to be communicated to the concerned team, which could be our colleagues, clients, and sponsors. This will eliminate any unrealistic timelines or misalignments.

Planning

Once we set our objectives, we come to our second step: planning and prioritization. This involves understanding and visualizing our workflow, choosing the appropriate tools, estimating costs and timelines, and analyzing the available resources, be they hardware or software. We must allocate them optimally, curbing any dependencies or risks and eradicating any assumptions that may affect the project.

Testing

Once we’ve got our workflow down, we begin the implementation and testing phase, where we code, debug, and validate the inferences made. One must remember the best practices of model development, documentation, code review, and framework testing. This could involve the appropriate usage of tools and libraries like OpenCV, PyTorch, TensorFlow, or Keras to help the models perform the tasks we trained them for, which could be segmentation, detection, or classification, as well as evaluating the models and their accuracy.

Review

This brings us to our final step, project review. We make inferences from the results, analyze the feedback, and make improvements based on it. We also need to check how well the project aligns with the suggestions given by sponsors or users and iterate where needed.

Keeping up with project deadlines can be a daunting task at first, but with more experience and the right mindset, we’ll have better time management and greater success in every upcoming project.

Conclusion

We’ve come to the end of this fun read. We’ve covered the six most common computer vision problems one encounters on their journey, ranging from the inadequacies of GPU computing all the way to unrealistic project timelines. We’ve delved into their causes and how they can be overcome by leveraging different methods and techniques. More fun reads in the realm of Artificial Intelligence, Deep Learning, and Computer Vision are coming your way. See you guys in the next one!
