OpenVINO

Introduction. Deep learning tools are widely used in vision applications across most industries, from facial recognition on mobile devices to Tesla’s self-driving cars. However, using the right tools is

Guest post by Aleksandr Voron. To keep the OpenVINO™ Toolkit focused on optimizing and deploying inference, we no longer include OpenCV and DL Streamer in our distribution packages. But not to

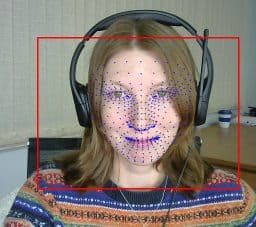

The Deep Learning Inference Engine backend from the Intel OpenVINO toolkit is one of the supported OpenCV DNN backends. The previous post mentioned that ARM CPU support has

We are pleased to announce that the Deep Learning Inference Engine backend in the OpenCV DNN module can now run inference on ARM CPUs. Previously, Inference Engine supported Intel hardware only:

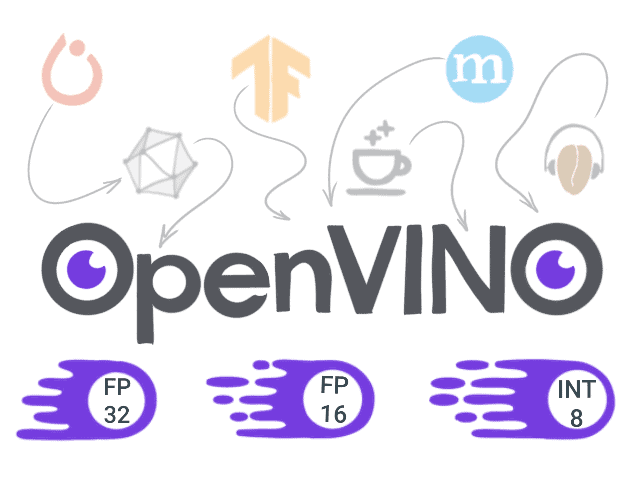

Are you looking for a fast way to run neural network inference on Intel platforms? Then the OpenVINO toolkit is exactly what you need. It provides a large number of optimizations

Many ground-breaking solutions based on neural networks are developed every day, and more people are adopting this technique to solve problems such as voice recognition in their daily lives. Because of