Alexey Perminov

We have already discussed several ways to convert your DL model to OpenVINO in previous blog posts (PyTorch and TensorFlow). Let’s try something more advanced now.

How a TensorFlow-trained model can be used and deployed to run with the OpenVINO Inference Engine

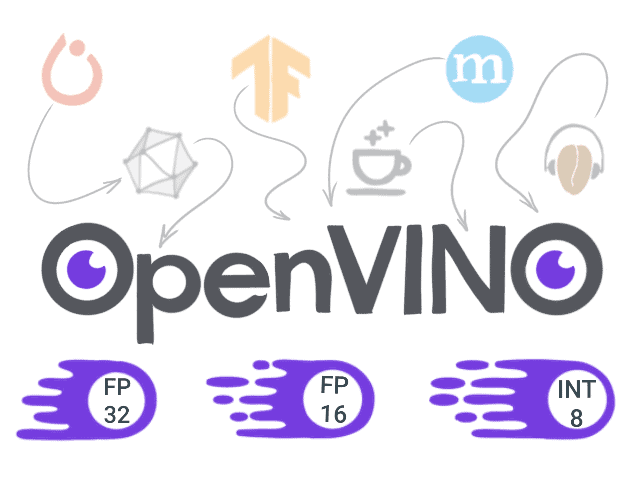

Are you looking for a fast way to run neural network inference on Intel platforms? Then the OpenVINO toolkit is exactly what you need. It provides a large number of optimizations
