About the author

Igor Murzov holds an M.S. in computer science. He is currently a software engineer at Xperience.AI, working on OpenCV and IoT software.

Introduction

In the last decade, 3D technology has become part of everyday life: we watch movies in 3D, play VR games, use 3D printers to create physical objects, and so on. Nowadays, even smartphones are being equipped with depth sensors to provide advanced 3D features, so it’s safe to say that in the near future having a 3D camera at hand will be completely ordinary. 3D cameras can be used almost anywhere: robotics, gaming, augmented reality, virtual reality, smart homes, healthcare, retail, automation, and so on. And I’m sure that they will be used even more widely in the future.

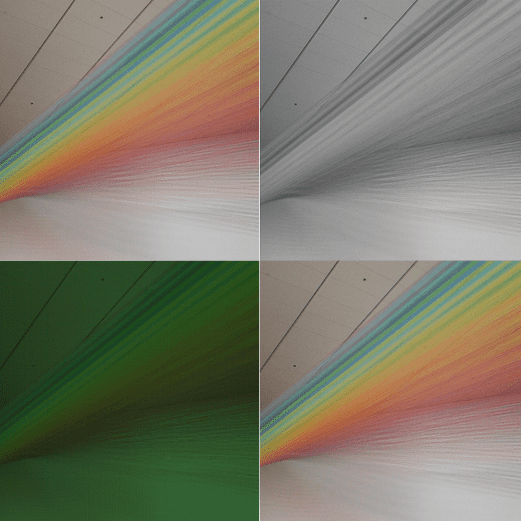

3D cameras enable much more advanced use cases. Traditional computer vision algorithms work on color pixmaps; a 3D camera adds another dimension of data, because depth information is available in addition to the color pixmap. This fundamentally expands the range of computer vision algorithms that can be applied to the data. In the sample images, you can see the color frame and the depth frame of the same scene (the depth frame is colored so that the darker the color, the closer the object in the scene). Looking at the color frame alone, it’s hard to distinguish the plant leaves from the leaves painted on the wall, but the depth data makes it easy.
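One simple way to render a depth map so that nearer objects look darker is a linear mapping from millimetres to gray levels. The sketch below is an assumption about how such a visualization could be produced, not the method used for the sample images; depthToGray, colorizeDepth, and the choice to draw missing measurements (depth 0) as white are all illustrative.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: maps a depth value in millimetres to an 8-bit gray
// level so that nearer objects are darker (0) and farther ones brighter.
// Assumption: depth 0 means "no measurement" and is drawn white (255), as are
// values at or beyond maxDepthMm, so holes do not look like very close objects.
std::uint8_t depthToGray(std::uint16_t depthMm, std::uint16_t maxDepthMm)
{
    assert(maxDepthMm > 0);
    if (depthMm == 0 || depthMm >= maxDepthMm)
        return 255;
    // Linear scaling of [0, maxDepthMm) onto [0, 255)
    return static_cast<std::uint8_t>(255u * depthMm / maxDepthMm);
}

// Applies depthToGray to every pixel of a row-major depth frame.
std::vector<std::uint8_t> colorizeDepth(const std::vector<std::uint16_t>& depthMm,
                                        std::uint16_t maxDepthMm)
{
    std::vector<std::uint8_t> gray;
    gray.reserve(depthMm.size());
    for (std::uint16_t d : depthMm)
        gray.push_back(depthToGray(d, maxDepthMm));
    return gray;
}
```

With a hypothetical 4 m range, a point 1 m away maps to gray level 63 and one 3 m away to 191. In OpenCV, a similar linear scaling is usually a single cv::Mat::convertTo call with CV_8U and a scale factor of 255.0 / maxDepthMm, although that leaves invalid (0) pixels black instead of white.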

In this post, we’ll take a look at how to work with the Orbbec Astra Pro camera using the open source OpenNI API. Orbbec is one of the leading manufacturers of 3D cameras. The Astra Pro camera provides high-end responsiveness, depth measurement, smooth gradients, and precise contours, as well as the ability to filter out low-quality depth pixels. The camera can stream HD video and VGA depth images at 30 frames per second.

So let’s learn how to work with the Astra Pro camera using the OpenCV library.

Installation

In order to use the Astra camera’s depth sensor with OpenCV, you should first install the Orbbec OpenNI2 SDK and then build OpenCV with support for that SDK enabled. There is no prebuilt OpenCV with OpenNI2 SDK support available, so you have to build it yourself.

- Download the latest version of the Orbbec OpenNI2 SDK from here.

- Unzip the archive, choose the build for your operating system, and follow the installation steps provided in the Readme file.

- Then configure OpenCV with OpenNI2 support enabled by setting the WITH_OPENNI2 flag to ON in CMake. You may also want to enable the BUILD_EXAMPLES flag to get a code sample that works with your Astra camera.

More detailed instructions can be found in the following tutorial.

Video streams setup

The Astra Pro camera has two sensors: a depth sensor and a color sensor. The depth sensor can be read through the OpenNI interface using the cv::VideoCapture class. The video stream from the color sensor is not available through the OpenNI API and is only provided via the regular camera interface. To get both depth and color frames, two cv::VideoCapture objects should be created:

```cpp
// Open depth stream
VideoCapture depthStream(CAP_OPENNI2_ASTRA);
// Open color stream
VideoCapture colorStream(0, CAP_V4L2);
```

The first object uses the OpenNI2 API to retrieve depth data. The second one uses the Video4Linux2 interface, which is available on Linux only, to access the color sensor. Note that the example above assumes that the Astra camera is the first camera in the system (index 0). If you have more than one camera connected, you may need to set the proper camera index explicitly.

Before using the created VideoCapture objects, we need to set up the streams’ parameters, that is, the VideoCapture properties. The most important parameters are frame width, frame height, and FPS. For this example, we’ll set the width and height of both streams to VGA resolution (640×480), the highest resolution that both sensors support, because having the same parameters for both streams makes color-to-depth data registration easier. We also disable mirroring of the depth stream:

```cpp
// Set color and depth stream parameters
colorStream.set(CAP_PROP_FRAME_WIDTH, 640);
colorStream.set(CAP_PROP_FRAME_HEIGHT, 480);
depthStream.set(CAP_PROP_FRAME_WIDTH, 640);
depthStream.set(CAP_PROP_FRAME_HEIGHT, 480);
depthStream.set(CAP_PROP_OPENNI2_MIRROR, 0);
```

To set or retrieve a property of a sensor data generator, use the cv::VideoCapture::set and cv::VideoCapture::get methods, respectively. The following properties of 3D cameras available through the OpenNI interface are supported for the depth generator:

- cv::CAP_PROP_FRAME_WIDTH – Frame width in pixels.

- cv::CAP_PROP_FRAME_HEIGHT – Frame height in pixels.

- cv::CAP_PROP_FPS – Frame rate in FPS.

- cv::CAP_PROP_OPENNI_REGISTRATION – Flag that registers the depth map to the image map by changing the depth generator’s viewpoint (if the flag is “on”) or resets this viewpoint to its normal one (if the flag is “off”). The images produced by registration are pixel-aligned, which means that every pixel in the image is aligned to a pixel in the depth image.

- cv::CAP_PROP_OPENNI2_MIRROR – Flag to enable or disable mirroring for this stream. Set it to 0 to disable mirroring.

The following properties are read-only:

- cv::CAP_PROP_OPENNI_FRAME_MAX_DEPTH – Maximum supported depth of the camera, in mm.

- cv::CAP_PROP_OPENNI_BASELINE – Baseline value, in mm.
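The baseline is what links depth to disparity. As a sketch of the standard stereo relation d = b·f / Z (disparity d in pixels, baseline b in mm, focal length f in pixels, depth Z in mm), which is assumed here rather than taken from this post, the hypothetical helpers below convert in both directions. The focal length is an input you would have to obtain separately; OpenCV exposes a cv::CAP_PROP_OPENNI_FOCAL_LENGTH property (in pixels) for OpenNI devices.

```cpp
#include <cassert>
#include <cstdint>

// Standard stereo relation (assumed):
//   disparity [px] = baseline [mm] * focal length [px] / depth [mm]
// A depth of 0 is treated as "no measurement" and yields disparity 0.
double disparityFromDepth(std::uint16_t depthMm, double baselineMm, double focalPx)
{
    assert(baselineMm > 0.0 && focalPx > 0.0);
    if (depthMm == 0)
        return 0.0;
    return baselineMm * focalPx / depthMm;
}

// Inverse relation: depth [mm] from disparity [px]; non-positive disparity
// is treated as unknown and yields depth 0.
double depthFromDisparity(double disparityPx, double baselineMm, double focalPx)
{
    assert(baselineMm > 0.0 && focalPx > 0.0);
    if (disparityPx <= 0.0)
        return 0.0;
    return baselineMm * focalPx / disparityPx;
}
```

With a hypothetical 50 mm baseline and a 500 px focal length, an object 1 m away has a disparity of 25 px and one 2 m away has 12.5 px. Disparity falls off inversely with distance, which is one reason depth precision drops for far objects.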

Reading from video streams

After the VideoCapture objects have been set up, you can start reading frames. OpenCV’s VideoCapture provides a synchronous (blocking) API, so while one stream is being read, the other one cannot be. Since the two video sources have to be read simultaneously, we create a separate “reader” thread for each of them. The example implementation below gets frames from each sensor in its own thread and stores them in a list along with their timestamps:

```cpp
// File scope: headers and the type shared by all threads
#include <atomic>
#include <condition_variable>
#include <iostream>
#include <list>
#include <mutex>
#include <thread>
#include <opencv2/core.hpp>
#include <opencv2/videoio.hpp>

using namespace cv;
using std::cerr;

// Stores a frame along with its capture timestamp (in ticks)
struct Frame
{
    int64 timestamp;
    Mat frame;
};

// Inside main(), after depthStream and colorStream have been set up:

// Create two lists to store frames
std::list<Frame> depthFrames, colorFrames;
const std::size_t maxFrames = 64;

// Synchronization objects
std::mutex mtx;
std::condition_variable dataReady;
std::atomic<bool> isFinish;

isFinish = false;

// Start depth reading thread
std::thread depthReader([&]
{
    while (!isFinish)
    {
        // Grab and decode new frame
        if (depthStream.grab())
        {
            Frame f;
            f.timestamp = cv::getTickCount();
            depthStream.retrieve(f.frame, CAP_OPENNI_DEPTH_MAP);
            if (f.frame.empty())
            {
                cerr << "ERROR: Failed to decode frame from depth stream\n";
                break;
            }

            {
                // Keep at most maxFrames frames: drop the oldest one when full
                std::lock_guard<std::mutex> lk(mtx);
                if (depthFrames.size() >= maxFrames)
                    depthFrames.pop_front();
                depthFrames.push_back(f);
            }
            dataReady.notify_one();
        }
    }
});

// Start color reading thread
std::thread colorReader([&]
{
    while (!isFinish)
    {
        // Grab and decode new frame
        if (colorStream.grab())
        {
            Frame f;
            f.timestamp = cv::getTickCount();
            colorStream.retrieve(f.frame);
            if (f.frame.empty())
            {
                cerr << "ERROR: Failed to decode frame from color stream\n";
                break;
            }

            {
                // Keep at most maxFrames frames: drop the oldest one when full
                std::lock_guard<std::mutex> lk(mtx);
                if (colorFrames.size() >= maxFrames)
                    colorFrames.pop_front();
                colorFrames.push_back(f);
            }
            dataReady.notify_one();
        }
    }
});
```

VideoCapture can retrieve the following data:

- Data from the depth generator:

  - cv::CAP_OPENNI_DEPTH_MAP – depth values in mm (CV_16UC1)

  - cv::CAP_OPENNI_POINT_CLOUD_MAP – XYZ in meters (CV_32FC3)

  - cv::CAP_OPENNI_DISPARITY_MAP – disparity in pixels (CV_8UC1)

  - cv::CAP_OPENNI_DISPARITY_MAP_32F – disparity in pixels (CV_32FC1)

  - cv::CAP_OPENNI_VALID_DEPTH_MASK – mask of valid pixels (not occluded, not shaded, etc.) (CV_8UC1)

- Data from the color sensor: a regular BGR image (CV_8UC3).
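To see how two of these products relate to the raw depth map, here is a library-free sketch that works on a depth frame stored row-major as 16-bit millimetre values (the CV_16UC1 layout above). The function names are hypothetical, and treating 0 as "no valid measurement" is an assumption about the sensor’s convention.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// 8-bit validity mask: 255 where the depth is valid, 0 otherwise.
// Assumption: a depth value of 0 marks a pixel with no measurement.
std::vector<std::uint8_t> validDepthMask(const std::vector<std::uint16_t>& depthMm)
{
    std::vector<std::uint8_t> mask(depthMm.size());
    for (std::size_t i = 0; i < depthMm.size(); ++i)
        mask[i] = static_cast<std::uint8_t>(depthMm[i] != 0 ? 255 : 0);
    return mask;
}

// Depth converted from millimetres to metres; invalid (0) pixels stay 0.
std::vector<float> depthToMeters(const std::vector<std::uint16_t>& depthMm)
{
    std::vector<float> meters(depthMm.size());
    for (std::size_t i = 0; i < depthMm.size(); ++i)
        meters[i] = depthMm[i] * 0.001f;
    return meters;
}
```

With OpenCV itself, the comparison depth != 0 on a cv::Mat already yields an 8-bit 0/255 mask, and depth.convertTo(meters, CV_32F, 0.001) performs the unit conversion.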

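When new data is available, each “reader” thread notifies the frame-pairing thread through the dataReady condition variable. Frames are stored in ordered lists: the first frame in a list is the earliest captured, and the last frame is the most recently captured. When a list already holds maxFrames frames, its oldest frame is dropped to make room for the new one.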
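The reader threads above follow a common bounded producer/consumer pattern. As a hedged, library-free sketch (BoundedQueue is a hypothetical class, not part of OpenCV or of this post’s code), the same behaviour can be packaged in one place: push() drops the oldest item once capacity is reached, waitPop() blocks on a condition variable, and close() adds a shutdown path. The shutdown part is an addition: it sets the flag under the mutex and calls notify_all(), because a thread sleeping in wait() does not re-check a flag such as isFinish until it is notified.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <list>
#include <mutex>
#include <utility>

// Hypothetical bounded, thread-safe FIFO.
template <typename T>
class BoundedQueue
{
public:
    explicit BoundedQueue(std::size_t capacity) : capacity_(capacity)
    {
        assert(capacity > 0);
    }

    // Adds an item, dropping the oldest one when the queue is full.
    void push(T item)
    {
        {
            std::lock_guard<std::mutex> lk(mtx_);
            if (items_.size() >= capacity_)
                items_.pop_front();
            items_.push_back(std::move(item));
        }
        ready_.notify_one();
    }

    // Blocks until an item is available or the queue is closed.
    // Returns false only when the queue is closed and empty.
    bool waitPop(T& out)
    {
        std::unique_lock<std::mutex> lk(mtx_);
        ready_.wait(lk, [this] { return closed_ || !items_.empty(); });
        if (items_.empty())
            return false;
        out = std::move(items_.front());
        items_.pop_front();
        return true;
    }

    // Sets the closed flag under the mutex, then wakes every waiting consumer,
    // so no consumer can miss the wake-up between checking and waiting.
    void close()
    {
        {
            std::lock_guard<std::mutex> lk(mtx_);
            closed_ = true;
        }
        ready_.notify_all();
    }

    std::size_t size() const
    {
        std::lock_guard<std::mutex> lk(mtx_);
        return items_.size();
    }

private:
    mutable std::mutex mtx_;
    std::condition_variable ready_;
    std::list<T> items_;
    std::size_t capacity_;
    bool closed_ = false;
};
```

The same close-then-notify idea applies to the threads in this post: after setting isFinish, calling notify_all() on dataReady and dcReady wakes any thread blocked in wait(), so every thread can be joined cleanly.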

Video streams synchronization

As depth and color frames are read from independent sources two video streams may become out of sync even when both streams are set up for the same frame rate. A post-synchronization procedure can be applied to the streams to combine depth and color frames into pairs. The sample code below demonstrates this procedure:

```cpp
std::list<std::pair<Frame, Frame>> dcFrames;
std::mutex dcFramesMtx;
std::condition_variable dcReady;

// Pair depth and color frames
std::thread framePairing([&]
{
    // Half of the frame period is the maximum time difference between paired
    // frames. Timestamps come from cv::getTickCount(), so the period is
    // converted to ticks with cv::getTickFrequency().
    double fps = colorStream.get(CAP_PROP_FPS);
    if (fps <= 0)
        fps = 30; // assumption: fall back to the nominal 30 FPS if none is reported
    const int64 maxTdiff = static_cast<int64>(cv::getTickFrequency() / (2 * fps));

    while (!isFinish)
    {
        std::unique_lock<std::mutex> lk(mtx);
        while (!isFinish && (depthFrames.empty() || colorFrames.empty()))
            dataReady.wait(lk);

        while (!depthFrames.empty() && !colorFrames.empty())
        {
            // Get the oldest frame from each list
            Frame depthFrame = depthFrames.front();
            int64 depthT = depthFrame.timestamp;
            Frame colorFrame = colorFrames.front();
            int64 colorT = colorFrame.timestamp;

            if (depthT + maxTdiff < colorT)
            {
                // The depth frame is too old to match any color frame
                depthFrames.pop_front();
            }
            else if (colorT + maxTdiff < depthT)
            {
                // The color frame is too old to match any depth frame
                colorFrames.pop_front();
            }
            else
            {
                {
                    std::lock_guard<std::mutex> dcLk(dcFramesMtx);
                    dcFrames.push_back(std::make_pair(depthFrame, colorFrame));
                }
                dcReady.notify_one();
                depthFrames.pop_front();
                colorFrames.pop_front();
            }
        }
    }
});
```

In the code snippet above, the pairing thread blocks until both frame lists contain at least one frame. It then compares the timestamps of the oldest depth frame and the oldest color frame: if they differ by more than half a frame period, the older of the two frames is dropped. If the timestamps are close enough, the two frames are placed into the dcFrames list as a pair, and the processing thread is notified that new data is available.
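To try the pairing rule without a camera, here is a library-free sketch of the same logic written as a plain function over two timestamp-ordered lists. Stamped, maxTimeDiff, and pairFrames are hypothetical names, and an int id stands in for the cv::Mat payload. maxTimeDiff computes half a frame period in ticks; when timestamps come from cv::getTickCount(), ticksPerSecond would be cv::getTickFrequency().

```cpp
#include <cassert>
#include <cstdint>
#include <list>
#include <utility>
#include <vector>

// Stand-in for a timestamped frame; 'id' replaces the image payload.
struct Stamped
{
    std::int64_t timestamp; // in ticks
    int id;
};

// Half of the frame period, expressed in ticks.
std::int64_t maxTimeDiff(double ticksPerSecond, double fps)
{
    assert(ticksPerSecond > 0.0 && fps > 0.0);
    return static_cast<std::int64_t>(ticksPerSecond / (2.0 * fps));
}

// Pairs the heads of two time-ordered lists. A head more than maxTdiff older
// than the other head is dropped: later frames in the other list are even
// newer, so it can never be matched. Returns the paired ids.
std::vector<std::pair<int, int>> pairFrames(std::list<Stamped>& depth,
                                            std::list<Stamped>& color,
                                            std::int64_t maxTdiff)
{
    std::vector<std::pair<int, int>> pairs;
    while (!depth.empty() && !color.empty())
    {
        const std::int64_t depthT = depth.front().timestamp;
        const std::int64_t colorT = color.front().timestamp;
        if (depthT + maxTdiff < colorT)
            depth.pop_front();
        else if (colorT + maxTdiff < depthT)
            color.pop_front();
        else
        {
            pairs.emplace_back(depth.front().id, color.front().id);
            depth.pop_front();
            color.pop_front();
        }
    }
    return pairs;
}
```

With millisecond timestamps at 30 FPS, the tolerance is 16 ticks. Depth frames at 0, 33, and 66 ms and color frames at 5 and 60 ms produce the pairs (0 ms, 5 ms) and (66 ms, 60 ms), while the depth frame at 33 ms is dropped because no color frame lies within 16 ms of it.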

Video processing

The processing thread function may look like this:

```cpp
// Processing thread
std::thread process([&]
{
    while (!isFinish)
    {
        std::unique_lock<std::mutex> lk(dcFramesMtx);
        while (!isFinish && dcFrames.empty())
            dcReady.wait(lk);

        // The lock is held whenever dcFrames is checked or modified
        while (!dcFrames.empty())
        {
            Frame depthFrame = dcFrames.front().first;
            Frame colorFrame = dcFrames.front().second;
            dcFrames.pop_front();

            // Release the lock while processing so the pairing thread can keep adding pairs
            lk.unlock();

            // >>> Do something here <<<

            // Re-acquire the lock before checking dcFrames again
            lk.lock();
        }
    }
});
```

In the processing thread, you have two frames: one containing color information and the other containing depth information. Insert your code at the >>> Do something here <<< mark; at that point, the data is ready to be processed with OpenCV or your own algorithm.
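As one hypothetical example of what could go at that mark, the sketch below keeps only the color pixels whose depth lies within a chosen range, the kind of separation the plant-versus-painted-wall example in the introduction relies on. keepDepthRange is an illustrative name, and the sketch assumes that the depth and color frames are pixel-aligned and the same size. Since the two frames come from different sensors here, that alignment is only approximate. Color is assumed to be packed BGR, and a depth of 0 is assumed to mean "no measurement".

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Keeps the BGR pixels whose depth is in [nearMm, farMm] and blacks out the
// rest, including pixels without a valid depth measurement (depth 0).
std::vector<std::uint8_t> keepDepthRange(const std::vector<std::uint16_t>& depthMm,
                                         const std::vector<std::uint8_t>& bgr,
                                         std::uint16_t nearMm, std::uint16_t farMm)
{
    assert(bgr.size() == depthMm.size() * 3);
    std::vector<std::uint8_t> out(bgr.size(), 0);
    for (std::size_t i = 0; i < depthMm.size(); ++i)
    {
        const std::uint16_t d = depthMm[i];
        if (d != 0 && d >= nearMm && d <= farMm)
            for (std::size_t c = 0; c < 3; ++c)
                out[3 * i + c] = bgr[3 * i + c];
    }
    return out;
}
```

Inside the processing thread, the OpenCV equivalent would be cv::inRange on depthFrame.frame to build an 8-bit mask, followed by colorFrame.frame.copyTo(result, mask).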

The complete implementation can be found here.

Summary

3D cameras play an important role in modern industry. They give us a great opportunity to solve computer vision tasks that are hard or impossible to solve with 2D data alone. In this post, we learned how to work with 3D cameras in OpenCV using the OpenNI2 interface and how to solve the synchronization issues that may arise.
