In the complex world of modern medicine, two forms of data reign supreme: the visual and the textual. On one side is a deluge of images: X-rays, MRIs, and pathology slides. On the other is an ocean of text: clinical notes, patient histories, and research papers. Until now, the bridge between these two worlds has existed only within the brilliant but burdened minds of skilled clinicians.

What if we could digitize that bridge? What if an AI could not only see the subtle shadow on a lung scan but also understand its clinical significance, correlate it with the patient’s notes, and articulate a detailed analysis? This is no longer science fiction. This is the reality being built today with Vision Language Models (VLMs), a transformative technology poised to become the new digital heartbeat of healthcare.

This deep dive will explore the foundational tasks VLMs perform in medicine, examine their specific applications across healthcare, and address the critical challenges on the path to their adoption.

Key VLM Capabilities in Medicine

VLMs are a versatile platform capable of performing a symphony of interconnected functions that mimic and augment the cognitive processes of a medical expert.

- Classification and Zero-Shot Classification: Beyond basic classification (e.g., “pneumonia present”), VLMs provide context-aware analysis. A VLM can read a note such as “65-year-old male, heavy smoker,” and analyze the corresponding X-ray with a higher index of suspicion for smoking-related disease. Its real power is zero-shot classification. Because it learns to match images with textual descriptions, it can score an image against a condition described only in words, potentially recognizing a rare disease for which it was never given labeled example images. A conventional classifier, limited to the fixed set of labels it was trained on, cannot do this.

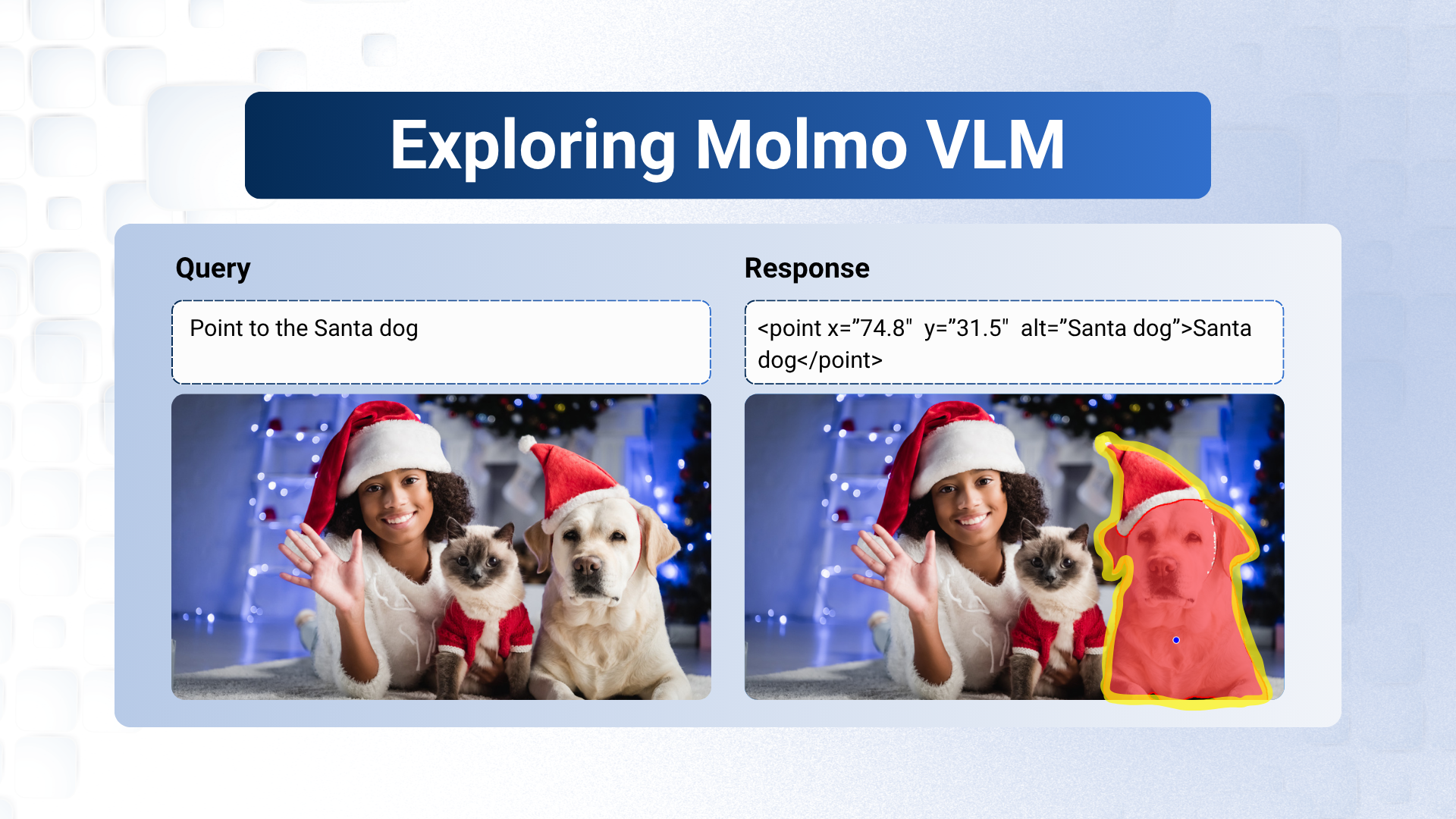
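In CLIP-style models, zero-shot classification is commonly implemented by embedding the image and a set of candidate label descriptions into a shared vector space, then picking the description closest to the image. The sketch below assumes that setup. The tiny four-dimensional embeddings, the prompt wording, and the `temperature` value are hypothetical stand-ins for what a real VLM's image and text encoders would produce.

```python
import math

# Hypothetical embeddings. In a real system these would come from the VLM's
# image encoder and text encoder and would have hundreds of dimensions.
IMAGE_EMBEDDING = [0.9, 0.1, 0.3, 0.2]

LABEL_PROMPTS = {
    "an X-ray showing pneumonia": [0.8, 0.2, 0.4, 0.1],
    "an X-ray showing a pneumothorax": [0.1, 0.9, 0.2, 0.3],
    "a normal chest X-ray": [0.2, 0.1, 0.9, 0.4],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(
    image_emb: list[float],
    label_prompts: dict[str, list[float]],
    temperature: float = 0.07,  # hypothetical sharpness setting
) -> dict[str, float]:
    """Score the image against every text prompt and softmax the scores."""
    labels = list(label_prompts)
    logits = [cosine_similarity(image_emb, label_prompts[label]) / temperature for label in labels]
    max_logit = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - max_logit) for z in logits]
    total = sum(exps)
    return {label: e / total for label, e in zip(labels, exps)}


probs = zero_shot_classify(IMAGE_EMBEDDING, LABEL_PROMPTS)
best = max(probs, key=probs.get)
print(f"{best}: {probs[best]:.3f}")
```

No label set is baked into this classifier. To screen for another condition, you add another sentence (and its text embedding) to `LABEL_PROMPTS`. How trustworthy that score is for a genuinely rare disease depends on how well the model's image and text spaces are aligned for it.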

- Segmentation: This task involves precisely outlining anatomical structures such as organs or tumors, which is critical for surgical planning and radiation therapy. VLMs can help automate it: a surgeon could simply request, “Outline the primary tumor in the left lobe of the liver,” and receive a candidate 3D segmentation in seconds, ready for expert review.

- Detection: Acting as a tireless second pair of eyes, a VLM can scan a high-resolution image and localize each instance of a specific abnormality. This could mean flagging tiny pulmonary nodules on a CT scan or marking mitotic figures across a pathology slide, helping clinicians diagnose faster and miss fewer findings.

- Retrieval: VLMs enable a “Google for medical images.” A clinician facing a complex case can upload an image and ask, “Find similar cases in patients under 30 with a history of osteoporosis and show their treatment outcomes.” This ability to retrieve not just similar images but also their associated clinical data provides invaluable decision support.

- Visual Question Answering (VQA): VQA turns static images into an interactive dialogue. A doctor can upload an MRI and have a conversation with it:

  - Clinician: “Is there evidence of an ACL tear?”

  - VLM: “Yes, a high-grade tear is visible at the femoral insertion point.”

  - Clinician: “Compare the joint effusion to the scan from six months ago.”

  - VLM: “The effusion has decreased in volume by approximately 20%.”

- Image Captioning & Automated Report Generation: At a basic level, VLMs can write a concise caption for an image. Their more advanced capability is generating complete, structured radiology reports. By analyzing a scan together with the patient’s history, a VLM can draft a preliminary report with findings and impressions. The radiologist then reviews, verifies, and finalizes it, which can substantially reduce their documentation burden.
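The retrieval query in the Retrieval item above combines two steps: filter the archive on structured clinical data (age, history), then rank the remaining cases by how similar their images are to the query image. Here is a minimal sketch, assuming each archived case already stores an image embedding produced by a VLM. The `CaseRecord` fields, the toy three-dimensional embeddings, and the case records themselves are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class CaseRecord:
    case_id: str
    embedding: list[float]  # hypothetical image embedding from a VLM encoder
    age: int
    history: set[str]
    outcome: str


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def find_similar_cases(
    query_embedding: list[float],
    archive: list[CaseRecord],
    max_age: int,
    required_history: set[str],
    top_k: int = 5,
) -> list[CaseRecord]:
    """Keep cases that match the clinical filters, then rank them by image similarity."""
    candidates = [
        r for r in archive
        if r.age < max_age and required_history <= r.history  # set subset check
    ]
    candidates.sort(key=lambda r: cosine_similarity(query_embedding, r.embedding), reverse=True)
    return candidates[:top_k]


# Toy archive: made-up records for illustration only.
ARCHIVE = [
    CaseRecord("case-A", [0.9, 0.1, 0.2], 27, {"osteoporosis"}, "conservative management"),
    CaseRecord("case-B", [0.1, 0.9, 0.3], 24, {"osteoporosis", "asthma"}, "surgical fixation"),
    CaseRecord("case-C", [0.85, 0.15, 0.2], 58, {"osteoporosis"}, "surgical fixation"),
    CaseRecord("case-D", [0.8, 0.2, 0.3], 22, {"asthma"}, "conservative management"),
]

# "Find similar cases in patients under 30 with a history of osteoporosis."
results = find_similar_cases([0.85, 0.15, 0.2], ARCHIVE, max_age=30, required_history={"osteoporosis"})
for case in results:
    print(case.case_id, case.outcome)
```

In this toy archive, `case-C` has an image identical to the query but is excluded by the age filter. That is why combining metadata with similarity matters. At real archive scale, the linear scan would typically be replaced by an approximate nearest-neighbor index.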

Transforming Healthcare Domains with VLM Applications

These core capabilities are being applied across numerous medical fields to create tangible, real-world solutions.

- Revolutionizing Medical Imaging (X-ray, CT, MRI, fundus):

  - In emergency rooms, VLMs can help triage X-rays and CT scans, flagging critical findings such as brain bleeds for immediate attention.

  - For complex MRI scans, they can segment brain tumors or quantify cartilage loss in a joint, tracking disease progression automatically across successive scans.

  - In ophthalmology, they analyze retinal fundus images to screen for diabetic retinopathy or glaucoma, enabling the early intervention that can prevent blindness.

- Real-time Surgical Assistance: Inside the operating room, VLMs can analyze live video from endoscopic cameras and drive augmented reality overlays on the surgeon’s monitor. These overlays can highlight critical structures such as nerves to avoid, or outline tumor margins to help ensure complete removal.

- Automated Radiology Reporting: Integrated directly into radiology workflows, VLMs can shorten reporting turnaround times by drafting the descriptive parts of a report. This lets radiologists focus their expertise on complex analysis rather than dictation.

- Remote Patient Monitoring & Telemedicine Enhancement: A VLM can analyze a photo of a post-surgical wound sent from a patient’s home and check for signs of infection. During a telemedicine call, it can help a GP assess a skin condition from a patient-submitted photo, providing a preliminary differential diagnosis.

- Virtual Health Assistants: The next generation of healthcare chatbots is likely to be powered by VLMs. Patients will be able not only to describe their symptoms but also to show them. An AI assistant could analyze a photo of a swollen ankle, ask targeted questions, and then recommend the appropriate level of care, from home treatment to an urgent clinic visit.
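The emergency-room triage described in the Medical Imaging item above amounts to reordering the reading queue. Studies the model flags as critical (for example, a suspected brain bleed) move to the front, and everything else keeps its arrival order. Below is a minimal sketch using Python's `heapq`. The three flag levels and the study IDs are hypothetical; in practice, the flag would come from the VLM's output.

```python
import heapq
from dataclasses import dataclass, field

# Hypothetical flag levels: a lower number means the study is read sooner.
PRIORITY = {"critical": 0, "urgent": 1, "routine": 2}


@dataclass(order=True)
class WorklistItem:
    priority: int
    arrival: int  # tie-breaker: earlier studies first within the same priority
    study_id: str = field(compare=False)
    vlm_flag: str = field(compare=False)


class TriageWorklist:
    """Radiology reading queue reordered by VLM flags. No study is ever dropped."""

    def __init__(self) -> None:
        self._heap: list[WorklistItem] = []
        self._arrivals = 0

    def add_study(self, study_id: str, vlm_flag: str) -> None:
        item = WorklistItem(PRIORITY[vlm_flag], self._arrivals, study_id, vlm_flag)
        self._arrivals += 1
        heapq.heappush(self._heap, item)

    def next_study(self) -> WorklistItem:
        return heapq.heappop(self._heap)

    def __len__(self) -> int:
        return len(self._heap)


worklist = TriageWorklist()
worklist.add_study("CT-101", "routine")
worklist.add_study("CT-102", "critical")  # e.g. suspected intracranial hemorrhage
worklist.add_study("XR-103", "urgent")
worklist.add_study("CT-104", "critical")

reading_order = []
while worklist:
    reading_order.append(worklist.next_study().study_id)
print(reading_order)
```

The arrival counter acts as a tie-breaker, which keeps the queue first-come, first-served within each flag level. The model only changes the order in which studies are read; a radiologist still reads every one.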

Challenges and Ethical Considerations

The promise of VLMs is immense, but so is the responsibility. For successful and equitable integration, we must address critical challenges:

- Data Privacy and Security: Patient data is sacrosanct. Techniques such as federated learning and robust anonymization are non-negotiable. In federated learning, the model is trained where the data lives, so patient records never leave the hospital and only model updates are shared.

- Algorithmic Bias: An AI is only as good as its training data. If a dataset lacks diversity, the VLM could be less accurate for underrepresented populations, widening existing health disparities.

- The ‘Black Box’ Problem: For clinicians to trust an AI, they need to understand its reasoning. Developing “Explainable AI” (XAI) methods that show why a model made a given recommendation is crucial for safe adoption. One such method highlights the image regions that drove the recommendation.

- Regulatory Hurdles and the Human in the Loop: Before clinical use, these models must undergo rigorous validation. Where they qualify as medical devices, they also need regulatory clearance or approval (e.g., from the U.S. FDA). Crucially, they must be designed as tools to augment, not replace, the clinician. The final decision and responsibility must always rest with the human expert.
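The federated learning mentioned under Data Privacy and Security can be illustrated with federated averaging (FedAvg), one widely used aggregation scheme. Each hospital trains a copy of the model on its own data and sends back only the updated parameters and its sample count. A coordinating server then averages those parameters, weighted by sample count. The sketch below is a toy under stated assumptions: a two-parameter linear model stands in for a VLM, and the three hospitals and their synthetic data points are hypothetical. Real deployments typically add further safeguards, such as secure aggregation, because model updates alone can still leak information.

```python
# Federated averaging (FedAvg) on a toy linear model y = w * x + b.
# Hospitals, data, learning rate, and round counts are hypothetical.

Point = tuple[float, float]
Weights = tuple[float, float]

# Synthetic (x, y) pairs held privately by three hypothetical hospitals.
HOSPITAL_DATA: dict[str, list[Point]] = {
    "hospital_a": [(1.0, 3.1), (2.0, 5.0), (3.0, 7.2)],
    "hospital_b": [(4.0, 8.9), (5.0, 11.1)],
    "hospital_c": [(6.0, 13.0), (7.0, 15.2), (8.0, 16.9), (9.0, 19.1)],
}


def local_update(weights: Weights, data: list[Point], lr: float = 0.01, epochs: int = 5) -> tuple[Weights, int]:
    """Gradient descent on one hospital's data; only weights and a count leave the site."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n


def federated_average(updates: list[tuple[Weights, int]]) -> Weights:
    """Server step: average the returned weights, weighted by each site's sample count."""
    total = sum(n for _, n in updates)
    w = sum(weights[0] * n for weights, n in updates) / total
    b = sum(weights[1] * n for weights, n in updates) / total
    return (w, b)


def mse(weights: Weights, data: list[Point]) -> float:
    w, b = weights
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


global_weights: Weights = (0.0, 0.0)
# Pooled here ONLY to report the loss in this toy; a real deployment would not do this.
all_points = [p for data in HOSPITAL_DATA.values() for p in data]
initial_loss = mse(global_weights, all_points)

for _ in range(50):  # communication rounds
    updates = [local_update(global_weights, data) for data in HOSPITAL_DATA.values()]
    global_weights = federated_average(updates)

final_loss = mse(global_weights, all_points)
print(f"w={global_weights[0]:.2f} b={global_weights[1]:.2f} loss {initial_loss:.1f} -> {final_loss:.3f}")
```

Weighting by sample count means a site with four patients influences the global model more than a site with two. This mirrors what would happen if the data could have been pooled.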

Conclusion

Vision Language Models will not replace doctors. They will empower them. By taking on the burdensome tasks of data analysis and documentation, they will free clinicians to focus on what matters most: complex problem-solving, patient communication, and compassionate care. By bridging sight and language, VLMs are poised to become an indispensable co-pilot in the cockpit of modern medicine, charting a course toward a future that is more precise, efficient, and profoundly human.
