We are thrilled to announce the results of the OpenCV Spatial AI Competition, sponsored by Intel. We believe the winners are not just talented AI engineers but also trailblazers who are leading the way in making the world a better place. Congratulations to all of them!

Selecting the best of the best was not easy, so we decided on two winners each in the Gold, Silver, and Bronze categories.

Our Six Grand Prize Winners

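Vision System for visually impaired

$3,000

Universal Hand Control

$3,000

Parcel Classification & Dimensioning

$2,000

Real Time Perception for Autonomous Forklifts

$2,000

At Home Workout Assistant

$1,000

Automatic Lawn Mower Navigation

$1,000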

We were overwhelmed by the number of submissions from artificial intelligence and machine learning experts. We are glad to introduce the finest projects submitted by the participants below:

Vision System for visually impaired

Team: Jagadish Mahendran

Mission

Here the focus is to provide a reliable smart perception system to assist blind and visually impaired people to safely ambulate in a variety of indoor and outdoor environments using an OAK-D sensor.

Solution

In this project, the team has developed a comprehensive vision system for visually impaired people that supports indoor and outdoor navigation along with scene understanding. The system is simple, fashionable, and not noticeable as an assistive device. Common challenges such as traffic signs, hanging obstacles, crosswalk detection, moving obstacles, elevation changes (e.g., staircase detection, curb entry/exits), and localization are addressed using advanced perception tasks that can run on low computing power. A convenient, user-friendly voice interface allows users to control and interact with the system. After hours of testing in downtown Monrovia, California, and neighboring areas, the team is confident that this project addresses common challenges faced by visually impaired people.

"I would like to thank Daniel T. Barry for his help, support and advice throughout the project, Breean Cox for her continuous valuable inputs, labelling and educating me on challenges faced by visually impaired people, and my wife Anita Nivedha for her encouragement and walking tirelessly with me for collecting the dataset and helping with testing."

Notes from Author

Motivation for the project

Back in 2013, as I started my Master’s in Artificial Intelligence, the idea of developing a visual assistance system first occurred to me. I even shared a proposal with a professor. At the time, smart sensors were not feasible to use, and deep learning techniques and edge AI were not yet mainstream in computer vision, which made it difficult to make any progress on this project. I have been an AI Engineer for the past 5 years. Earlier this year, when I met my visually impaired friend Breean Cox, I was struck by the irony that while I have been teaching robots to see, there are many people who cannot see and need help. This further motivated me to build the visual assistance system. The timing of the OpenCV Spatial AI competition could not have been better. It was the perfect channel for me to build this system and bring this idea to life.

Note: We are working on an updated research paper that will give more insight into our mission and the solution we created. We will publish it here on the blog very soon.

Universal Hand Control

Team: Pierre Mangeot

Mission

Solution

Today’s technologies can estimate hand pose (with neural networks) or measure the position of the hand in space (with depth cameras). A device like the OAK-D even makes it possible to combine the two techniques in one device. Using a hand to control connected devices (smart speakers, smart plugs, TVs, … an ever-growing market) or to interact with applications running on computers without touching a keyboard or a mouse becomes possible. More than that, with Universal Hand Control, it becomes straightforward.

Universal Hand Control is a framework (dedicated hardware + Python library) that makes it easy to integrate the "hand controlling" capability into programs.

Currently, the OAK-D is used as an RGB-D sensor, but the models run on the host. Once the Gen2 pipeline is available, the models will be able to run on the device itself. Details and status of the Gen2 pipeline can be followed via the GitHub link below.

We are grateful for PINTO’s Model Zoo repository, which helped us tune the results of the trained models efficiently while developing this project.

Link to Model Zoo: https://github.com/PINTO0309/PINTO_model_zoo

Parcel Classification & Dimensioning

Team: Abhijeet Bhatikar, Daphne Tsatsoulis, Nils Heyman-Dewitte, William Diggin

Mission

- Many cargo companies do not measure their cargo accurately at scale using the newest technology. At best, they describe their cargo by width, height, and depth. In many cases, a manual measuring tape is used to make these guesstimates.

- Even when they do measure their cargo at a more granular level, they almost always fit cuboids around irregularly shaped objects. For example, a cylindrical barrel’s dimensions are recorded as those of the smallest cuboid that encloses the barrel (width x length x height).
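To make the barrel example concrete, here is a small worked calculation. It is only an illustration, with hypothetical barrel dimensions and a hypothetical function name, and it is not taken from the team's code. It compares the volume of a cylinder with the volume of the smallest upright cuboid that encloses it.

```python
import math


def cuboid_overestimate(radius_m: float, height_m: float) -> float:
    """Fraction by which the enclosing cuboid's volume exceeds the cylinder's."""
    cylinder_volume = math.pi * radius_m ** 2 * height_m
    # The smallest upright cuboid around the cylinder has a 2r x 2r base.
    cuboid_volume = (2 * radius_m) * (2 * radius_m) * height_m
    return cuboid_volume / cylinder_volume - 1.0


# Hypothetical barrel: 0.3 m radius, 0.9 m tall.
ratio = cuboid_overestimate(radius_m=0.3, height_m=0.9)
print(f"The cuboid overstates the barrel volume by {ratio:.1%}")
```

The ratio works out to 4/π − 1, roughly 27%, whatever the barrel's size, which gives a sense of how much container space a cuboid-only description can waste.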

Solution

The team has built an end-to-end proof of concept for measuring and loading packages into cargo containers. This solution leverages the DepthAI USB3 (OAK-D) camera to determine the shape accurately, then calculates the width, length, and height of packages for cargo shipment. All of this can be managed by a software tool (3D CONTAINER STACKING SOFTWARE) that finds the optimal arrangement of a batch of packages given the container’s size.
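As a rough sketch of the dimensioning step, the snippet below computes width, length, and height from a set of 3D points using an axis-aligned bounding box. This is an assumption made for illustration: the post does not describe how the team derives dimensions from the depth data, and the function name and sample points are hypothetical.

```python
from typing import Iterable, Tuple

Point = Tuple[float, float, float]


def bounding_box_dimensions(points: Iterable[Point]) -> Tuple[float, float, float]:
    """Return (width, length, height) of the axis-aligned box enclosing the points."""
    xs, ys, zs = zip(*points)
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))


# Hypothetical points (in metres) sampled from a parcel's surface.
parcel_points = [
    (0.10, 0.20, 0.00),
    (0.50, 0.20, 0.00),
    (0.50, 0.50, 0.00),
    (0.10, 0.50, 0.25),
    (0.30, 0.35, 0.25),
]

width, length, height = bounding_box_dimensions(parcel_points)
print(f"width={width:.2f} m, length={length:.2f} m, height={height:.2f} m")
```

An axis-aligned box is the simplest choice and only fits well when the parcel is lined up with the camera axes. A real system would likely need to segment the parcel from the background and handle rotated or irregular shapes.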

Real Time Perception for Autonomous Forklifts

Team: Kunal Tyagi, Kota Mogami, Francesco Savarese, Bhuvanchandra DV

Mission

A drawback of modern autonomous forklifts is that their technology can fail when they lack a clear view while loading or unloading cargo. Such breakdowns often lead to long waiting periods.

Solution

The team has come up with a solution, Real-Time Perception for Autonomous Forklifts, to address this issue. It enhances the functionality of advanced sensors as well as vision and geo-guidance technology. Many people outside the industry don’t know that autonomous vehicles have already taken on a significant part of the logistics work process. Autonomous forklifts load, unload, and transport goods within the warehouse area, connecting to one another to form flexible conveyor belts. It is therefore imperative to have a solution that improves their overall performance, avoids frequent breakdowns, and lets them operate at full potential.

"Special thanks to Ayush Gaud, Luong Pham, Kousuke Yunoki, and Yu Okamoto for helping with the project."

Notes from Author

At Home Workout Assistant

Team: Daniel Rodrigues Perazzo, Gustavo Camargo Rocha Lima, Natalia Souza Soares, Victor Gouveia de Menezes Lyra

Mission

We observed that, with gyms and public spaces closed this year, doing physical activities while maintaining social distancing became a challenge. Exercising alone, however, can be complicated and sometimes even dangerous because of possible muscle injuries.

Solution

The team has developed a Motion Analysis for Home Gym System (MAHGS) to assist users during at-home workouts. The system estimates the person’s 3D pose and analyzes the skeleton’s movements, returning appropriate feedback to help the user perform each exercise correctly.

The system is composed of two main modules:

- 3D Human Pose Estimation – This module was developed using the neural inference capabilities of the OAK-D and the DepthAI library. It runs on a PC/Raspberry Pi 4 connected to the OAK-D, since the OAK-D does not yet have Wi-Fi or Bluetooth.

- Motion Analysis – This module is implemented using a technique called Ikapp. It runs on a smartphone, which communicates with the pose estimation host over TCP/IP using the ZeroMQ framework.
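To give a feel for what analyzing skeleton movements can involve, here is a minimal sketch that computes the angle at a joint from three 3D keypoints and turns it into feedback. It is not the Ikapp technique the team used. The function names, the 90° target, and the tolerance are all hypothetical choices for illustration.

```python
import math
from typing import Tuple

Point3D = Tuple[float, float, float]


def joint_angle_deg(a: Point3D, joint: Point3D, c: Point3D) -> float:
    """Angle at `joint`, in degrees, between the segments joint->a and joint->c."""
    v1 = [p - q for p, q in zip(a, joint)]
    v2 = [p - q for p, q in zip(c, joint)]
    dot = sum(x * y for x, y in zip(v1, v2))
    norm = math.sqrt(sum(x * x for x in v1)) * math.sqrt(sum(y * y for y in v2))
    if norm == 0.0:
        raise ValueError("keypoints must not coincide with the joint")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


def squat_feedback(hip: Point3D, knee: Point3D, ankle: Point3D,
                   target_deg: float = 90.0, tolerance_deg: float = 15.0) -> str:
    """Hypothetical rule: compare the knee angle with a target squat depth."""
    angle = joint_angle_deg(hip, knee, ankle)
    if angle > target_deg + tolerance_deg:
        return "Go lower"
    if angle < target_deg - tolerance_deg:
        return "Too deep"
    return "Good depth"


# Standing straight: hip, knee, and ankle are collinear, so the knee angle is 180 degrees.
print(squat_feedback((0.0, 1.0, 0.0), (0.0, 0.5, 0.0), (0.0, 0.0, 0.0)))
# Thigh horizontal, shin vertical: the knee angle is 90 degrees.
print(squat_feedback((0.5, 0.5, 0.0), (0.0, 0.5, 0.0), (0.0, 0.0, 0.0)))
```

A single-frame angle check like this ignores timing and the overall trajectory of the movement, which a full motion analysis would need to consider.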

Automatic Lawn Mower Navigation

Team: Jan Lukas Augustin

Mission

- Killing small animals such as hedgehogs.

- Hurting children, cats, dogs, etc.

- Driving into molehills and crashing into “unwired” obstacles such as trees.

Solution

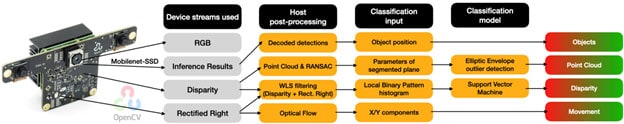

- Neural inference for object detection on Intel Movidius Myriad X and 4K RGB camera.

- Point cloud classification based on the disparity/depth streams from both mono cameras.

- Disparity image classification based on the disparity and rectified right streams.

- Motion estimation using rectified right stream.

- Point Cloud (Elliptic Envelope for Outlier Detection)

- Disparity (Support Vector Machine)

- Objects (MobileNet-SSD for Object Detection)
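The idea behind elliptic-envelope outlier detection can be sketched in a few lines: fit a Gaussian to samples of normal data, then flag points whose Mahalanobis distance from the mean is too large. The version below is a simplified 2D illustration and not the author's implementation. It uses the plain sample covariance, whereas elliptic-envelope implementations typically use a robust covariance estimate. The features (distance ahead, height) and the sample values are hypothetical.

```python
from typing import Sequence, Tuple

Point2D = Tuple[float, float]
Cov2D = Tuple[float, float, float]  # (var_x, cov_xy, var_y)


def fit_gaussian(samples: Sequence[Point2D]) -> Tuple[Point2D, Cov2D]:
    """Estimate the mean and sample covariance of 2D points."""
    n = len(samples)
    mx = sum(x for x, _ in samples) / n
    my = sum(y for _, y in samples) / n
    sxx = sum((x - mx) ** 2 for x, _ in samples) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in samples) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in samples) / (n - 1)
    return (mx, my), (sxx, sxy, syy)


def mahalanobis_sq(point: Point2D, mean: Point2D, cov: Cov2D) -> float:
    """Squared Mahalanobis distance, using the closed-form 2x2 inverse."""
    sxx, sxy, syy = cov
    det = sxx * syy - sxy * sxy
    if det <= 0.0:
        raise ValueError("covariance matrix is not positive definite")
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    return (syy * dx * dx - 2.0 * sxy * dx * dy + sxx * dy * dy) / det


def is_outlier(point: Point2D, mean: Point2D, cov: Cov2D,
               threshold_sq: float = 9.21) -> bool:
    """Flag points outside the ellipse; 9.21 is the 99% chi-square quantile for 2 dof."""
    return mahalanobis_sq(point, mean, cov) > threshold_sq


# Hypothetical (distance ahead, height) samples, in metres, from flat lawn.
lawn = [(0.0, 0.00), (1.0, 0.02), (2.0, -0.01), (3.0, 0.01), (4.0, -0.02), (5.0, 0.00)]
mean, cov = fit_gaussian(lawn)

print(is_outlier((2.5, 0.30), mean, cov))  # a point 30 cm above the lawn
print(is_outlier((2.0, 0.00), mean, cov))  # a point at lawn level
```

With these samples, the point 30 cm above the lawn lies far outside the fitted ellipse, while the point at lawn level lies well inside it.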

Contest Details

We launched this competition in July this year, and it unexpectedly became so popular that we received more than 235 submissions in just a few days. We initially shortlisted 32 winners in the Phase I results, and we also had to extend our deadline: there were so many high-quality submissions that choosing the best of the best became very challenging.

Finally, we congratulate all the winners, as well as every participant who showed interest in the contest. More contests are coming, with the next one in January 2021 offering 10X the rewards. To know more about the forthcoming contests, please click here.

We are thankful to Intel for its technology leadership in edge AI and for sponsoring this competition.

OpenCV
