The OpenCV Spatial AI Contest, where 50 teams are building LEGO-based versions of industrial manufacturing solutions powered by the AI capabilities of the OpenCV AI Kit, is in full swing as teams work on their projects with an eye toward the April 1st build deadline. In this post we'll cover some recent highlights from social media and learn about two of the participating teams: Team B-AROL-O and Team WahWahTron. Let's go!

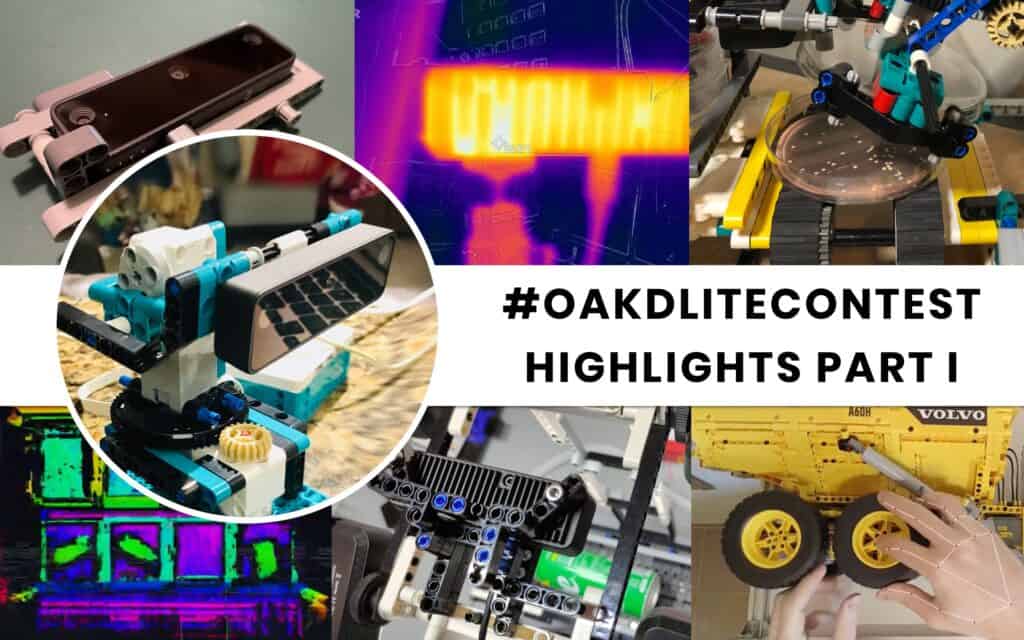

#OAKDLiteContest Hashtag Highlights

- Team Getafe Poka-Yokers took things up a level and ran MediaPipe hand detection on their own assembly of the LEGO kit for their project

- Team B-AROL-O have been keeping detailed weekly notes for their project "ARNEIS: Automated Recognizer, Network-Enabled, Items Sorter"; read the latest update on their website

- EyeCan showed off the first results from their ScaNERF project, using LEGO to create Neural Twins

- Evtek is working on a recyclables classification and sorting system with conveyor belts, and it looks to be coming along great

- Keep an eye on Team Sourdoughers and their BreadBot, which will use YOLOv5 to monitor sourdough bread as it ferments and becomes delicious [Editor's note: Phil loves bread]

Today's (2/17/22) edition of OpenCV Weekly Webinar featured Team LA Inoculum and their Petri dish scanner project, which combines LEGO, OAK-D-Lite and bacterial science. Watch the episode on LinkedIn Live, Twitter, Twitch and YouTube.

Team Profile: Team WahWahTron

What is your project? Briefly describe your problem statement and proposed solution

Our team's project tackles the problem of recycling electronic parts.

Each year brand-new electronic products are launched, be it the latest smartphone, laptop, etc.

Due to planned obsolescence there's an ever-growing amount of e-waste piling up while, ironically, the supply of metals and minerals used to manufacture these devices keeps dwindling.

In the spirit of reusing and recombining LEGO ad infinitum, Team WahWahTron will highlight this critical environmental issue to educate through play.

There are parts recycling solutions out there, most famously Apple's Daisy; however, it is a brand-specific solution. Our project is a study in how a generic electronic parts salvage system could be built for real-world integration using small, affordable and scalable components such as the OAK-D-Lite. The proposed solution involves building a system that attempts to take apart a LEGO assembly (representing a discarded electronic product) and place each salvageable component in its respective sorted container for reuse. OAK-D-Lite's fast inference will be used to classify the LEGO parts, and the depth information will be used to locate each part in 3D.
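As a rough illustration of how depth turns a 2D detection into a 3D location, here is a minimal Python sketch of standard pinhole back-projection. It assumes you already have the centre (u, v) of a part's bounding box in pixels, a depth reading Z in millimetres aligned to that image, and the camera intrinsics (fx, fy, cx, cy). The `Intrinsics` class, the `deproject` function and all numeric values are hypothetical, not taken from the team's code; on a real device the intrinsics would presumably come from its stored calibration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera intrinsics in pixels (example values are hypothetical)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def deproject(u: float, v: float, depth_mm: float, k: Intrinsics) -> tuple[float, float, float]:
    """Back-project pixel (u, v) with depth Z into a camera-frame point (X, Y, Z) in mm.

    X = (u - cx) * Z / fx,  Y = (v - cy) * Z / fy
    """
    if depth_mm <= 0:
        # A zero depth usually means the stereo matcher found no valid disparity.
        raise ValueError("depth must be positive")
    x = (u - k.cx) * depth_mm / k.fx
    y = (v - k.cy) * depth_mm / k.fy
    return (x, y, depth_mm)


if __name__ == "__main__":
    k = Intrinsics(fx=450.0, fy=450.0, cx=320.0, cy=240.0)
    # Centre of a detected part's bounding box plus a (hypothetical) depth reading.
    print(deproject(410.0, 195.0, 300.0, k))  # (60.0, -30.0, 300.0)
```

Taking the median depth over the bounding box, rather than a single pixel, is a common way to reduce stereo noise before calling a function like this, though that choice is also an assumption here.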

The crane arm with a motorised gripper and disassembly mechanism will be made using the M.V.P components of a LEGO Mindstorms Robot Inventor Kit. (The part’s 3D position will be converted from the camera’s coordinate system to a global coordinate system to drive the arm’s end effector.)
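The parenthetical step above, converting a camera-frame point into the arm's global frame, is a rigid transform p_world = R·p_cam + t. Here is a minimal sketch, assuming the rotation R (the camera axes expressed in world coordinates) and the translation t (the camera position in the world frame) are already known from an extrinsic calibration, for example by running OpenCV's `cv2.solvePnP` on a known target and inverting the resulting pose. The overhead mounting and its numbers are made up for illustration.

```python
Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]


def camera_to_world(p_cam: Vec3, R: Mat3, t: Vec3) -> Vec3:
    """Rigid transform p_world = R @ p_cam + t.

    R's columns are the camera's x, y, z axes expressed in the world frame;
    t is the camera origin in world coordinates (same units as p_cam).
    """
    return tuple(
        sum(R[i][j] * p_cam[j] for j in range(3)) + t[i]
        for i in range(3)
    )


# Hypothetical overhead mount: camera 320 mm above the table, looking straight down.
# Image x runs along world +X, image y along world -Y, optical axis along world -Z.
R_OVERHEAD: Mat3 = ((1.0, 0.0, 0.0), (0.0, -1.0, 0.0), (0.0, 0.0, -1.0))
T_OVERHEAD: Vec3 = (100.0, 50.0, 320.0)

if __name__ == "__main__":
    # A part seen 300 mm in front of the camera, slightly right of and above centre.
    print(camera_to_world((60.0, -30.0, 300.0), R_OVERHEAD, T_OVERHEAD))  # (160.0, 80.0, 20.0)
```

Keeping R and t as data, rather than hard-coding the axis flips, means re-mounting the camera only requires a recalibration, not a code change.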

What is your team origin story? How did you get together?

Jessica and I met in the nerdiest of circumstances: at a Machine Learning for Artists workshop in Belgrade. We immediately started collaborating on projects. One of these projects was for the puppet theatre in Bucharest. The original idea was to use Kinect to track puppets in real time, allowing kids learning to puppeteer to put on a show that is both physical and digital. The built-in Kinect skeleton tracking didn't work at that small scale: it turns out the existing models were never trained on anything smaller than 1.2 m. OpenPose had just come out at the time: I managed to compile it from source and run it on a then-current GPU, but couldn't squeeze out more than 12 fps.

With time ticking, and many computer vision options tested (tracking LEDs, tracking coloured bands, etc.) and discarded, I landed on something that was fast and accurate enough: using inertial measurement units (IMUs) to track joint orientations. This solved occlusion problems, such as one character carrying another in their arms. James McVay, a brilliant and creative electrical engineer, joined the team and helped move from my basic two-joint proof of concept (POC) to the full 10-joint system we were aiming for.

How did you decide what problem to solve?

We were brainstorming ideas, and it was Jessica's suggestion that connected the pieces of the brief so nicely. Given the environmental situation we're in, the concept is great even at toy scale: it's still valuable to build prototypes that can be scaled up and that reveal challenges and opportunities for innovation along the way. Conceptually, it's interesting to design a system that can take apart another system. In practice, as we develop the project, we're getting reacquainted with LEGO (after too many decades to admit 🙂) and discovering the physical constraints of servo precision, torque and plastic strength. Overall, doing as much as possible with as little as possible seems like a good idea for the environment, and it also applies nicely to the competition.

What is the most exciting part of #OAKDLiteContest to you?

Getting to play with LEGO as adults is a lot of fun indeed; however, the most exciting part is seeing what we can achieve with the OAK-D-Lite device.

Since it's open source, it's a great opportunity to understand stereo vision at a lower level, use it for depth information and play with the state-of-the-art models available.

Speaking of machine learning, it’s also an opportunity to understand a full machine learning pipeline from dataset creation all the way to optimisation and deployment to an edge device.

What do you think / feel upon learning you were selected as a Finalist?

How fortunate to be selected as a Finalist!

We get to be kids again with the LEGO setup and take that further with the serious engineering behind the OAK-D-Lite. So much fun to be had!

What, if anything, has surprised you so far about the other projects you've seen?

Having had a quick look at #OAKDLiteContest, it's great to see creativity and playfulness shine.

I already see a couple of projects that give me that good kind of "I wish I'd thought of that first" motivation.

Do you have any words for your fellow competitors?

We wish our fellow competitors the best of luck. Keep going! Only a few weeks to go!

Looking forward to seeing not only the final results, but equally the process and the ideas that didn’t make the cut.

Where should readers follow you, to best keep up with your progress? (Twitter, LinkedIn, etc)

We’ve been quite secretive with our updates: hopefully we can improve on that soon.

In the meantime, anyone interested in creative uses of computers can follow us here:

- https://twitter.com/innjesst

- https://www.instagram.com/shedrawswithcode/

- https://www.instagram.com/jhm.nz/

- https://www.youtube.com/c/JamesMcVayNZ/videos

- https://twitter.com/orgicus

- https://www.linkedin.com/in/georgeprofenza

Team Profile: Team B-AROL-O

What is your project? Briefly describe your problem statement and proposed solution

On an industrial production line, items (in our area of expertise, bottles) follow a predefined process where every kind of object has its own path from the start of the line to end-of-line packaging.

Imagine a production line where different items need to be mixed in the final package. Imagine that this mix is different for each end customer, who placed their order online.

ARNEIS (short for Automated Recognizer, Network-Enabled, Items Sorter) is our proposed solution to the problem just described.

We imagine that those items, coming from different production lines, are mixed onto a final conveyor belt where the ARNEIS system can recognize the requested product and sort it into the correct package.

Of course, a full-blown solution to the described problem cannot be built within the scope of the OpenCV Spatial AI Contest, given our constraints on BOM (bill of materials) and time; however, we believe that the basic function of recognizing and sorting can be tested with simple embedded electronic components, plastic bricks and Open Source code.

The main components of the ARNEIS system are the following:

The eye and the mind: A crucial component in this project is the vision system and the intelligence that analyzes the images to match them against known patterns.

The camera can be installed near a conveyor belt where items are in transit. Camera images have to be analyzed by a dedicated CPU. From an integration standpoint, the best solution for industrial projects is to integrate this CPU inside the camera case.

The arm: There are several solutions for sorting items from a running conveyor belt; each of them is optimized for different non-functional requirements such as flexibility, speed and cost.

The simplest solution is to use an actuator (in Italian, "espulsore") that synchronously ejects the item onto either a new conveyor or an external conveyor based on a command sent by the PLC (programmable logic controller).

The command should be synchronized, taking into account how far the item travels on the conveyor between the moment the camera captures the frame and the moment the ejection is performed. This is the solution our team has been developing during Phase 2 of the OpenCV Spatial AI Contest.
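The synchronization described above boils down to simple kinematics: at a constant belt speed v, an item captured at time t0 reaches an ejector a distance d downstream at t0 + d/v, so whatever time capture, inference and communication have already consumed must be subtracted from that. Here is a minimal Python sketch, assuming constant belt speed and timestamps taken from a single shared clock; the function name, the actuator-lag parameter and the numbers are illustrative assumptions, not ARNEIS code.

```python
from typing import Optional


def ejection_delay_s(
    distance_mm: float,
    belt_speed_mm_s: float,
    capture_ts_s: float,
    now_ts_s: float,
    actuator_lag_s: float = 0.0,
) -> Optional[float]:
    """Seconds to wait, starting now, before triggering the ejector.

    distance_mm:     belt distance from the camera's field of view to the ejector
    belt_speed_mm_s: conveyor speed, assumed constant
    capture_ts_s:    timestamp of the frame in which the item was detected
    now_ts_s:        current time on the same clock (after inference finished)
    actuator_lag_s:  hypothetical mechanical delay between command and push

    Returns None if the item has already passed the ejector.
    """
    if belt_speed_mm_s <= 0:
        raise ValueError("belt speed must be positive")
    travel_s = distance_mm / belt_speed_mm_s  # time from capture to ejector
    elapsed_s = now_ts_s - capture_ts_s       # already spent on capture + inference
    wait_s = travel_s - elapsed_s - actuator_lag_s
    return wait_s if wait_s >= 0 else None


if __name__ == "__main__":
    # 150 mm to the ejector at 100 mm/s, result ready 200 ms after capture,
    # 50 ms actuator lag (all illustrative numbers).
    wait = ejection_delay_s(150.0, 100.0, capture_ts_s=10.0, now_ts_s=10.2, actuator_lag_s=0.05)
    print(f"fire ejector in {wait:.2f} s")  # fire ejector in 1.25 s
```

Many industrial lines track belt position with an encoder instead of assuming constant speed; in that case the same logic works in millimetres of belt travel rather than seconds.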

What is your team origin story? How did you get together?

The B-AROL-O Bottling Systems Team takes its name from a few facts:

- First of all, all the Team Members have a full-time job at a leading Italian company specialized in packaging machinery for the Food and Beverage industry;

- We are all based in Piedmont, which is one of the most important regions of Italy and quite famous for its vineyards, wines and spirits;

- "Barolo" is a fine red wine made from grapes grown in a small area of Piedmont close to our hometown, yet it is well known and its bottles are exported all over the world.

To summarize, we chose the iconic name of a wine, which reflects our Italian origins as well as our experience in the packaging industry. By the way, the "Arneis" (white) and "Barolo" (red) wines are like Yin and Yang, giving us the energy to burn on the Contest!

How did you decide what problem to solve?

Honestly, we did not spend much time selecting the problem, partly because we learned about the OpenCV Spatial AI Contest only a few days before the Phase 1 deadline. Instead, we chose something we are familiar with from our everyday jobs, while trying to fit the contest's constraints on materials and timeline.

What is the most exciting part of #OAKDLiteContest to you?

We would list the following:

- The technology: OAK-D-Lite is an awesome piece of hardware; plus, AI and CV are exciting topics we will have the chance to learn about while working on this project

- The community around OpenCV and the strong support by Luxonis and all the involved parties

- Playing with LEGO sets (and here Gianpaolo would add: without stealing them from my children 🙂)

What do you think / feel upon learning you were selected as a Finalist?

Two opposite feelings: Joy and Fear.

Initially we couldn’t believe we made it, given the short time we had when preparing the submission to Phase 1.

Why Joy? The news was really exciting; we never would have believed we'd get LEGO sets as a Christmas present 🙂

Moreover, being selected from among so many competitors meant that our idea appealed to experienced people within the OpenCV community, which is already a big achievement for us.

Why Fear? We want to deliver according to the ARNEIS project plan, but we know we have so much to do and we are only three people with little spare time. However, we are confident that the Community will help us achieve our goal. By the way, ARNEIS has been an Open Source project from the very beginning, so everybody may benefit from what we create.

What, if anything, has surprised you so far about the other projects you've seen?

When we submitted our proposal to Phase 1, luckily we did not dig too much into the projects developed in the previous editions of the OpenCV contests, otherwise we would have been ashamed and wouldn’t have submitted it 😉

Since being selected to continue to Phase 2, we have been watching videos and reading blog posts to learn from, and we have found so much value and expertise there; it is a real stimulus for us to do the best we can.

Do you have any words for your fellow competitors?

Don't be afraid: we are playing only with the motivation of learning from each other and having fun with LEGO and OpenCV.

We wish you the same fun we had so far!

Where should readers follow you, to best keep up with your progress? (Twitter, LinkedIn, etc)

We recommend following https://twitter.com/baroloteam, where we regularly post news and weekly updates about the ARNEIS project.

If you are more interested in the software side of the project, you may subscribe to https://github.com/B-AROL-O/ARNEIS or have a look at the ARNEIS project roadmap.

If, as we hope, you have any suggestions or feedback for us, please DM @baroloteam on Twitter, or submit issues or Pull Requests on GitHub.

That’s All For Now!

Thanks for reading this first in our series of highlights and team profiles. You can expect to learn much more about the teams in our contest as we continue through the build phase. If you want to see the latest before anyone else, subscribe to the OpenCV Newsletter, and follow the #OAKDLiteContest hashtag on Twitter and LinkedIn. See you next time!
