Phase 2 of OpenCV AI Competition 2021 is winding down, with teams having to submit their final projects before the August 9th deadline. But that doesn’t mean we’re slowing down with the highlights and team profiles! The action has kept up on social media and you truly love to see it.

In this post we’re featuring short interviews with some of the teams who have posted videos and pics online as part of OpenCV AI Competition 2021 using the #OAK2021 hashtag. Thanks a bunch to Team UTAR4Vision and the Dong Lao Rangers for talking with us this time! If you’d like to see your team here, send an email to phil @ opencv.org. If you missed the previous editions of this series featuring teams and cool videos from the Competition, go back and read Part 1, Part 2, Part 3, Part 4, and Part 5!

Some Highlights From The #OAK2021 Hashtag

- Team Cortic showed their implementation of fall detection using OAK-D

- Team Dong Lao Rangers demonstrated their autonomous guided vehicle project’s use of semantic segmentation

- Team Kauda with another weekly update, showing their digital twin robot arm in a new environment

- Team Spectacular AI demonstrates the fusion of GPS data with OAK-D for self-driving vehicles

- Team Re-Drawing Campinas has released a Python library called “Redrawing” which is used in their art project, Disc-Rabisco

- Team Kauda demonstrates emotion recognition using a 2-stage pipeline from Modelplace.AI

- Dong Lao Rangers also gave us a peek at the user interface for their guided vehicle project

- The quadrupedal Agri-Bot became more stable due to integrating OAK-D’s IMU to adjust for sudden shifts

- Team Grandplay shows off their robot companion, Elsa

- The self-driving ads bot, SEDRAD, learned semantic segmentation and more

- Team Getafe Musarañas’ Spatial Vision Poka-yoke shown detecting a Hand vs. Safety Glove and detecting correctly assembled parts

- Vecno Team put together a nice video showing an overview of their project, Cyclist Guardian

- Team Cortic also showed off measuring vital signs with OAK-D!

A note to team leaders: Stay tuned for instructions on where to submit your final project PDF, demo video, and/or source code. Now, onto the newest highlights!

Team Profile: Team UTAR4Vision

What is your project? Briefly describe your problem statement and proposed solution

Our project title is Visually Impaired Assistance in COVID-19 Pandemic Outbreak. We are dedicated to developing an assistance device that can better serve visually impaired or blind people in their daily lives, especially during the COVID-19 pandemic. Current assistive tools such as simple canes and guide dogs are functional, but they cannot help a blind person keep a safe distance from others, and social distancing is one of the measures taken to slow the spread of the disease. So, we proposed a system that helps blind people maintain physical distance from other people by using a combination of RGB and depth cameras. A real-time semantic segmentation algorithm on the RGB camera detects the location of a person, and the depth camera is used to estimate the distance to that person; if the person is less than 2 m away, we provide audio feedback through the headset. Of course, there are more features!
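The distance check described in this answer can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the team's implementation: it assumes a per-pixel person mask and a depth map in millimetres that is already aligned to it, and the function names, the use of the median, and the convention that a depth of 0 means "no reading" are all hypothetical choices made for the example.

```python
from statistics import median

SAFE_DISTANCE_M = 2.0  # the 2 m threshold mentioned in the interview


def person_distance_m(mask, depth_mm):
    """Return the median depth in metres over person pixels, or None.

    mask     -- rows of booleans, True where segmentation found a person
    depth_mm -- rows of depth values in millimetres, same shape as mask
    """
    samples = [
        d
        for mask_row, depth_row in zip(mask, depth_mm)
        for is_person, d in zip(mask_row, depth_row)
        if is_person and d > 0  # assumption: 0 marks an invalid depth reading
    ]
    if not samples:
        return None
    return median(samples) / 1000.0


def should_warn(mask, depth_mm, threshold_m=SAFE_DISTANCE_M):
    """True when a person is detected closer than the threshold."""
    distance = person_distance_m(mask, depth_mm)
    return distance is not None and distance < threshold_m


if __name__ == "__main__":
    mask = [[False, True], [True, True]]
    depth_mm = [[5000, 1500], [1600, 0]]
    print(person_distance_m(mask, depth_mm), should_warn(mask, depth_mm))
```

Taking the median rather than the minimum is one way to keep a few noisy depth pixels from triggering a warning; a real device would also need to decide how often to repeat the audio cue.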

Does your team have a funny “origin story?” How did you get together?

We formed the team 7 days before the Phase 1 submission deadline. Yes, it was the 24th of January, 2021! Most of us did not know each other. The team leader (Mr Ho Wei Liang) shared the competition details in a WhatsApp group that serves members of the Universiti Tunku Abdul Rahman (UTAR for short) Robotics Society. Most of us are from this society. After that, the team leader shared the news with the Head of Department at UTAR and tried to get more UTAR students to join the team.

How did you decide what problem to solve?

It was very difficult for us to decide, especially since the team had formed in such a short time. After the first discussion, the team had 3 days to decide what problem to solve. We reviewed a significant number of research papers, watched a lot of videos on YouTube and eventually came up with two directions: Visually impaired assistance and Miscellaneous. We think that “vision” is important for visually impaired people, especially during the ongoing COVID-19 pandemic, and we believe that we can make a huge difference for them with OAK-D. Thankfully, we were able to submit both proposals by the deadline and got shortlisted!

What is the most exciting part of #OAK2021 to you?

That #OAK2021 brought our team together, and thanks to it we could get 10 sets of OAK-D to turn this fascinating idea into reality. This project would not be possible for us without the competition!

What do you think / feel upon learning you were selected for Phase 2?

Thanks to OpenCV for giving us access to the online course, the OpenCV Crash Course. Because of the course, we learned how to set up the OAK-D on our PC easily and picked up some new applications along the way. We also learned a lot on the Discord channel. Fellow Discord members were helpful and willing to answer our questions and provide more information.

What, if anything, has surprised you so far about the competition?

The DepthAI documentation is fantastic! We think that the project could not have come this far without it. Huge shoutout to the documentation team for their effort!

Do you have any words for your fellow competitors?

We saw a number of inspiring projects in the Highlights blog posts. We look forward to learning more from them in the near future. Lastly, we wish them all the best!

Where should readers follow you, to best keep up with your progress? (Twitter, LinkedIn, etc)

Follow the UTAR4Vision Facebook page for more videos or information.

The members of UTAR4Vision are: Wong Yi Jie, Haw Chun Yuan, Wee Lian Jie, Lim Zhi Jian, Chong Yee Aun, Jamie Lee Ye Ker, Kelvin Chieng Kim Seng, Lau Kin Hoong, and Ho Wei Liang.

Team Profile: Dong Lao Rangers

What is your project? Briefly describe your problem statement and proposed solution

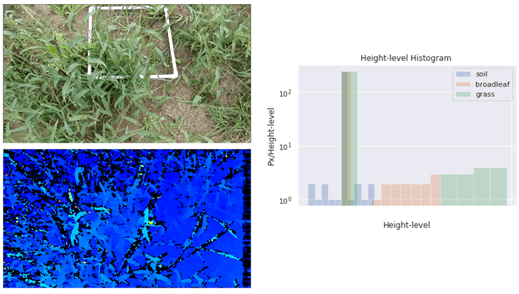

Accurate road segmentation is critical for mobile robots to perceive the safe road before planning to drive. Our project is to build a system that automatically generates labels for segmenting road obstacles, then train a semantic segmentation detector with our self-supervised labels. We utilize the depth information of the intelligent camera OpenCV AI Kit with Depth (OAK-D) in collecting data and running real-time predictions. Our solution aims to save time in labeling data and perform high-speed real-time segmentation for mobile robots.

Does your team have a funny “origin story?” How did you get together?

Dai-Dong, Thien-Phuc, and I have been friends for a long time, since we were in Vietnam. Moreover, we all come from the IVAM Laboratory at the Electrical Engineering Department, National Taiwan University of Science and Technology (NTUST). Dai-Dong and Thien-Phuc are currently Ph.D. candidates whose research interests include robotics and image/video processing. I am a master’s student whose research fields include computer vision and autonomous vehicles. The same interests brought us together to join the OpenCV Spatial Competition 2021. We also appreciate the guidance and advice from Professor Chung-Hsien Kuo and the significant support from our laboratory in completing our project.

How did you decide what problem to solve?

Automated Guided Vehicles (AGVs) are developed for various applications, such as material delivery, forklifts, and port automation, based on their capacity to navigate independently through their environments. Furthermore, especially during the COVID-19 epidemic, AGV robots in restaurants, hospitals, and quarantine sites are essential. As a result, a system for avoiding static and dynamic road obstacles is urgently needed for AGV robots to improve autonomous navigation. With the fast advancement of deep learning, mobile robots may now perform autonomous navigation based on training data. Thus, in this challenge, we set out to create an automatic system for labeling ground truth, then train a detector to perform real-time predictions that localize obstacles in three dimensions (X, Y, Z) in real-world coordinates, to enhance the navigation performance of AGV robots in practical applications.
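As a rough illustration of how a segmented pixel and its depth value can be turned into X, Y, Z coordinates, the standard pinhole camera model can be sketched as follows. This is not the team's code: the function name and the intrinsic values (`fx`, `fy`, `cx`, `cy`) are hypothetical, and the result is expressed in the camera frame, so a further transform would be needed to reach the robot or world frame.

```python
def pixel_to_camera_xyz(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth in metres into camera coordinates.

    fx, fy -- focal lengths in pixels (hypothetical values in the example)
    cx, cy -- principal point in pixels
    Returns (X, Y, Z) in metres, with Z along the optical axis.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m


if __name__ == "__main__":
    # Made-up intrinsics for a 640x480 image, for illustration only.
    fx = fy = 500.0
    cx, cy = 320.0, 240.0
    # An obstacle pixel 100 px right of the image centre, 2 m away.
    print(pixel_to_camera_xyz(420, 240, 2.0, fx, fy, cx, cy))
```

In practice the intrinsics would come from the camera's calibration data rather than being hard-coded.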

What is the most exciting part of #OAK2021 to you?

Thanks to the OpenCV Spatial AI Contest 2021, we witnessed great work and applications from many fantastic teams. In addition, it is an excellent opportunity for our team to cooperate and develop a practical application from various perspectives.

What do you think / feel upon learning you were selected for Phase 2?

We were very delighted and proud to be selected for Phase 2. It means our proposed idea is interesting and beneficial to practical applications. We were also excited to try the upcoming OAK-D camera with many applications powered by deep learning techniques.

What, if anything, has surprised you so far about the competition?

Our first idea was about taking advantage of depth information from the OAK-D camera. However, the first versions of the DepthAI library were not good at outputting quality disparity maps or at aligning the RGB image with the depth image. In that situation, we had to do a lot of research to find another solution, and we posted our issues to the DepthAI GitHub. Surprisingly, we quickly got active responses and support from the DepthAI team. In particular, we would like to say thank you to Brandon Gilles and his team for their contributions to society.

Do you have any words for your fellow competitors?

We want to thank the other teams for their innovative applications. All of them deserve to win. We wish them the best of luck and hope they keep moving forward on their ideas with great enthusiasm. Be patient, great things take time.

Where should readers follow you, to best keep up with your progress? (Twitter, LinkedIn, etc)

Our updates about the project are on our LinkedIn pages:

More To Come!

Thank you for reading this sixth post in our series of highlights and team interviews. These are just a few of the over 250 teams participating in the competition, and we wish them all the very best of luck! If you’re an AI creator who wants to join in on the fun, why not buy yourself an OAK-D from The OpenCV Store?

Stay tuned as Phase 2 winds down, and follow the #OAK2021 tag on Twitter and LinkedIn for real-time updates from these great teams. Don’t forget to sign up for the OpenCV Newsletter to be notified when new posts go live, and get exclusive discounts and offers from our partners.

If you’re in the Competition and would like to see your team highlighted or interviewed here, reach out to Phil on LinkedIn! See you next time!
