It’s been a few weeks since our last post, but things have definitely not slowed down in OpenCV AI Competition 2021! We’ve got a slew of highlights in this post, as well as interviews with two more of the amazing teams in this worldwide competition.

In this post we’re featuring a short question and answer session with some of the teams posting cool stuff online as part of OpenCV AI Competition 2021 using the #OAK2021 hashtag. Many thanks to teams EyeCan and Clara for agreeing to be interviewed this time! If you’d like to see your team here, drop me a line at phil @ opencv.org. If you missed the previous editions of this series featuring teams and cool videos from the Competition, go back and read Part 1, Part 2, and Part 3!

Some Highlights From The #OAK2021 Hashtag

So many teams are posting for the first time, and we love to see it! This update has the most new stuff we’ve seen at once so far. Thanks for sharing, all.

- Tracking and pose estimation for cows from Team Roc4t

- Big Orange the robot uses SLAM and YOLO v4 to deliver an item to a person identified by name, in another room

- Realtime display of 3D objects with OAK-D and Jetson Nano from Team Calgary Storm

- Team Kauda demonstrates Spatial MoveNet + Blaze Hands for collaboration and worker safety

- Team Cortic shows multiple OAK-Ds working together for real time driving feedback

- A magic mirror concept from Team Cortic. They’ve been busy!

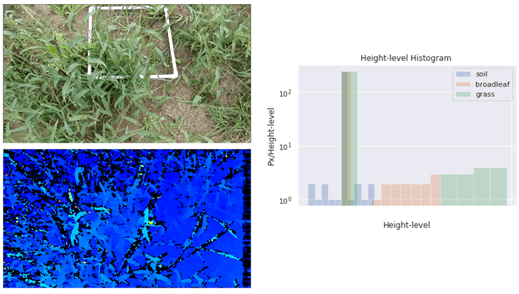

- Semantic segmentation shown on SHL Robotics’ self-driving robot and its 2D navigation markers

- Team Deepflow Tesseract’s quadrupedal agriculture bot gains an OAK-D robot arm

- Real-time yoga pose recognition and estimation from team Calcutta Devs

- Multi-camera left/right + Color + Disparity by Team Getafe Musarañas

Q&A With Team EyeCan

What is your project? Briefly describe your problem statement and proposed solution.

Labeling data is one of the main problems today in tuning AI-based vision systems. eyecan.ai deals with this very thing, and has a patented solution to label data fully automatically using robots. In this challenge, however, we wondered whether it was also possible to do it with a simple conveyor, exploiting the motion of objects and an RGB-D sensor to produce labels for training object detectors without human intervention. Our solution is based on a TrinocularStereo network, deployed directly on the OAK chip to obtain a dense 3D reconstruction of objects moving on a conveyor belt. With the 3D reconstruction we are able to segment objects individually and generate labels (bounding boxes and/or masks), then train a detector to recognize them. Thanks to the practicality of the OAK (small size and versatile optics), we can use a sensor of this type dynamically, moving it at will around the scene, unlike a classic industrial camera that cannot be moved once fixed.
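As a rough illustration of the labeling idea described above (not Team EyeCan’s actual implementation), here is a minimal Python sketch that turns a depth map into bounding-box labels. It assumes a flat belt at a known distance from the camera, and it stands in a simple height threshold plus 4-connected flood fill for the team’s 3D segmentation. The function name `label_objects` and its parameters are hypothetical.

```python
from collections import deque


def label_objects(depth, belt_depth, min_height=0.01, min_pixels=4):
    """Return one (x_min, y_min, x_max, y_max) box per object above the belt.

    depth      -- 2D list of per-pixel distances from the camera, in metres
    belt_depth -- distance from the camera to the empty belt (assumed flat)
    min_height -- how far above the belt a pixel must be to count as an object
    min_pixels -- regions smaller than this are discarded as noise
    """
    rows, cols = len(depth), len(depth[0])
    # Foreground mask: pixels noticeably closer to the camera than the belt.
    fg = [[belt_depth - depth[r][c] > min_height for c in range(cols)]
          for r in range(rows)]
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if not fg[r][c] or seen[r][c]:
                continue
            # Flood-fill one connected region and track its extent.
            queue = deque([(r, c)])
            seen[r][c] = True
            x_min = x_max = c
            y_min = y_max = r
            count = 0
            while queue:
                y, x = queue.popleft()
                count += 1
                x_min, x_max = min(x_min, x), max(x_max, x)
                y_min, y_max = min(y_min, y), max(y_max, y)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and fg[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if count >= min_pixels:
                boxes.append((x_min, y_min, x_max, y_max))
    return boxes
```

Each returned box could then be written out in whatever annotation format the detector’s training pipeline expects. A real system would also have to handle touching objects, sensor noise, and a belt that is not perfectly parallel to the camera.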

Does your team have a funny “origin story?” How did you get together?

We are a team that comes from both eyecan.ai, a spin-off of the University of Bologna, and the computer vision research group of the same university. We have always dealt with both object detection and stereo vision, and in OAK-D we have found the perfect embodiment of the two worlds!

How did you decide what problem to solve?

Our problem comes from the needs of the marketplace. AI adoption is low, especially in the industrial world, because the time to collect and label data is so high. Although there are many startups doing data tagging, the industrial world is very conservative and is reluctant to share its images externally to have them annotated. Another problem is that if there is a human in the data annotation loop, the time is much longer and the final system will surely contain errors that will affect its performance.

What is the most exciting part of #OAK2021 to you?

This challenge introduced us to some wonderful Computer Vision teams and applications that we hadn’t imagined. It was very inspiring to see how, despite the competition, many teams open-sourced some of their code during the challenge, and this motivated us to do the same!

What do you think / feel upon learning you were selected for Phase 2?

We were very proud to be selected because it meant that our idea had been appreciated and we couldn’t wait to try the device because we know many companies that would love to own it instead of us 🙂

What, if anything, has surprised you so far about the competition?

We were very surprised by the creativity of the other teams and the spread of computer vision into almost every field.

Do you have any words for your fellow competitors?

There are so many teams that deserve to win, and I wish them the best of luck. For us, the real win was being part of this community and being able to share it.

Where should readers follow you, to best keep up with your progress? (Twitter, LinkedIn, etc)

On LinkedIn at: https://www.linkedin.com/company/eyecan-ai

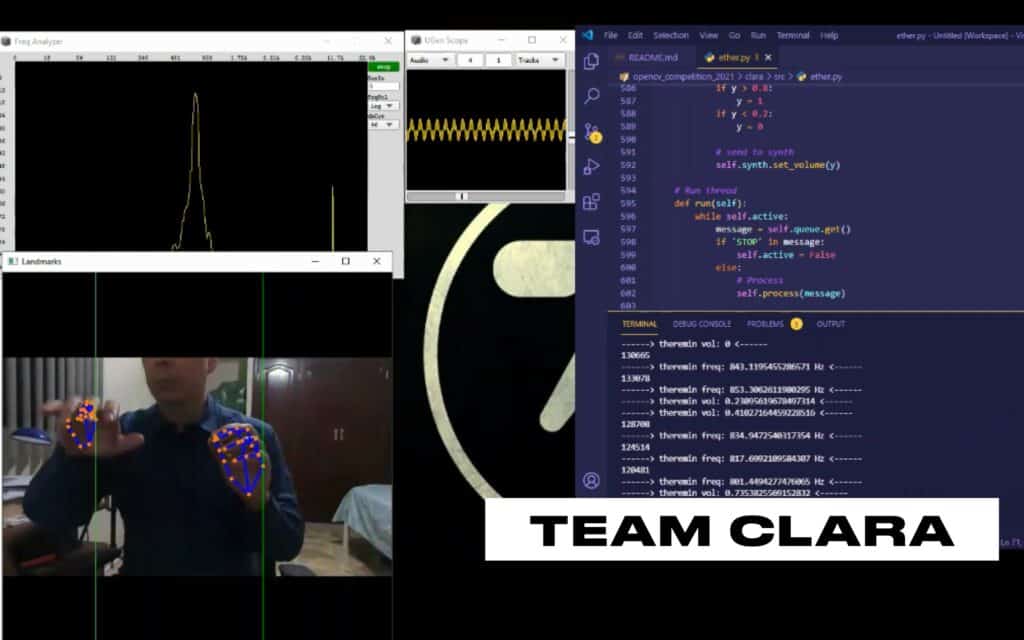

Q&A With Team Clara

What is your project? Briefly describe your problem statement and proposed solution.

Our project is to lay the foundations for a system that helps in performing and learning the Theremin. The problem with the Theremin, at least from a beginner’s standpoint, is that it provides no physical feedback about where the “notes” are (compare that with piano, guitar, or even string instruments like violin). This makes it a bit hard to figure out where to place the hand in order to produce notes. What if, assisted by AI, we could get a hint about how to play the instrument? This is where CV enters our project and the idea we proposed for the competition. We have found that it is a bit harder than we originally thought.

Does your team have a funny “origin story?” How did you get together?

Elisa and I have been friends for a long time. We met at university, but only in passing. After some years we met again. She is a professional musician who has directed bands and choirs, and I was part of one of those choirs. I am not a musician, but my hobby interest in computer music brought us together.

How did you decide what problem to solve?

We are both interested in music. Elisa is a musician and music educator. I have interests in computer music. In particular, I’ve always been frustrated that I never managed to play the piano despite years of trying. When I first saw the competition announcement, I thought about an idea I’d been pondering for a while: measuring the performance of piano learners (fingering, motion, expressiveness, etc.) using computer vision. However, I did some research and found the problem is way too hard for a three-month competition. I was going to give up, but then I thought about the Theremin, an instrument I had always wanted to experiment with. That is when I told Elisa about my idea, and together we managed to change the scope and nature of the project and submit it.

What is the most exciting part of #OAK2021 to you?

This has given me the opportunity (and the excuse) to learn more about the OpenCV library, learn how to use the OAK-D device and, at the same time, realize all the possibilities it opens for hobbyists. During the competition I came up with some ideas I want to develop further afterward. We are learning a bit of SuperCollider (a computer music framework), and I want to test the OAK-D for user interface experimentation in sound design.

What do you think / feel upon learning you were selected for Phase 2?

This was the first time I had heard about an OpenCV-organized competition, and I had never submitted an idea to any competition before, so I didn’t know what to expect in terms of results. It was surprising that we managed to get to Phase 2. When our kits arrived we were excited about winning something.

What, if anything, has surprised you so far about the competition?

First of all, the number of submissions to the competition is surprising. It shows how AI and CV are gaining attention and momentum, especially among the many people who want to start experimenting with them. On the other hand, I’ve discovered that some things in CV are harder than I originally thought. This is where a supportive community (mainly through the Discord channel) is really key to developing a strong group of adopters. I think we have all been learning and experimenting with something really new at the same time, and that is really amazing.

Do you have any words for your fellow competitors?

Sometimes things don’t work as desired, and you spend hours trying to debug or figure out a hack. My piece of advice is: don’t give up. If you are stuck, stop thinking about the project for some time, and after a while you will come back with new ideas. Also, develop ideas that complement your personal interests and passions. For us, it is about music and education.

Where should readers follow you, to best keep up with your progress?

- For news regarding progress please follow us at https://instagram.com/stories/elisa.andrade.fernandez/ (we will post soon)

- The project source code so far is at https://github.com/fortachong/clara

More To Come

Thanks for reading this fourth post in our series of team profiles. These are just a few of the over 200 teams participating in this huge competition, and we wish them all the very best of luck! If you’re an AI creator who wants to join in on the cool stuff, why not buy yourself an OAK-D from The OpenCV Store?

Stay tuned for more profiles, and follow the #OAK2021 tag on Twitter and LinkedIn for a steady stream of awesome stuff from these amazing teams. Don’t forget to sign up for the OpenCV Newsletter to be notified when new posts go live, and get exclusive discounts and offers from our partners.

See you next time!
