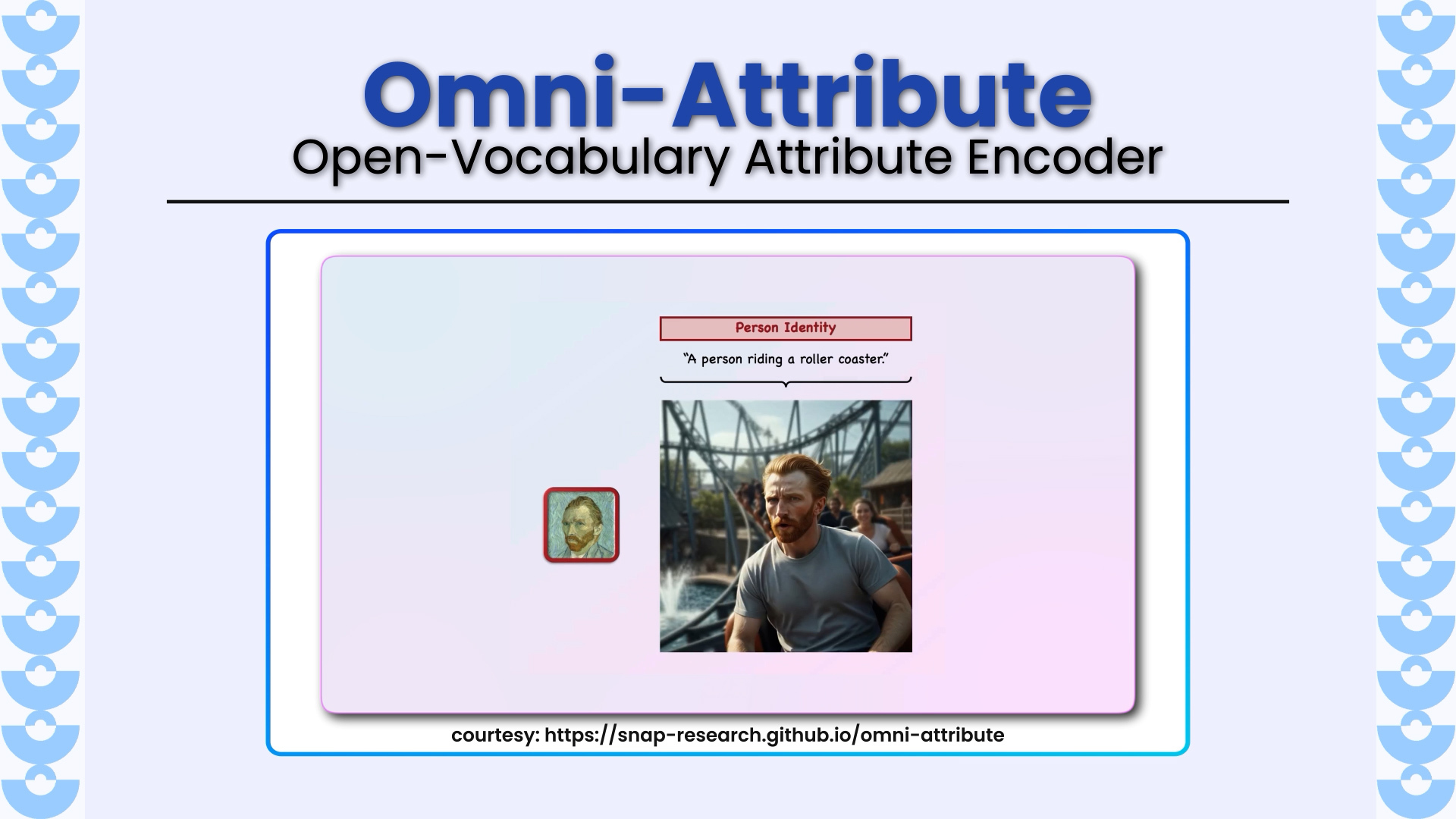

Omni-Attribute introduces a new paradigm for fine-grained visual concept personalization, solving a long-standing problem in image generation: how to transfer only the desired attribute (identity, hairstyle, lighting, style, etc.) without leaking irrelevant visual details. Developed by researchers from Snap Inc., UC Merced, and CMU, this work proposes the first open-vocabulary image attribute encoder explicitly designed for disentangled, composable, and controllable generation.

Key Highlights:

Open-Vocabulary Attribute Encoder (First of Its Kind)

Omni-Attribute jointly processes an image + textual attribute description to extract attribute-specific embeddings, unlike CLIP/DINO-style holistic encoders that entangle multiple visual factors. This enables precise control over what is transferred and what is suppressed.

Positive–Negative Attribute Supervision

A novel data annotation strategy uses semantically linked image pairs annotated with:

- Positive attributes (shared concepts to preserve)

- Negative attributes (differing concepts to suppress)

- This explicitly teaches the model attribute disentanglement and prevents “copy-and-paste” artifacts common in personalization.

Dual-Objective Training (Generative + Contrastive)

Training balances:

- Generative loss → preserves high-fidelity attribute details

- Contrastive loss → repels embeddings of irrelevant attributes

- Together, this produces clean, discriminative, attribute-level representations.

Composable Attribute Embeddings

Attribute embeddings from multiple reference images (e.g., identity from one image, lighting from another, style from a third) can be linearly composed to generate a single coherent image thereby enabling powerful multi-attribute synthesis.

LoRA-Tuned MLLM + Frozen Generator Design

Built on a LoRA-tuned multimodal LLM (Qwen2.5-VL) with a lightweight connector and frozen diffusion generator + IP-Adapter, preserving pretrained knowledge while enabling strong personalization control.

State-of-the-Art Results

Omni-Attribute outperforms CLIP, DINOv2, Qwen-VL, OmniGen2, FLUX-Kontext, and Qwen-Image-Edit across:

- Attribute fidelity

- Image naturalness

- Text–image alignment

It shows especially strong gains on abstract attributes (hairstyle, expression, lighting, artistic style), where prior methods struggle most.

Why It Matters

Omni-Attribute represents a foundational shift from holistic image embeddings to explicit, controllable, attribute-level representation.

This unlocks:

- High-precision image personalization

- Multi-concept compositional generation

- Cleaner editing without identity or background leakage

- Interpretable visual representation learning

It bridges vision–language understanding and controllable diffusion generation in a principled, scalable way.

Explore More

- Paper: arXiv:2512.10955

- Project Page: https://snap-research.github.io/omni-attribute

5K+ Learners

Join Free VLM Bootcamp3 Hours of Learning