In this Deep Learning era, we have been able to solve many Computer Vision problems with astonishing speed and accuracy.

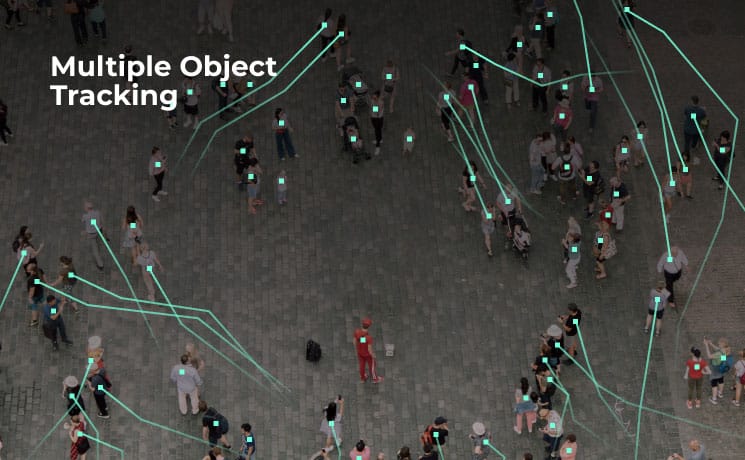

Yet, multiple object tracking remains a challenging task. Only a few of the current methods provide a stable tracking at reasonable speed.

In this post, we’ll discuss how to track many objects on a video – and we’ll use a combination of Neural Networks for this.

We’re not sharing code for implementing a tracker but we provide the technical pieces one to put together a good tracker, the challenges, and a few applications.

Some Applications of Object Tracking

Tracking is applied in a lot of real-life use cases.

Imagine you are responsible for office occupancy management, and you would like to understand how the employees use it: how they typically move throughout the building during the day, whether you have enough meeting rooms, and are there under- and overused spaces. Moreover, you may want to analyze whether the employees keep social distancing. For all there tasks, you’ll need to detect and track the people and analyze how they mode the space.

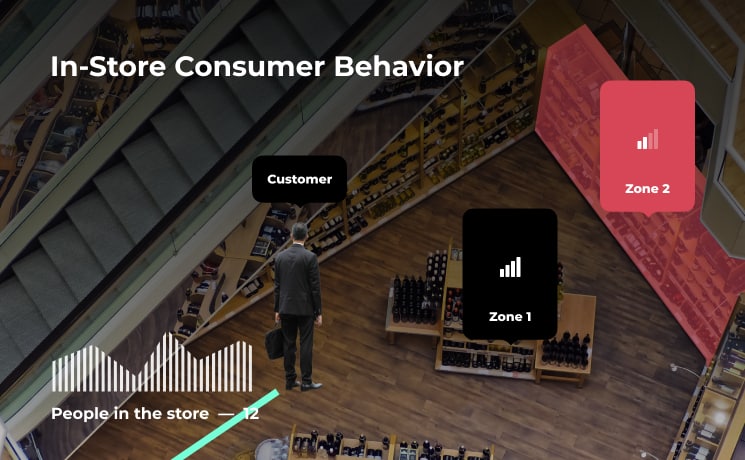

Another usecase is retail space management: to optimize the way people shop in your grocery store, you may want to build the track for every visitor and analyze them. You could also analyze why different space layouts lead to changes in sales: for example, if the shelves are moved, some areas of the shop may become less visited because they are off the main track.

In video surveillance and security, you would want to understand if unauthorized people or vehicles visit the restricted areas. For example, you may forbid walking in specific places or directions, or running on the premises. These usecases are widely applicable at facilities like construction sites.

The approach for multi-object tracking

For now, let’s only focus on people tracking and counting – but the same techniques can be applied to a variety of other objects.

A common way to solve the multi-object tracking is to use tracking by detection paradigm. To understand it, we’ll need to be familiar with two other Computer Vision algorithms: object detection and person re-identification.

Object Detection

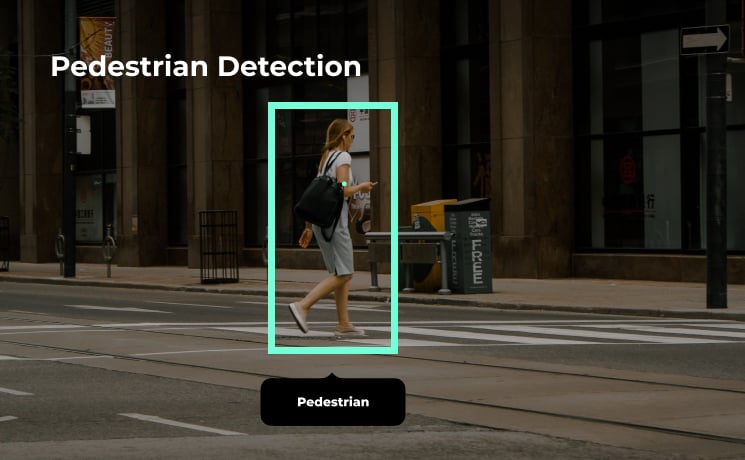

Object Detection is one of the most popular Computer Vision algorithms out there. Its goal is to find all the objects of interest on the image and output their bounding boxes. It is applied to a really wide range of objects – all the way from cars to bacteria. In our case, however, we are interested in people – so we’ll do pedestrian detection.

For many years now, pedestrian detection is almost exclusively solved by Deep Learning algorithms. And for a good reason – even despite this problem is a tough one, Neural Nets are great at it. They significantly advanced the state-of-the-art in detection and thus enabled so many real-world applications – including autonomous driving where there is absolutely no error margin in pedestrian detection.

Even despite this algorithm family is very advanced, we cannot say that pedestrian detection is a solved problem. Typically, detectors still have errors – like false positive detections for objects that are not people, or missed detections for people. Later, we will discuss how much these errors affect the tracking. Spoiler: a lot.

Person re-identification

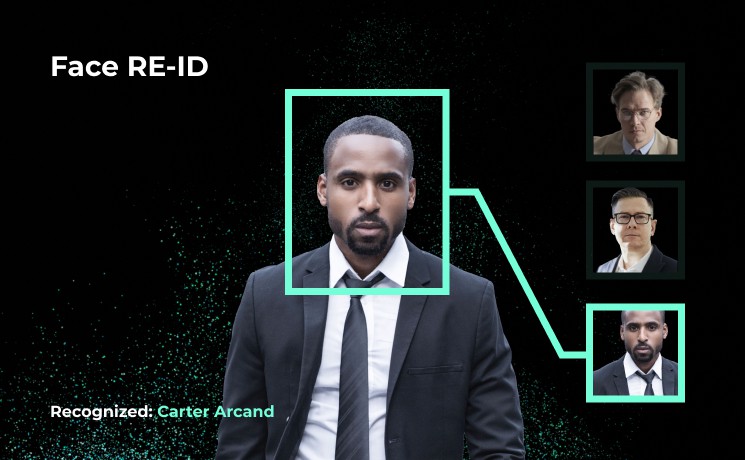

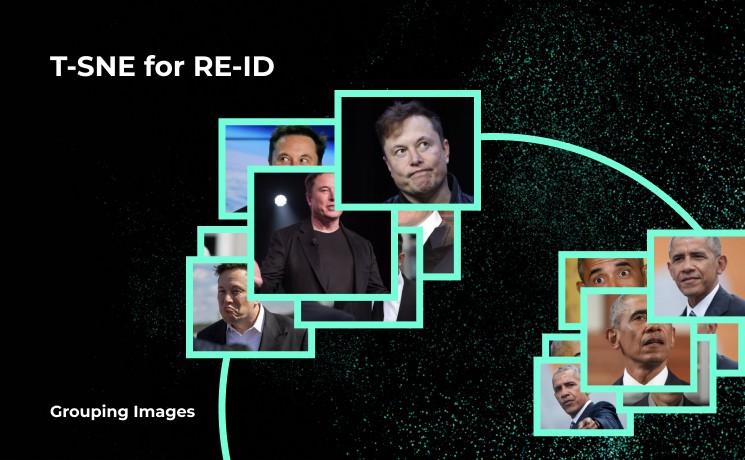

Re-identification algorithms, or re-id, need to be able to recognize the same person on different images. The task of this network is to build a vector of numbers that somehow describes the person visually. For different photos of the same person, these vectors should be similar, and as different as possible compared to the vectors describing the appearances of other people.

There are two branches of this approach:

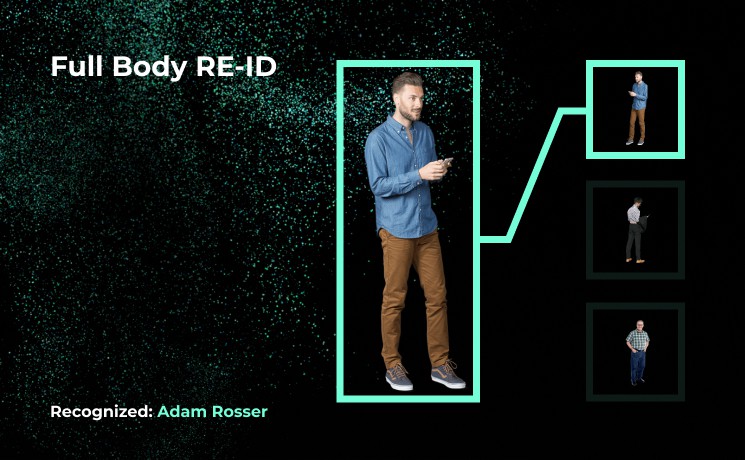

- Face re-id relies on, well, the face. It works exactly like in detective movies: given a headshot, it finds the person on a video from, say, airport. There are some downsides to this approach though: for example, it cannot detect someone from the back.

- Full-body re-id uses a full image of the person. This way it has more information and can recognize someone even from the back. A downside though is that it only can be used within a day – because it by design heavily relies to the outfit and does not work when one changes it.

The vectors Re-ID produces for each image can be treated as points in a multi-dimensional space. For a good Re-ID network, the points corresponding to the different photos of each person would form a separate cluster.

For us it’s important that with Re-ID, we can quantitatively compare how similar do the detections look. In multiple object tracking, we need to track the person within their visit of one specific location. Because of this, we’ll use full-body re-id: we’ll get more information, and we don’t want to track someone for several days.

Tracking by detection

Now let’s look into the tracking – and understand how we combine detection and re-id there.

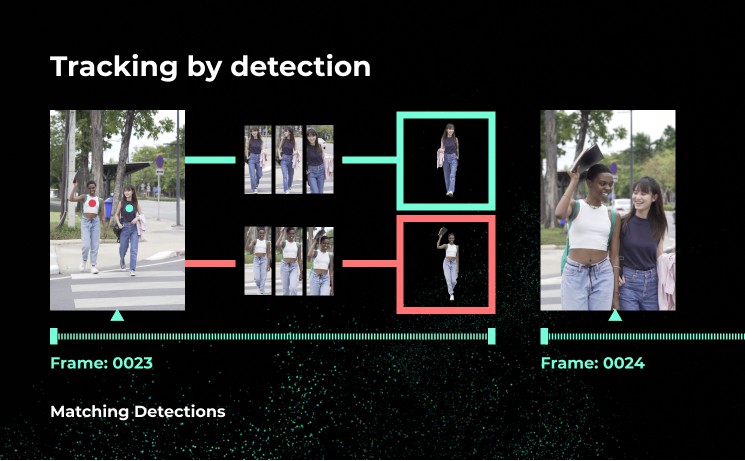

We’ll treat a video as a collection of consecutive frames.

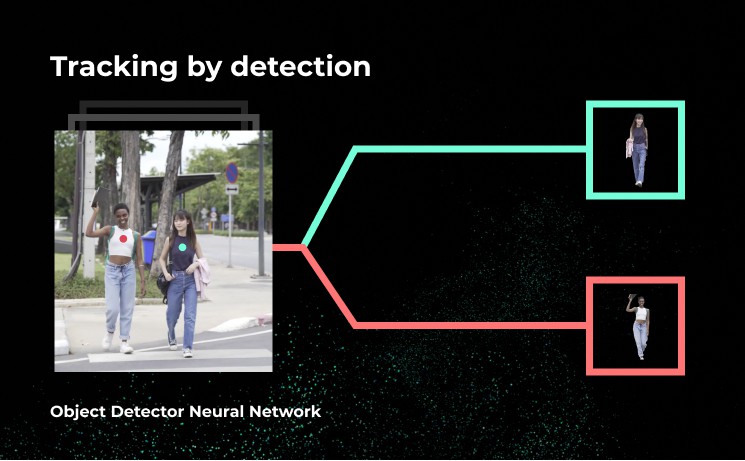

Imagine we have two consecutive frames of a video. On every frame, we’ll first detect people using an object detection neural network. This way we’ll get the bounding boxes for the people on each frame.

Then we’ll match the detections from the second frame to the ones on the first.

Now imagine that we followed the same process for many frames of a video. This way, we’ll have a track corresponding to every person we saw.

Matching tracks to detections

Now matching is the tricky part here. For two detections from consecutive frames we need to decide whether they correspond to the same person. To do that, we’ll use three pieces of information about both boxes:

- How close their appearance is. Here we’ll compare the vectors produced by the re-id network for both images.

- How close their centers are on the consecutive frames. Given a decent framerate of the video, we can assume that the person cannot suddenly move from one corner of the image to another – which means that the centers of the detections of the same person on consecutive frames must be close to each other.

- The sizes of the boxes. Again, the sizes should be consistent for consecutive frames.

In fact, many tracking algorithms use an internal movement prediction model. It remembers how the person moved previously and predicts the next location based on a movement model. People typically do not move randomly but rather go in a consistent direction – so these models really help match the detections to the right track.

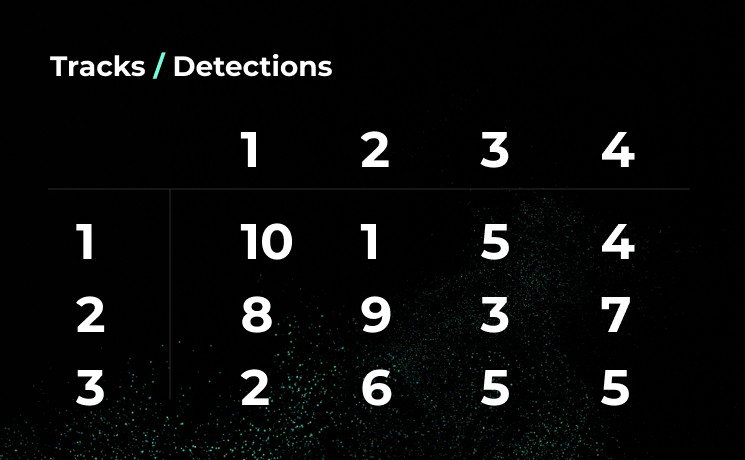

Now we’ll combine these three numbers into a measure of how likely is it that two boxes represent a person. If we do this for every possible combination of detections on the first and the second frames, we’ll get a matrix of combined distances between the boxes:

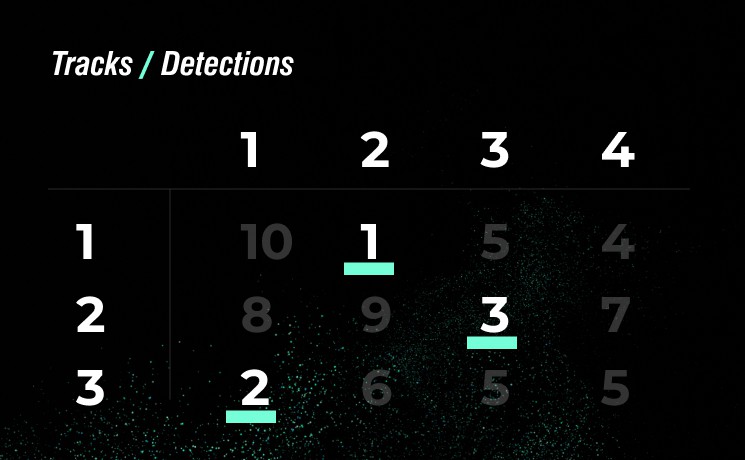

Now we need to assign new detections to old tracks in the best possible way. This is essentially an optimization problem – and to solve it, researchers typically use Hungarian algorithm. It finds the combination of the assignments in this matrix that would be an optimal solution in terms of our combined metric.

Tracking by Detection approach works well in a wide range of tasks, and is pretty fast. Its performance is mostly limited to the speed of the detector and re-id nets. With the rise of the smart boards like OpenCV AI Kit, it becomes possible to run the tracking in realtime even on the edge devices.

Of course, there are other methods for multiple object tracking out there. The most prominent group is graph-based approaches. These can be accurate, and often win benchmarks like MOTChallenge, but are slow – and don’t allow real-time inference. Moreover, they typically need to look into the “future” to build the correspondences, which again does not allow tracking on-the-fly.

OpenCV AI People Tracking Engine

At OpenCV.AI, we have created a state-of-the-art engine for object tracking and counting.

To do this, we engineered an optimized neural net that uses 370x less computations than commodity ones. Because of this, our tracking works on small edge devices, as well as in the cloud setup. It is fast, accurate and stable – and thus allows a huge variety of business applications.

If you would like to learn more about it, please write us at contact@opencv.ai.

Why Multiple Object Tracking is Hard

You may have noticed that the process we described is not exactly bulletprooof. There are so many things that can go wrong:

- The detector sometimes can miss people or create false positives.

On the one hand, we need a fast detector to be able to work in realtime. On the other hand, the detector needs to be very accurate to be able to track everyone and not to create excess false positive tracks. To create a nice tracker, we need a tradeoff between the accuracy and speed – as it often happens in Computer Vision.

- People can be occluded for some time and then get visible again. Tracking needs to “remember” this person from the past and continue tracking them in the same track.

To solve this one, we need to introduce a memory to the tracking. But we cannot remember everyone forever – not only because of the memory consumption, but also because it will make the matching part more complex.

Again, we need a tradeoff here! For example, we can remember a person for several minutes, and if they return later, the tracking will think it’s a new person.

- People in uniform are a tough case for tracking.

Indeed, there often are people in uniform or just similarly looking people – for example, attendants in shops or pharmacies, or people in black office suits. Re-identification will only be able to rightfully say that they look similarly. In this case, we’ll have to only rely to the positions and the sizes of the boxes.

Typically multiple object tracking algorithms are built on tradeoffs like these. Because of this, they are complex system with tens or hundreds of parameters. On the one hand, this allows customization for specific usecases – but on the other hand, it makes tracking systems complex and hard to build.

5K+ Learners

Join Free VLM Bootcamp3 Hours of Learning