In the fast-paced world of artificial intelligence, a new model is making waves for its innovative approach and impressive performance: MOLMO (Multimodal Open Language Model), developed by the Allen Institute for AI (Ai2). Unlike many of today’s powerful vision-language models, which are often proprietary and closed-source, MOLMO stands out as a shining example of open-source innovation. It proves that achieving state-of-the-art results doesn’t have to mean relying on a black-box system.

So, what makes MOLMO so special? It’s not just another VLM; it’s a statement. MOLMO’s core philosophy is built on three key pillars: a meticulously crafted dataset, a well-tuned architecture, and a commitment to openness that is pushing the entire field forward.

The Secret Ingredient: The PixMo Dataset

Most open-source VLMs have a dirty little secret: they rely on synthetic data generated by larger, proprietary models like GPT-4 or Claude. This creates a dependency and limits the community’s foundational knowledge of how to build performant models from the ground up. MOLMO breaks this cycle with its fully open and meticulously curated dataset, PixMo (Pixels for Molmo).

The data collection for PixMo is a fascinating innovation in itself. Instead of having annotators type out captions, a process that often leads to shallow descriptions, the Ai2 team asked them to describe images using speech for 60 to 90 seconds. This simple but ingenious “trick” resulted in incredibly detailed and “dense” captions, averaging over 200 words per image. This rich data is the secret sauce that enables MOLMO’s exceptional performance.

The PixMo dataset isn’t just about captions. It’s a comprehensive collection of diverse data, including:

- PixMo-Cap: The dense, speech-based captions for pre-training the model.

- PixMo-AskModelAnything: Human-authored image-question-answer triplets for instruction tuning.

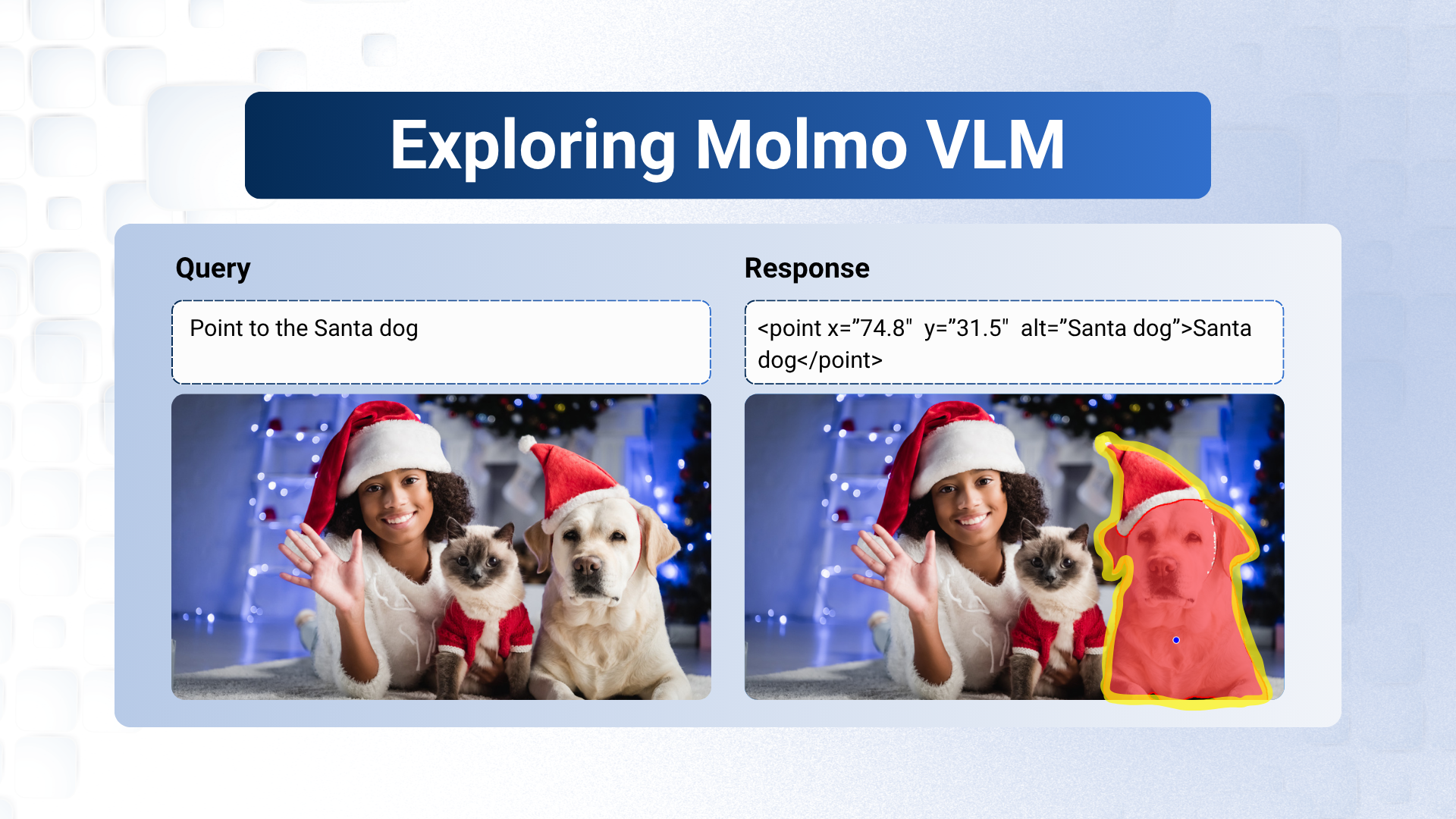

- PixMo-Points: A novel dataset that teaches the model to “point” to specific objects in an image. This capability is a game-changer for tasks like counting, grounding, and even enabling future VLM-powered agents to interact with their environment.

MOLMO Architecture

MOLMO’s architecture is simple yet highly effective, combining advanced components to seamlessly bridge vision and language. Developed by the Allen Institute for AI (Ai2), it stands out for its open-source approach, providing state-of-the-art performance without relying on proprietary systems.

Here’s a breakdown of its components and training process:

Key Components of MOLMO:

- Pre-processor:

- Processes the input image by splitting it into multi-square crops at different scales, optimizing the image for further encoding.

- Processes the input image by splitting it into multi-square crops at different scales, optimizing the image for further encoding.

- Image Encoder (CLIP):

- Uses CLIP’s vision transformer (ViT) to encode image patches into feature embeddings, capturing visual details from the image.

- Uses CLIP’s vision transformer (ViT) to encode image patches into feature embeddings, capturing visual details from the image.

- Connector Layer:

- Projects the image embeddings into the LLM embedding space.

- Utilizes pooling on each 2×2 patch window, reducing dimensionality while maintaining spatial context.

- Trained with a high learning rate and a warm-up phase to allow fast adjustments in the connector parameters.

- Projects the image embeddings into the LLM embedding space.

- LLM Decoder:

- Takes tokenized input queries and concatenates them with the image embeddings.

- Passes the combined information to the LLM decoder, which generates meaningful responses.

- Takes tokenized input queries and concatenates them with the image embeddings.

Training Pipeline:

- The training process involves two stages:

- Pre-training: MOLMO is first trained on the dense PixMo-Cap dataset.

- Supervised Fine-tuning: The model is then fine-tuned using additional PixMo datasets.

- Pre-training: MOLMO is first trained on the dense PixMo-Cap dataset.

- This approach avoids the complexity of freezing and unfreezing model components, resulting in an efficient and streamlined training pipeline.

MOLMO’s architecture and training process highlight the power of well-designed, open-source AI systems capable of combining vision and language seamlessly for a variety of real-world applications.

More Than Just a Model: A New Standard for Openness

The MOLMO family of models comes in various sizes, from the efficient 1B parameter Mixture-of-Experts model to the best-performing 72B model. All of them are built on the same principle: full openness.

Ai2 has not only released the model weights and code, but also the entire PixMo dataset and evaluation artifacts. This level of transparency is rare and invaluable for the research community. It allows others to reproduce results, build upon the work, and accelerate the development of open-source multimodal AI.

Applications and the Future

MOLMO’s unique capabilities, particularly its ability to “point,” open up exciting new possibilities. Beyond traditional VLM tasks like image captioning and visual question answering, MOLMO’s potential applications include:

- Embodied AI: Enabling robots and other agents to interact with the physical world by pointing to objects, navigation waypoints, or user interface elements.

- Detailed Analysis: Providing rich, detailed descriptions for complex images, documents, and charts.

- Advanced Counting: Accurately counting objects in a scene by pointing to each one.

In a landscape dominated by proprietary models, MOLMO VLM stands out as a powerful and much-needed example of how open science can lead to breakthroughs. By demonstrating that top-tier performance is achievable with open data and careful design, MOLMO is not only a fantastic model in its own right but also a vital catalyst for the future of open-source AI.

5K+ Learners

Join Free VLM Bootcamp3 Hours of Learning