Imagine you’re using an AI writing assistant to draft an email. It’s excellent at creating clear and concise text, but you need the email to reference specific updates from a recent team meeting and attach a relevant report. Although the assistant crafts polished content, it falters when it needs real-time context, like your meeting notes or files. Without direct access to this information, you’re left to provide the details manually, which defeats the purpose of seamless assistance.

This limitation underscores a broader challenge with large language models (LLMs). Although they are remarkable at processing vast amounts of data, they struggle with real-time context that lies outside their frozen training data. For AI agents to truly thrive, they need more than raw intelligence: they require dynamic access to files, tools, and knowledge bases, plus the ability to act on that information, such as automating tasks or updating documents. Historically, integrating models with external sources was messy, relying on fragile custom implementations that were hard to scale.

Enter the Model Context Protocol (MCP). Introduced by Anthropic in late 2024, MCP is an open standard designed to bridge the gap between AI assistants and the data-rich ecosystems they need to navigate. Instead of patchy, one-off integrations, MCP provides a universal framework for connecting tools and data sources, giving AI systems consistent access to diverse contexts. With MCP gaining traction among major AI players and open-source communities, it is paving the way for truly connected, agentic AI.

The Rise of the Model Context Protocol (MCP)

In November 2024, Anthropic introduced the Model Context Protocol (MCP). At first, it was overshadowed by discussions of advanced language models, but by early 2025, MCP had taken center stage. Teams realized that building “intelligent agents” was easier than connecting them to real-world data, which made MCP’s approach a long-overdue solution.

Today, Block (Square), Apollo, Zed, Replit, Codeium, Sourcegraph, and others have implemented MCP. Over 1,000 open-source connectors had emerged by February 2025, and the ecosystem grows with each addition. Anthropic has continued refining MCP’s specification and documentation and running workshops, speeding up adoption. Because MCP is open and model-agnostic, much like open standards such as USB or HTTP, it lowers the barriers to broad collaboration.

Why MCP Matters

Enthusiasm for MCP isn’t just about novelty; it’s about solving real pain points in machine learning and AI deployment. Here’s a closer look at the core benefits.

1. Reproducibility

- Complete Model Context: The context a model needs, such as datasets, files, and tool definitions, is exposed through MCP servers in one consistent format. Relevant data is fetched from these sources and fed to the LLM as context, giving better-grounded answers and making the same setup easier to reproduce.

2. Standardization & Collaboration

- Inter-organizational sharing: Many companies build specialized AI tools or maintain custom data sources. If such a tool or data source is exposed through an MCP server, another organization can plug it into its own MCP-compatible system with fewer roadblocks.

- Open-Source Integration: Communities like Hugging Face and GitHub rely on consistent standards to streamline how work is shared and discovered. MCP’s open, model-agnostic design makes it a strong candidate for unifying how AI tools and data connectors are shared across these ecosystems.

How MCP Works

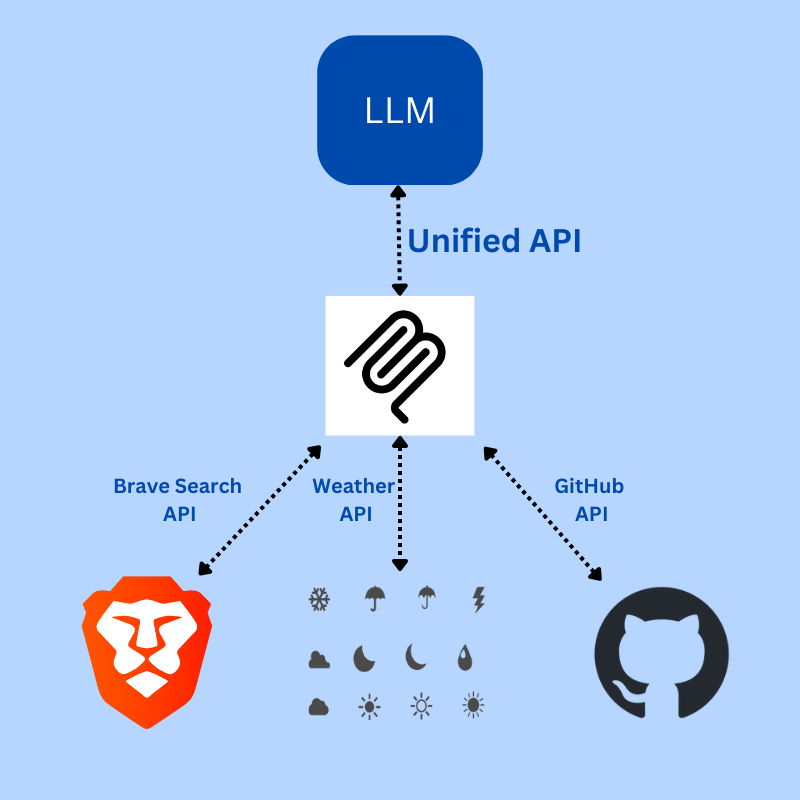

The Model Context Protocol (MCP) is a universal standard that enables seamless interaction between AI-powered applications and external data sources. It works by establishing a secure and efficient client-server architecture, where AI systems (clients) request relevant context from data repositories or tools (servers). MCP eliminates the need for fragmented integrations by providing a standardized framework for accessing real-time context, such as files, databases, or APIs. Through this protocol, AI assistants can not only retrieve information but also take meaningful actions, such as updating documents or automating workflows – bridging the gap between isolated intelligence and dynamic, context-aware functionality.
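
To make the retrieve-then-act flow concrete, here is a minimal in-process sketch under stated assumptions: there is no real transport or SDK, the server is a single Python function, and the resource URI `notes://meeting/latest` and the tool `send_email` are hypothetical. Only the method names (`resources/read`, `tools/call`) and the general JSON-RPC message shape follow the MCP specification; the result fields are simplified.

```python
import json

# Hypothetical data the toy server wraps.
MEETING_NOTES = "Q3 launch moved to Oct 14; design review on Friday."
SENT = []  # actions the toy server has performed

def handle(raw: str) -> str:
    """Toy MCP-style server: parse a JSON-RPC request, dispatch it, reply."""
    req = json.loads(raw)
    method, params = req["method"], req.get("params", {})
    if method == "resources/read" and params.get("uri") == "notes://meeting/latest":
        result = {"contents": [{"uri": params["uri"], "mimeType": "text/plain",
                                "text": MEETING_NOTES}]}
    elif method == "tools/call" and params.get("name") == "send_email":
        SENT.append(params["arguments"])  # pretend to send the email
        result = {"content": [{"type": "text", "text": "Email sent."}],
                  "isError": False}
    else:
        # Simplification: one generic error for any unknown method, URI, or tool.
        error = {"code": -32601, "message": f"Unsupported request: {method}"}
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})

def request(req_id: int, method: str, params: dict) -> dict:
    """Toy client: serialize a request, 'send' it, and decode the reply."""
    raw = json.dumps({"jsonrpc": "2.0", "id": req_id,
                      "method": method, "params": params})
    return json.loads(handle(raw))

# Step 1: retrieve context. Step 2: act on it.
notes = request(1, "resources/read", {"uri": "notes://meeting/latest"})
text = notes["result"]["contents"][0]["text"]
reply = request(2, "tools/call", {
    "name": "send_email",
    "arguments": {"to": "team@example.com", "body": f"Meeting update: {text}"},
})
print(reply["result"]["content"][0]["text"])  # Email sent.
```

In a real deployment the JSON strings would travel over a transport such as stdio instead of a direct function call, but the client-side logic would look much the same.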

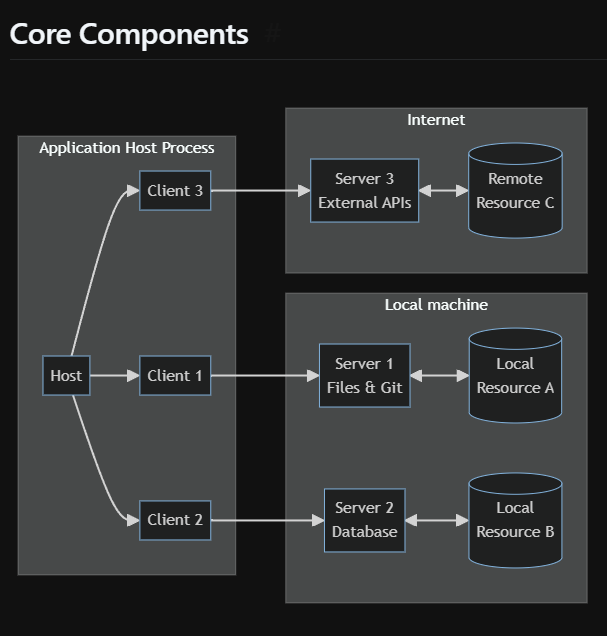

MCP: Architecture & Key Roles

The Model Context Protocol (MCP) is designed to bridge AI applications (or “agents”) with external systems, data sources, and tools, all while maintaining secure boundaries. At its core, MCP adopts a client–host–server pattern, aiming to standardize how different components communicate and share “context.” This approach is built atop JSON-RPC and emphasizes stateful sessions that coordinate context exchange and sampling.
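
Because sessions are stateful, a server has to track where each connection is in its lifecycle. The sketch below is a simplified model of the `initialize` request, the `notifications/initialized` notification, and normal operation. The class name `ToySession`, the server name, and the error code used for early requests are assumptions; the real specification also allows a few extra messages (such as pings) before initialization completes. Note that JSON-RPC notifications carry no `id` and never get a reply.

```python
import json
from typing import Optional

PROTOCOL_VERSION = "2024-11-05"

class ToySession:
    """Server side of one stateful MCP-style session (simplified lifecycle)."""

    def __init__(self) -> None:
        self.state = "uninitialized"  # -> "initializing" -> "ready"

    def _reply(self, msg_id, **body) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": msg_id, **body})

    def receive(self, raw: str) -> Optional[str]:
        msg = json.loads(raw)
        method = msg["method"]
        if "id" not in msg:  # notification: never answered
            if method == "notifications/initialized" and self.state == "initializing":
                self.state = "ready"
            return None
        if method == "initialize" and self.state == "uninitialized":
            self.state = "initializing"
            return self._reply(msg["id"], result={
                "protocolVersion": PROTOCOL_VERSION,
                "capabilities": {"tools": {}},
                "serverInfo": {"name": "toy-server", "version": "0.1"},
            })
        if self.state != "ready":
            # Error code chosen for this sketch (JSON-RPC "Invalid Request").
            return self._reply(msg["id"], error={"code": -32600,
                                                 "message": "Session not initialized"})
        if method == "tools/list":
            return self._reply(msg["id"], result={"tools": []})
        return self._reply(msg["id"], error={"code": -32601,
                                             "message": f"Method not found: {method}"})

session = ToySession()
too_early = json.loads(session.receive(
    json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
print(too_early["error"]["message"])  # Session not initialized

session.receive(json.dumps({"jsonrpc": "2.0", "id": 2, "method": "initialize", "params": {
    "protocolVersion": PROTOCOL_VERSION, "capabilities": {},
    "clientInfo": {"name": "toy-client", "version": "0.1"}}}))
session.receive(json.dumps({"jsonrpc": "2.0", "method": "notifications/initialized"}))
print(session.state)  # ready
```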

- Host Process

  - Acts as a “container” or coordinator for multiple client instances.

  - Manages lifecycle and security policies, e.g., permissions, user authorization, and enforcement of consent requirements.

  - Oversees how AI or language model integration happens within each client, gathering and merging context as needed.


In plain terms, the host is like the control tower for incoming AI “flights.” It decides which AI client gets to fly, who checks them in, and which runways (servers) they connect to.
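
The host's coordinating role can be sketched as a toy class that hands out one client per server, refuses servers the user has not approved, and is the only place where context from different clients gets merged. `Host`, `Client`, `consented_servers`, and the server names are hypothetical; real hosts enforce much richer policies.

```python
from dataclasses import dataclass, field

@dataclass
class Client:
    """One client = one session with exactly one server (1:1)."""
    server_name: str
    context: list = field(default_factory=list)  # private to this client

class Host:
    """Toy host: creates clients and enforces a consent policy."""

    def __init__(self, consented_servers: set[str]) -> None:
        self.consented = consented_servers
        self.clients: dict[str, Client] = {}

    def connect(self, server_name: str) -> Client:
        if server_name not in self.consented:
            raise PermissionError(f"User has not approved server '{server_name}'")
        client = Client(server_name)
        self.clients[server_name] = client
        return client

    def merged_context(self) -> list[str]:
        """Only the host sees across clients; it merges their context."""
        return [item for c in self.clients.values() for item in c.context]

host = Host(consented_servers={"calendar", "files"})
cal = host.connect("calendar")
cal.context.append("Free: Tue 10:00-11:00")
files = host.connect("files")
files.context.append("report.pdf (2 pages)")
print(host.merged_context())

try:
    host.connect("crm")  # never approved by the user
except PermissionError as err:
    print(err)
```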

- Client Instances

  - Each client runs inside the host and establishes a one-to-one, stateful session with a specific MCP server.

  - Handles capability negotiation and orchestrates messages between itself and the server.

  - Maintains security boundaries: one client shouldn’t snoop on resources belonging to another client.

  - Typically, these clients are embedded in AI applications or agents (like Cursor or Windsurf) that “talk” to servers through MCP’s standardized interfaces.


Think of the client as an AI agent wanting to access external tools or data. Once it’s “MCP-compatible,” it can easily connect to any MCP server—no extra glue code is needed.
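
Capability negotiation means the client only uses features the server declared during initialization. This sketch assumes a server capabilities object shaped like the one returned in an `initialize` result (`tools`, `resources`, and `prompts` keys, with sub-flags such as `listChanged` and `subscribe`); the `FEATURE_FOR_METHOD` mapping and the helper functions are hypothetical client-side conveniences, not part of the spec.

```python
# Server capabilities as they might appear in an `initialize` result:
# this server offers tools and resources, but no prompts.
server_caps = {"tools": {"listChanged": True}, "resources": {"subscribe": False}}

# Hypothetical client-side map from request method to the capability it needs.
FEATURE_FOR_METHOD = {
    "tools/list": "tools", "tools/call": "tools",
    "resources/list": "resources", "resources/read": "resources",
    "prompts/list": "prompts", "prompts/get": "prompts",
}

def can_call(method: str, caps: dict) -> bool:
    """Only use a method if the server declared the feature it belongs to."""
    feature = FEATURE_FOR_METHOD.get(method)
    return feature is not None and feature in caps

def supports_subscriptions(caps: dict) -> bool:
    """Optional sub-features are flags inside a capability object."""
    return bool(caps.get("resources", {}).get("subscribe", False))

for method in ("tools/call", "resources/read", "prompts/get"):
    print(method, can_call(method, server_caps))
print("subscribe:", supports_subscriptions(server_caps))
```

Checking capabilities up front lets a client hide or skip features a given server lacks instead of discovering the gap through runtime errors.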

- Servers

  - Provide specialized capabilities or resources in a standardized way so that any MCP client can use them.

  - Can be a local process or a remote service that wraps data sources, APIs, or other utilities (like CRMs, Git repos, or file systems).

  - Define “tools,” “prompts,” and “resources” that the client can invoke or retrieve.

  - Must adhere to security constraints and user permissions enforced by the host.


Essentially, servers are the AI ecosystem’s “toolboxes.” By exposing consistent interfaces, they solve the classic “N times M problem,” where you’d otherwise need separate integrations for every AI app and every data source. Build a server once, and any MCP-compatible client can tap into it.
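
A quick count shows why this matters. With $N$ AI applications and $M$ data sources, point-to-point integration needs one connector per pair, while with MCP each application implements a client once and each source exposes a server once (the numbers in the example are arbitrary):

$$
\underbrace{N \times M}_{\text{point-to-point integrations}} \;\longrightarrow\; \underbrace{N + M}_{\text{MCP clients and servers}}
$$

For instance, with $N = 5$ applications and $M = 10$ data sources, that is $5 \times 10 = 50$ custom integrations versus $5 + 10 = 15$ MCP implementations.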

For official specification details, see the MCP Architecture Reference (2024-11-05).

Features of an MCP Server

When building MCP servers, you’re effectively creating the building blocks that add crucial context to language models. In MCP’s framework, these building blocks are referred to as “primitives” and include:

- Prompts

- Resources

- Tools

These primitives act as the interface between client-side AI applications (agents) and the specialized environments or data sources the server manages. By properly implementing each primitive, server developers enable rich interactions and enhanced capabilities for language models.
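
To show how the three primitives fit together on the server side, here is a stdlib-only sketch of a registry. `ToyServer`, its registration helpers, the `git://repo/log` URI, and the `write_file` tool are hypothetical. The method names (`prompts/get`, `resources/read`, `tools/list`, `tools/call`) come from the MCP specification, but the result shapes are trimmed (real tool listings also carry a description and an input schema), and the official SDKs provide their own, richer helpers for this.

```python
from typing import Callable

class ToyServer:
    """Hypothetical registry for the three MCP primitives (stdlib only)."""

    def __init__(self) -> None:
        self.prompts: dict[str, str] = {}
        self.resources: dict[str, Callable[[], str]] = {}
        self.tools: dict[str, Callable[..., str]] = {}

    def prompt(self, name: str, template: str) -> None:
        self.prompts[name] = template

    def resource(self, uri: str):
        def register(fn: Callable[[], str]) -> Callable[[], str]:
            self.resources[uri] = fn
            return fn
        return register

    def tool(self, fn: Callable[..., str]) -> Callable[..., str]:
        self.tools[fn.__name__] = fn
        return fn

    def handle(self, method: str, params: dict) -> dict:
        """Answer a few MCP method names with simplified result shapes."""
        if method == "prompts/get":
            text = self.prompts[params["name"]].format(**params.get("arguments", {}))
            return {"messages": [{"role": "user",
                                  "content": {"type": "text", "text": text}}]}
        if method == "resources/read":
            uri = params["uri"]
            return {"contents": [{"uri": uri, "text": self.resources[uri]()}]}
        if method == "tools/list":
            return {"tools": [{"name": name} for name in self.tools]}
        if method == "tools/call":
            out = self.tools[params["name"]](**params.get("arguments", {}))
            return {"content": [{"type": "text", "text": out}]}
        raise ValueError(f"Unsupported method: {method}")

server = ToyServer()
server.prompt("summarize", "Summarize the following for {audience}.")

@server.resource("git://repo/log")
def git_log() -> str:
    return "a1b2c3 Fix login bug\nd4e5f6 Add README"

@server.tool
def write_file(path: str, text: str) -> str:
    return f"Would write {len(text)} chars to {path}"  # no real file I/O

print(server.handle("tools/call", {"name": "write_file",
                                   "arguments": {"path": "notes.txt", "text": "hello"}}))
```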

Primitives and Their Control Hierarchy

| Primitive | Control | Description | Example |
|---|---|---|---|
| Prompts | User-controlled | Pre-defined templates or instructions triggered by user actions | Slash commands, menu selections |
| Resources | Application-controlled | Contextual data attached and managed by the client | File contents, Git history logs |
| Tools | Model-controlled | Executable functions that the LLM can decide to invoke | API calls, file writing operations |
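
The control column can be read as a rule about who may trigger each primitive. The hypothetical `may_trigger` gate below encodes the table, plus the specification's guidance that users should approve tool invocations before they run; treating each primitive as triggerable by exactly one party is a simplification for illustration.

```python
# Who controls each primitive, per the table above.
CONTROLLER = {"prompt": "user", "resource": "application", "tool": "model"}

def may_trigger(primitive: str, initiator: str, user_approved: bool = False) -> bool:
    """Hypothetical host-side gate mirroring the control hierarchy.

    Prompts are picked by the user, resources are attached by the application,
    and tools are chosen by the model, but a model-chosen tool call still
    needs the user's approval before it runs.
    """
    if CONTROLLER.get(primitive) != initiator:
        return False
    if primitive == "tool":
        return user_approved
    return True

print(may_trigger("prompt", "user"))                     # True
print(may_trigger("tool", "model"))                      # False (no approval yet)
print(may_trigger("tool", "model", user_approved=True))  # True
print(may_trigger("resource", "model"))                  # False
```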

Use Cases

1. AI Assistant Managing Calendars

Imagine your AI assistant, say Claude Desktop, needs to schedule a meeting. Under the hood, Claude Desktop serves as an MCP host, which relies on an MCP client to connect with a calendar service. The calendar service itself runs as an MCP server. Here’s the workflow:

- AI Request: The assistant sends a request (e.g., to retrieve free time slots).

- Server Response: The MCP server fetches the user’s calendar data and returns it to the assistant.

- AI Output: With those available slots in hand, the AI crafts a suitable response or automatically schedules the meeting.
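
On the server side, fetching the user’s calendar data usually means turning busy intervals into free slots. A minimal sketch, assuming the server already holds the busy intervals as `datetime` pairs; the function name `free_slots`, the 30-minute minimum, and the sample times are hypothetical. The logic sorts the busy intervals, sweeps a cursor through the working day, and keeps any gap long enough for a meeting.

```python
from datetime import datetime, timedelta

def free_slots(busy, day_start, day_end, min_length=timedelta(minutes=30)):
    """Return (start, end) gaps of at least `min_length` inside the working day.

    `busy` holds (start, end) datetime pairs; they may overlap or be unsorted.
    """
    slots, cursor = [], day_start
    for start, end in sorted(busy):
        gap_end = min(start, day_end)  # never report time past the end of day
        if gap_end - cursor >= min_length:
            slots.append((cursor, gap_end))
        cursor = max(cursor, end)
    if day_end - cursor >= min_length:
        slots.append((cursor, day_end))
    return slots

day = datetime(2025, 3, 4)
day_start, day_end = day.replace(hour=9), day.replace(hour=17)
busy = [
    (day.replace(hour=9), day.replace(hour=10)),
    (day.replace(hour=11), day.replace(hour=12, minute=30)),
    (day.replace(hour=9, minute=30), day.replace(hour=10, minute=15)),  # overlaps
]
slots = free_slots(busy, day_start, day_end)
for start, end in slots:
    print(f"{start:%H:%M}-{end:%H:%M}")  # 10:15-11:00, then 12:30-17:00
```

The assistant would then pick one of these slots, or present them to the user, before asking the server to create the event.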

2. Secure AI in Healthcare

A medical AI system must securely access patient records. Leveraging MCP:

- Consent & Privacy: The server checks user permissions and enforces encryption and logging.

- Data Sharing: The MCP client fetches patient records only after ensuring compliance with healthcare data regulations.

- AI Interaction: The AI interprets that data (e.g., to draft a consultation summary or schedule follow-ups).
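
The consent-and-logging step can be sketched as a server-side handler that checks a consent list and writes an audit entry for every attempt, allowed or not. The stores, identifiers, and the `read_patient_record` name are hypothetical, and encryption is out of scope here; a real deployment would rely on its EHR system and the applicable regulations.

```python
from datetime import datetime, timezone

# Hypothetical in-memory stores; a real system would use an EHR backend.
RECORDS = {"patient-42": "BP 128/82; follow-up in 6 weeks"}
CONSENT = {("dr-lee", "patient-42")}  # (clinician, patient) pairs with consent
AUDIT_LOG: list[dict] = []

def read_patient_record(user: str, patient_id: str) -> str:
    """Server-side handler: check consent, log every attempt, then return data."""
    allowed = (user, patient_id) in CONSENT
    AUDIT_LOG.append({"time": datetime.now(timezone.utc).isoformat(),
                      "user": user, "patient": patient_id, "allowed": allowed})
    if not allowed:
        raise PermissionError(f"{user} lacks consent to view {patient_id}")
    return RECORDS[patient_id]

print(read_patient_record("dr-lee", "patient-42"))
try:
    read_patient_record("intern-7", "patient-42")
except PermissionError as err:
    print(err)
print(len(AUDIT_LOG))  # 2: both the allowed and the denied attempt are logged
```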

Both scenarios highlight MCP’s emphasis on user control, data privacy, tool safety, and LLM sampling controls—key pillars for trustworthy, real-world AI solutions.

Related Article: https://newsletter.maartengrootendorst.com/p/a-visual-guide-to-llm-agents

Conclusion

The Model Context Protocol (MCP) marks a significant leap forward in the journey toward seamless AI integration. By providing an open, standardized way to connect large language models to external tools and data sources, MCP tackles the longstanding challenges of interoperability, scaling, and security head-on.

As AI systems mature, the ability to interface with dynamic, real-world data becomes ever more critical. MCP paves the way for robust, secure, and highly modular AI architectures—much like TCP/IP once did for computer networking. With its growing ecosystem of adopters, SDKs, and off-the-shelf connectors, MCP stands poised to become the go-to standard for building responsive, intelligent applications across virtually every domain.
