In the bustling landscape of deep learning frameworks, PyTorch stands out as a versatile and dynamic tool loved by researchers and developers. But what exactly is PyTorch, what sets it apart, and why should you learn PyTorch in 2025 to get into AI?

What Is PyTorch, and How Does It Work?

PyTorch is an open-source machine learning library particularly suited for deep learning applications. It grew out of the Torch library, which was written in Lua, and brought the power of Torch to the Python community, fusing the simplicity of Python with robust deep learning capabilities. See the PyTorch research paper for more background.

At its core, PyTorch provides two essential features:

1. Tensor Computing

Just as NumPy provides multidimensional arrays, PyTorch offers tensors. These generalize matrices to N dimensions and serve as the basic building blocks of many deep learning algorithms. However, unlike NumPy arrays, PyTorch tensors can live on GPUs for accelerated computing.
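
As a minimal sketch (the values are arbitrary and chosen only for illustration), the snippet below creates tensors, applies a few NumPy-style operations, and moves work to a GPU only if one is available, falling back to the CPU otherwise:

```python
import torch

# A 2x3 tensor built from a nested Python list (dtype float32).
a = torch.tensor([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])

b = a * 2                  # elementwise arithmetic, as in NumPy
row_sums = a.sum(dim=1)    # reduce along each row
gram = a @ a.T             # (2x3) @ (3x2) -> 2x2 matrix product

# Higher-dimensional tensors work the same way, e.g. a batch of 4 RGB images of 8x8 pixels.
images = torch.zeros(4, 3, 8, 8)

# Use a GPU if one is available; otherwise everything stays on the CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"
a_dev = a.to(device)
gram_dev = (a_dev @ a_dev.T).cpu()  # copy the result back to the CPU
```

The same code runs unchanged on machines with or without a GPU, because the device is chosen at runtime.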

2. Automatic Differentiation

Training a neural network means repeatedly adjusting its parameters, and to do that you need gradients: how much the loss changes when each parameter changes. PyTorch’s Autograd engine computes these gradients automatically. And because PyTorch builds its computation graph dynamically, you can change the model as you work, which is great for some models and research situations.
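
For instance, in this minimal example (the function y = x**2 + 3x is only an illustration), Autograd computes dy/dx = 2x + 3 at x = 2, which is 7:

```python
import torch

# requires_grad=True tells Autograd to record operations involving x.
x = torch.tensor(2.0, requires_grad=True)

# Forward pass: y = x^2 + 3x, recorded as it runs.
y = x ** 2 + 3 * x

# Backward pass: computes dy/dx and stores it in x.grad.
y.backward()

print(x.grad)  # tensor(7.)
```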

To put it in simpler terms, imagine having a canvas where you can sketch, modify, and erase parts of your drawing in any order. That’s the kind of flexibility PyTorch offers when building and tweaking neural network models.

But flexibility isn’t the only selling point. PyTorch’s intuitive interface and its alignment with Python programming paradigms make it an appealing choice for those who want a seamless blend of coding and deep learning.

As we go deeper into the world of PyTorch, it will become evident why it has gained such immense popularity and how it might be the right tool for your next machine-learning project.

Evolution of PyTorch

Understanding its evolution sheds light on its design decisions and highlights its trajectory in the AI realm.

Torch and Lua

Before PyTorch, there was Torch, a scientific computing framework with wide support for machine learning algorithms. Torch used Lua, a lightweight scripting language known for its fast execution. While Torch was powerful, the deep learning community was increasingly gravitating toward Python and its rich ecosystem.

Birth of PyTorch

In 2016, researchers at Facebook’s AI Research lab (FAIR) decided to bring the power of Torch to the ever-growing Python community, leading to the creation of PyTorch. The goal was straightforward: Provide a flexible tool that maintains Torch’s capabilities but is deeply integrated with the Python experience.

Rapid Adoption

From its inception, PyTorch found favor among the research community. Its dynamic computational graph made experimentation easier. Researchers could tweak models on-the-fly, enabling a more iterative and organic development process.

TorchScript and Production

Recognizing the gap between research and production, PyTorch introduced TorchScript in its 1.0 version. TorchScript allowed for the conversion of PyTorch models into a format that could be optimized and run in a non-Python environment, bridging the gap between research prototypes and production deployment.
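
A minimal sketch of that workflow, using a hypothetical `Gate` module: `torch.jit.script` compiles the module, including its data-dependent `if` branch, and the result is saved and reloaded. Here it is saved to an in-memory buffer for convenience; in practice you would write a `.pt` file, which can also be loaded from C++ with libtorch. Newer PyTorch releases also provide `torch.export` for capturing models, so check the current documentation before choosing an approach.

```python
import io

import torch
import torch.nn as nn


class Gate(nn.Module):
    """Hypothetical module with data-dependent control flow."""

    def __init__(self) -> None:
        super().__init__()
        self.linear = nn.Linear(4, 4)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        h = self.linear(x)
        # Scripting compiles both branches; tracing would record only the path taken.
        if bool(h.sum() > 0):
            return torch.relu(h)
        return -h


model = Gate().eval()
scripted = torch.jit.script(model)  # compile the module to TorchScript

# Serialize and reload; the loaded module no longer needs the Python class definition.
buffer = io.BytesIO()
torch.jit.save(scripted, buffer)
buffer.seek(0)
loaded = torch.jit.load(buffer)

x = torch.randn(2, 4)
same = torch.allclose(model(x), loaded(x))  # both versions compute the same result
```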

Community and Ecosystem Growth

PyTorch’s design resonated with many, leading to a thriving community. This widespread adoption meant more libraries, tools, and integrations around PyTorch. Tools like Captum for model interpretability and integration with platforms like ONNX strengthened PyTorch’s position in the ecosystem.

Continued Innovations

With regular updates, PyTorch has continually embraced newer technologies, algorithms, and methods. Features like quantization and support for various hardware accelerators have kept PyTorch at the forefront of the deep learning wave.

The journey of PyTorch is proof of its commitment to flexibility, user-centric design, and innovation – from its Torch ancestry to its current omnipresence in AI labs worldwide.

PyTorch Ecosystem

The strength of any framework is determined not just by its core functionality but also by the ecosystem that surrounds it. PyTorch’s rise can be attributed both to its intrinsic features and to the tools, libraries, and extensions developed by its active community. Let’s explore the crucial components that form the PyTorch ecosystem:

TorchVision

An essential part of the PyTorch universe, TorchVision offers datasets, models, and transforms for computer vision. Whether you’re looking to utilize pre-trained models or need standard datasets like CIFAR-10 or ImageNet, TorchVision has you covered.

TorchText

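Tailored for natural language processing tasks, TorchText provides data loaders, vocabularies, and common text transformations, simplifying the preprocessing pipeline for text-based applications. Note, however, that TorchText is no longer under active development: its 0.18 release (April 2024) was announced as the last stable release.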

TorchAudio

For audio processing, TorchAudio comes equipped with popular datasets, model architectures, and audio transformations.

ONNX Integration

The Open Neural Network Exchange (ONNX) format ensures interoperability between AI frameworks. PyTorch’s smooth integration with ONNX allows users to transition their models to other platforms easily.

Captum

As models grow in complexity, interpretability becomes paramount. Captum is PyTorch’s response to this need, offering tools for interpreting and understanding deep learning models.

Ecosystem Tools

Beyond these primary libraries, PyTorch boasts an array of ecosystem tools, such as Albumentations for image augmentation and PyTorch Lightning for organizing training code with less boilerplate, among many more.

Community Contributions

A dynamic community continually contributes extensions, tools, and libraries to the PyTorch ecosystem. These contributions, from domain-specific tools to general-purpose utilities, ensure that PyTorch remains equipped for various challenges.

Education and Resources

PyTorch’s commitment to its users isn’t limited to just tools. An array of tutorials, courses, forums, and documentation ensures that beginners and experts have the resources they need to succeed.

The PyTorch ecosystem is diverse, adaptable, and responsive to the needs of its user base. Together, its tools and libraries form a comprehensive platform where researchers and developers can innovate, experiment, and deploy with minimal friction.

PyTorch is Based on Python – PyTorch is Python

Python’s simplicity and versatility have firmly established it as the language of data science, machine learning, and artificial intelligence. When PyTorch surfaced, its seamless integration with Python was one of its standout features. Let’s find out why this is significant:

Intuitive Syntax

PyTorch code is essentially Pythonic. If you’re familiar with Python, diving into PyTorch becomes significantly easier. This reduces the learning curve and lets developers write neural networks and training loops easily.

Seamless Integration with Python Libraries

PyTorch works well with popular Python libraries like NumPy. You can effortlessly convert PyTorch tensors to NumPy arrays and vice versa, making data manipulation and analysis easy.
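
A minimal sketch of the conversions (the array values are arbitrary). One detail worth knowing: `torch.from_numpy` and `.numpy()` share memory with the source on the CPU, while `torch.tensor(...)` always makes a copy:

```python
import numpy as np
import torch

arr = np.array([1.0, 2.0, 3.0])  # float64 array

# NumPy -> PyTorch: torch.from_numpy shares memory with the array (no copy).
t = torch.from_numpy(arr)
arr[0] = 10.0                    # the change is visible through t as well

# PyTorch -> NumPy: .numpy() also shares memory for CPU tensors.
back = (t * 2).numpy()

# Tensors on a GPU or with requires_grad=True need .detach().cpu() first.
w = torch.ones(3, requires_grad=True)
w_np = w.detach().cpu().numpy()

# torch.tensor(...) always copies, giving an independent tensor.
independent = torch.tensor(arr)
arr[1] = 99.0                    # affects t, but not `independent`
```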

Python’s Rich Ecosystem

Beyond the AI-specific libraries, Python offers a wide array of tools for data wrangling, visualization, and web deployment. This ensures you can manage your entire AI project under the Python umbrella, from data collection to deployment.

Interactive Development with Jupyter

Jupyter notebooks work harmoniously with PyTorch. This allows for interactive experimentation, visualization, and step-by-step debugging, making the development process more iterative and insightful.

Dynamic Computation Graphs

Python’s dynamic nature aligns perfectly with PyTorch’s dynamic computation graphs. This means that the graph is built on-the-fly, offering flexibility and making debugging more intuitive, akin to regular Python debugging.

Broad Community Support

Since Python boasts one of the largest programming communities worldwide, PyTorch users benefit from the shared knowledge, resources, and tools. Solutions to challenges, best practices, or implementations are often just a forum thread or GitHub repository away.

In essence, PyTorch’s creators recognized the strengths of Python and capitalized on them, ensuring that users could leverage the best of both worlds.

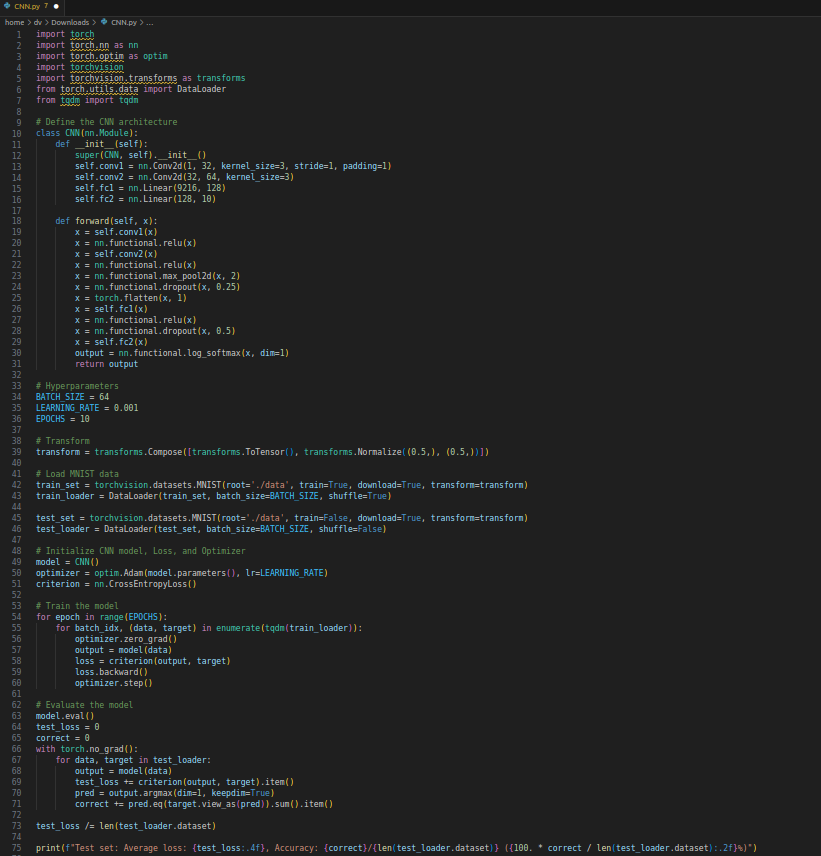

Basics of PyTorch

PyTorch, at its core, is a library designed for deep learning. But before you can train advanced neural networks and transformers, it’s essential to understand the foundational elements that PyTorch offers. Let’s look at some of these basic constructs:

Tensors

Tensors are the fundamental data structures in PyTorch, similar to arrays in NumPy, and can be used on GPUs for faster computation. Whether it’s a scalar, a vector, a matrix, or a higher-dimensional array, it’s a tensor in PyTorch.

Computational Graph

Unlike static-graph frameworks, where you define the entire computation graph before running it, PyTorch builds the graph as your code executes, so you can define and modify it on the go. This is particularly useful for models with dynamic control flow, such as RNNs processing sequences of varying length.
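
To illustrate, here is a sketch with a hypothetical `DynamicDepthNet` whose depth is chosen per call with a plain Python loop, similar in spirit to unrolling an RNN over sequences of different lengths. The layer sizes are arbitrary:

```python
import torch
import torch.nn as nn


class DynamicDepthNet(nn.Module):
    """Hypothetical model that applies its hidden layer a variable number of times."""

    def __init__(self, dim: int = 8) -> None:
        super().__init__()
        self.hidden = nn.Linear(dim, dim)
        self.out = nn.Linear(dim, 1)

    def forward(self, x: torch.Tensor, steps: int) -> torch.Tensor:
        # Ordinary Python control flow: the graph is rebuilt on every call,
        # so `steps` can differ from one forward pass to the next.
        for _ in range(steps):
            x = torch.relu(self.hidden(x))
        return self.out(x)


model = DynamicDepthNet()
x = torch.randn(3, 8)
for steps in (1, 4):
    loss = model(x, steps).pow(2).mean()
    model.zero_grad()
    loss.backward()  # gradients flow through however many steps actually ran
```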

Autograd Module

Autograd is the core PyTorch package that provides automatic differentiation for all operations on tensors. When a tensor’s `.requires_grad` attribute is set to `True`, PyTorch starts tracking every operation on it, so calling `.backward()` on a result computes gradients with respect to that tensor. This becomes extremely handy during the backpropagation step of neural network training.
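
The snippet below (a minimal sketch with made-up values) shows the tracking in action: the result carries a `grad_fn`, `backward()` fills in `.grad`, gradients accumulate until they are reset, and `torch.no_grad()` switches tracking off:

```python
import torch

w = torch.ones(3, requires_grad=True)  # tracked leaf tensor
x = torch.tensor([1.0, 2.0, 3.0])      # plain data, not tracked

y = (w * x).sum()
print(y.grad_fn)  # the recorded operation that produced y (a SumBackward0 node)

y.backward()
print(w.grad)  # dy/dw = x -> tensor([1., 2., 3.])

# Gradients accumulate across backward() calls unless you reset them.
(w * x).sum().backward()
print(w.grad)  # tensor([2., 4., 6.])
w.grad.zero_()

# Inside torch.no_grad(), operations are not tracked (useful for inference).
with torch.no_grad():
    z = w * 2
print(z.requires_grad)  # False
```

The accumulation behavior is why training loops clear gradients before each backward pass.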

Neural Network Module (nn)

PyTorch provides the `torch.nn` module to help users design and train neural networks. It offers predefined layers, activation functions, and loss functions, enabling users to stitch together custom neural architectures easily; the optimization routines themselves live in `torch.optim`.
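
As an illustration, here is a small, hypothetical classifier assembled from predefined `torch.nn` layers, together with a built-in loss function. The layer sizes and targets are arbitrary:

```python
import torch
import torch.nn as nn


class MLP(nn.Module):
    """Hypothetical multilayer perceptron built from predefined torch.nn layers."""

    def __init__(self, in_features: int = 4, hidden: int = 16, num_classes: int = 3) -> None:
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(in_features, hidden),
            nn.ReLU(),
            nn.Linear(hidden, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x)  # raw logits, one score per class


model = MLP()
logits = model(torch.randn(5, 4))             # a batch of 5 samples
targets = torch.tensor([0, 2, 1, 1, 0])       # class index per sample
loss = nn.CrossEntropyLoss()(logits, targets)  # predefined loss from torch.nn
num_params = sum(p.numel() for p in model.parameters())
```

Subclassing `nn.Module` registers every layer assigned in `__init__`, which is what lets `model.parameters()` find all trainable weights.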

Optim Module

Training a neural network requires optimization routines, commonly variants of gradient descent. The `torch.optim` module houses these algorithms, such as SGD, Adam, and RMSProp. Pairing it with the Autograd module makes training models straightforward.
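
A minimal training loop showing how `torch.optim` and Autograd fit together. The synthetic regression data (y ≈ 3x + 1) and the hyperparameters are assumptions chosen for illustration:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Synthetic data for a hypothetical regression task: y = 3x + 1 plus a little noise.
x = torch.linspace(-1, 1, 100).unsqueeze(1)
y = 3 * x + 1 + 0.05 * torch.randn_like(x)

model = nn.Linear(1, 1)
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)  # torch.optim.Adam is a drop-in alternative

for epoch in range(500):
    optimizer.zero_grad()        # clear gradients from the previous step
    loss = loss_fn(model(x), y)  # forward pass
    loss.backward()              # Autograd computes gradients
    optimizer.step()             # the optimizer updates the parameters

print(model.weight.item(), model.bias.item())  # should be close to 3 and 1
```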

Utilities

Beyond these, PyTorch provides plenty of utilities, from data handling to performance profiling, ensuring developers have tools to streamline the AI development process.

With these building blocks, PyTorch provides an environment where beginners can grasp the essentials and experts can conduct intensive deep learning research.

Common PyTorch Modules

PyTorch’s success stems from its specialized modules, simplifying neural network operations:

1. torch.nn

The foundation for building and training neural networks. It offers predefined layers, activation functions, and loss functions.

2. torch.optim

Houses optimization algorithms like SGD and Adam, which are crucial for adjusting network weights during training.

3. torch.autograd

Enables automatic differentiation, tracking operations on tensors, and computing gradients for backpropagation.

4. torchvision

A toolkit for computer vision tasks, providing datasets, models, and image transformation utilities.

Data Loader

PyTorch’s `DataLoader` (from `torch.utils.data`) manages data efficiently, especially in large-scale scenarios:

Batch Processing

Automates mini-batch creation for frequent model weight updates.

Shuffling

Randomizes data order in each epoch, preventing the model from learning unintended patterns.

Parallel Loading

Uses multiple subprocesses for faster data loading, optimizing multicore CPU usage.

Custom Data Handling

The `Dataset` class allows for the integration of custom datasets into the PyTorch training loop.
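
The four features above come together in a short sketch. `SquaresDataset` is a hypothetical custom dataset; `batch_size`, `shuffle`, and `num_workers` are the standard `DataLoader` arguments for batching, shuffling, and parallel loading. With `num_workers > 0` on platforms that spawn worker processes (Windows, macOS), the loading code should sit under an `if __name__ == "__main__":` guard.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class SquaresDataset(Dataset):
    """Hypothetical custom dataset: sample i is (i, i**2) as float tensors."""

    def __init__(self, n: int) -> None:
        self.n = n

    def __len__(self) -> int:
        return self.n

    def __getitem__(self, idx: int) -> tuple[torch.Tensor, torch.Tensor]:
        x = torch.tensor([float(idx)])
        return x, x ** 2


loader = DataLoader(
    SquaresDataset(10),
    batch_size=4,   # mini-batches of 4 samples (the last batch has 2)
    shuffle=True,   # new random order every epoch
    num_workers=0,  # set > 0 to load batches in parallel worker subprocesses
)

for epoch in range(2):
    for xb, yb in loader:
        print(epoch, xb.shape, yb.squeeze(1).tolist())
```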

These tools and features highlight PyTorch’s comprehensive approach to deep learning, catering to model creation and efficient data management.

Dynamic Approach To Graph Computation

PyTorch employs a dynamic computational graph, often referred to as the “define-by-run” approach. This means the graph is constructed on the fly as operations are performed, offering flexibility during model building. It’s particularly beneficial for models where the architecture changes during runtime, such as recursive neural networks.
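
As a sketch of why define-by-run helps with recursive networks, the hypothetical `TreeEncoder` below walks a nested-tuple binary tree with ordinary Python recursion, so each input tree produces a graph of a different shape. The vocabulary size, embedding size, and tree format are assumptions:

```python
import torch
import torch.nn as nn


class TreeEncoder(nn.Module):
    """Hypothetical recursive network that encodes a binary tree bottom-up.

    A tree is either a leaf (an int token id) or a tuple (left, right).
    """

    def __init__(self, vocab_size: int = 10, dim: int = 8) -> None:
        super().__init__()
        self.embed = nn.Embedding(vocab_size, dim)
        self.combine = nn.Linear(2 * dim, dim)

    def forward(self, tree) -> torch.Tensor:
        if isinstance(tree, int):  # leaf: look up its embedding, shape (dim,)
            return self.embed(torch.tensor([tree]))[0]
        left, right = tree  # internal node: encode both children recursively
        children = torch.cat([self(left), self(right)])
        return torch.tanh(self.combine(children))


encoder = TreeEncoder()
# Two inputs with different structures produce two differently shaped graphs.
small = encoder((1, 2))
deep = encoder(((1, 2), (3, (4, 5))))
deep.sum().backward()  # gradients flow through the recursion that actually ran
```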

Integration with Other Platforms

PyTorch seamlessly integrates with popular platforms and libraries, broadening its utility. For instance, the compatibility with ONNX (Open Neural Network Exchange) allows for model exportation to other deep learning frameworks, facilitating smoother collaboration and deployment. Its integration with libraries such as NumPy further enhances PyTorch’s versatility in data handling and mathematical computations.

Why PyTorch is a Research Favorite

The realm of artificial intelligence research is all about experimentation, innovation, and frequent adjustments to models. PyTorch has, over the years, risen as a favorite in this domain, and here’s why:

Intuitive Design: PyTorch is built in a way that mirrors the natural thought process of researchers. The dynamic computation graph and its “define-by-run” approach allow researchers to alter the network on the go. This means that researchers spend less time wrestling with the nuances of the tool and more time focusing on groundbreaking experiments.

Unparalleled Flexibility: Research often involves trying out novel architectures or tweaking existing ones. PyTorch makes it easy to modify standard networks. This flexibility is particularly crucial when dealing with unknown territories in AI research, like testing a new type of layer or experimenting with unconventional neural network designs.

Transparent Operations: One of PyTorch’s strongest points is its transparency. Researchers can easily understand and modify the inner workings of models and operations with a Pythonic syntax and clear documentation. This transparency ensures that there’s clarity about what’s happening behind the scenes when implementing a new algorithm or model from a paper.

Strong Community Support: PyTorch’s rising popularity has led to a vibrant community. This means a wealth of tutorials, forums, and open-source projects that researchers can leverage. Moreover, if a researcher encounters a problem or needs feedback on an idea, they will likely find someone in the PyTorch community who has faced a similar issue or has insights to share.

Direct Link to Production: With tools like TorchServe, researchers can take their models from research to production more seamlessly, bridging the gap between experimentation and real-world application.

Collectively, these attributes make PyTorch not just a tool but a conducive environment where researchers can push the boundaries of what’s possible in AI.

PyTorch Use Cases

As PyTorch has matured and grown in popularity, it’s been adopted across a wide range of domains and applications. Here’s a glimpse of the diverse areas where PyTorch has been making waves:

Computer Vision: PyTorch’s flexibility and dynamic nature have made it a top choice for building, training, and evaluating deep learning models for tasks such as image classification, object detection, image segmentation, and facial recognition.

Natural Language Processing (NLP): Whether for sentiment analysis, machine translation, or text generation, PyTorch has been at the forefront. Its built-in recurrent layers such as LSTM and GRU, along with its Transformer modules, make it well suited to building state-of-the-art NLP models.

Generative Models: For tasks involving Generative Adversarial Networks (GANs) or Variational Autoencoders (VAEs), PyTorch offers the right environment due to its dynamic computation graphs and ease of gradient calculations.

Reinforcement Learning: Researchers and developers working on training agents for games, simulations, or real-world robotics often turn to PyTorch for its ease of use and ability to handle complex neural network architectures.

Audio Processing: From speech recognition to music generation, PyTorch’s comprehensive library support makes it suitable for building models that can understand and generate audio.

Healthcare: Medical image analysis, drug discovery, and predictive analytics are areas within healthcare where PyTorch’s deep learning capabilities are being harnessed.

Autonomous Vehicles: For tasks like perception, planning, and control in self-driving cars, PyTorch has become a popular choice due to its flexibility and GPU-accelerated computation.

Finance: In the financial domain, PyTorch aids in fraud detection, credit scoring, and algorithmic trading, among other tasks, using deep learning models.

Recommendation Systems: Companies that need to provide personalized content or product recommendations to their users often employ PyTorch to build and refine their deep learning-based recommendation engines.

Edge Devices: With tools like TorchScript, PyTorch models can be deployed on mobile and edge devices, allowing for AI-driven functionalities even without constant server connections.

In essence, wherever there’s a need for deep learning, from academia to industries, PyTorch has found its use case, offering tools and libraries that make the development process streamlined and efficient.

Benefits of Using PyTorch

Increased Developer Productivity: PyTorch’s syntax and dynamic computation graph allow for quick prototyping. Its Pythonic nature ensures that developers can seamlessly integrate it with other Python libraries, reducing the time spent setting up.

Easier To Learn And Simpler To Code: For those familiar with Python, diving into PyTorch becomes much smoother. Its straightforward and readable code makes it an excellent choice for beginners in deep learning, ensuring a shorter learning curve.

Simplicity and Transparency: PyTorch is known for its clear and open design. Operations execute as ordinary Python code, with little hidden logic beneath the surface, which makes it easier for users to understand what’s happening under the hood.

Easy To Debug: Unlike deep learning frameworks that rely on static computation graphs, PyTorch executes operations as your code runs, so you can use native Python debugging tools such as breakpoints and print statements. This makes it simpler to identify, understand, and fix issues in the code or model architecture.

Data Parallelism: Handling vast datasets or models can be computationally challenging. PyTorch simplifies this by offering built-in support for data parallelism, allowing models to be easily trained across multiple GPUs. This ensures faster training times and scalability.
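
A minimal sketch using the built-in `nn.DataParallel` wrapper, which replicates the model across the visible GPUs and splits each batch among them; on a machine with one GPU or none, the code simply runs the unwrapped model. The model architecture is arbitrary. For serious multi-GPU or multi-machine work, the PyTorch documentation recommends `torch.nn.parallel.DistributedDataParallel` instead, which needs a process-group setup not shown here.

```python
import torch
import torch.nn as nn

model = nn.Sequential(nn.Linear(32, 64), nn.ReLU(), nn.Linear(64, 10))

if torch.cuda.device_count() > 1:
    # Replicate the model on every visible GPU and split each input batch across them.
    model = nn.DataParallel(model)

device = "cuda" if torch.cuda.is_available() else "cpu"
model = model.to(device)

batch = torch.randn(128, 32, device=device)
out = model(batch)  # per-GPU outputs are gathered back into one (128, 10) tensor
```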

Areas in Which PyTorch Shines Over TensorFlow

Dynamic vs. Static Computation Graph: One of the fundamental distinctions between PyTorch and TensorFlow (before the introduction of TensorFlow 2.0) is the dynamic computation graph in PyTorch compared to the static one in TensorFlow. This dynamic nature, also known as define-by-run, allows developers to modify the graph on-the-go. It offers a more intuitive and flexible environment, especially beneficial for specific tasks like dynamic input lengths in NLP or reinforcement learning.

Debugging: PyTorch’s dynamic computation graph makes debugging a more native experience. You can easily use Python’s debugging tools, making it more straightforward to diagnose and fix issues.

Research Friendliness: While both frameworks are used extensively in research, the flexibility offered by PyTorch, combined with its Pythonic nature, makes it a favorite for many researchers. They can easily tweak models, try new architectures, and experiment without much boilerplate.

Performance Enhancements

PyTorch has continually evolved and improved since its inception. The framework has received regular updates targeting performance optimizations. Some noteworthy enhancements include:

TorchScript: With TorchScript, PyTorch models can be optimized and run independently of the Python runtime, which can speed up inference and simplifies deployment.

Native ONNX Support: PyTorch has native support for ONNX (Open Neural Network Exchange), a platform-agnostic format to export models. This allows for efficient deployment on various platforms while retaining optimizations.

Enhanced CUDA Support: PyTorch’s integration with CUDA ensures that computations are rapidly performed on NVIDIA GPUs. The framework is continually optimized for the latest GPU architectures, ensuring that models run at their maximum potential speed.

Distributed Training: PyTorch has made substantial improvements in its distributed training capabilities, allowing models to be trained on multiple GPUs and even across several machines. This speeds up the training process and supports training larger models with vast datasets.

Collectively, these performance enhancements ensure that PyTorch remains competitive, not just as a research tool but also in production environments.

How to Get Started with PyTorch? – Learn PyTorch in 2025

Diving into PyTorch is an exciting journey, and the good news is that the community and resources available make it a smooth experience. If you’re eager to begin, here’s a structured path:

Official Documentation: Begin with PyTorch’s website. It provides an array of resources, including installation guidelines, tutorials, and comprehensive documentation. Make sure to install the version that’s compatible with your system and the CUDA version (if you’re planning on using GPU acceleration).
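
Once installed (using the command the PyTorch website generates for your OS, package manager, and CUDA version), a quick check like the one below confirms which build you have and whether PyTorch can see a GPU:

```python
import torch

print("PyTorch version:", torch.__version__)
print("CUDA available:", torch.cuda.is_available())

if torch.cuda.is_available():
    print("GPU:", torch.cuda.get_device_name(0))
    print("CUDA version PyTorch was built with:", torch.version.cuda)
```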

Tutorials: PyTorch’s official website hosts a series of beginner-friendly tutorials. They cover a range of topics, from the basics to more advanced applications, helping you grasp the fundamentals of tensor operations, autograd, and neural network definitions.

Online Courses: The best resource is the “Free PyTorch Bootcamp – For Beginners” series on the LearnOpenCV website. It is helpful for people who want to get started with deep learning and PyTorch.

Books: Several well-reviewed books focus on deep learning with PyTorch. Some popular options include “Deep Learning with PyTorch” by Eli Stevens and “Programming PyTorch for Deep Learning” by Ian Pointer.

Community: Engage with the PyTorch community. Platforms like the PyTorch Discussion Forum, Stack Overflow, and Reddit have active PyTorch communities. They can be invaluable for troubleshooting, understanding best practices, and keeping up with the latest updates.

Project Building: Building projects is the best way to solidify your understanding. Start small by replicating classical machine learning tasks using PyTorch, and then graduate to more complex endeavors as your confidence grows.

Advanced Learning: Once you’re comfortable with the basics, delve deeper. Explore topics like TorchScript for production-level code, distributed training, and the integration of PyTorch with other platforms and libraries.

Stay Updated: The world of AI and deep learning is ever-evolving. Subscribe to relevant newsletters, follow influential figures in the PyTorch community on social media, and attend webinars or conferences.

Conclusion – PyTorch is the Tool to Master in 2025

Learning PyTorch in 2025 is more than just acquiring a new skill; it’s about positioning yourself at the forefront of machine learning and artificial intelligence innovation. PyTorch offers unparalleled advantages with its Python-based ecosystem, dynamic computation capabilities, and a strong focus on research and development. Its growing community, seamless integration with other platforms, and performance enhancements make it a compelling choice over its competitors.

Whether you’re a seasoned developer, a researcher, or someone looking to break into the field, now is the perfect time to embrace PyTorch. Take the first step, and unlock the world of possibilities this powerful framework offers.