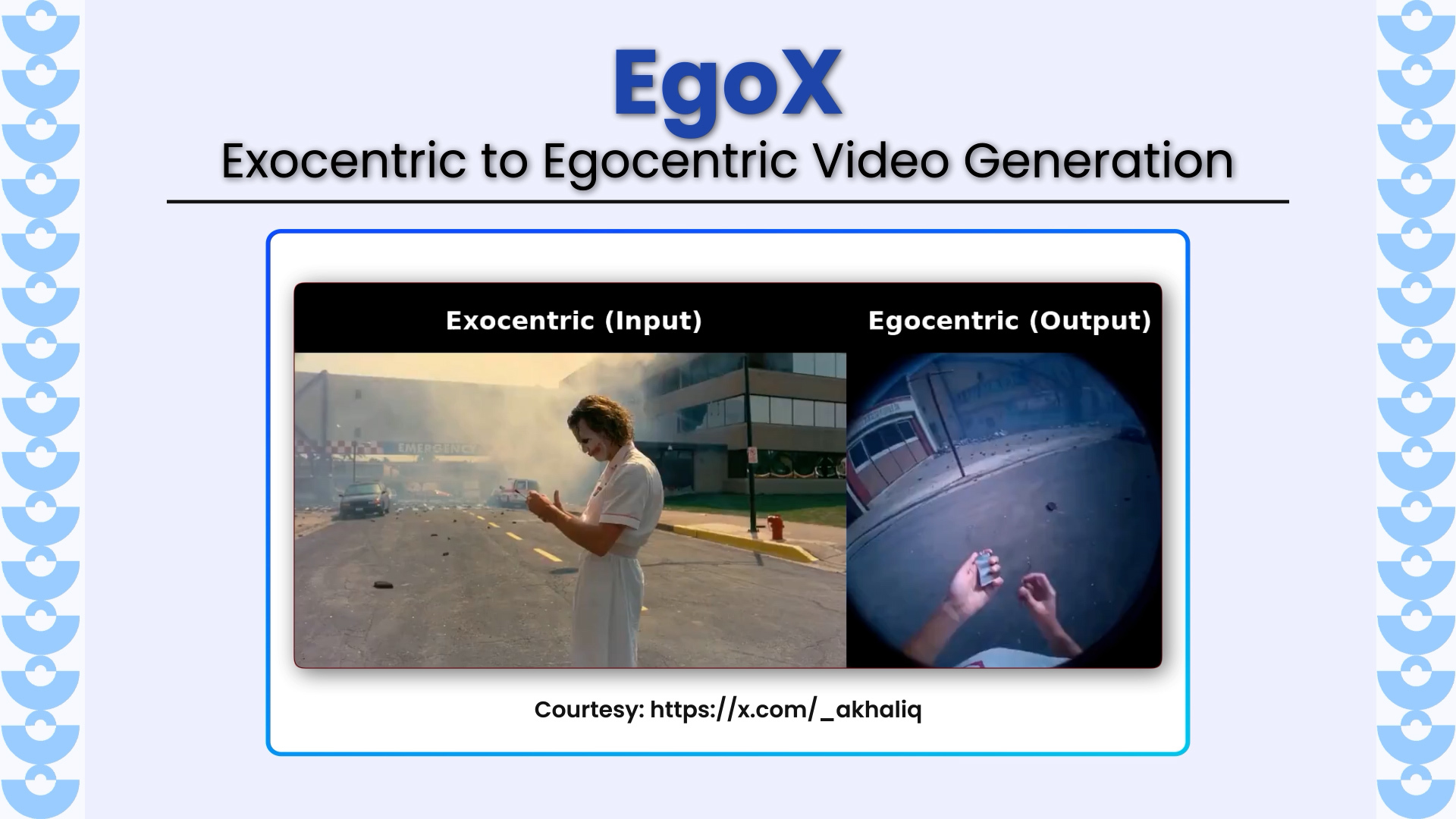

EgoX introduces a novel framework for translating third-person (exocentric) videos into realistic first-person (egocentric) videos using only a single input video. The work tackles a highly challenging problem of extreme viewpoint transformation with minimal view overlap, leveraging pretrained video diffusion models and explicit geometric reasoning to generate coherent, high-fidelity egocentric videos.

Key Highlights

- Single Exocentric → Egocentric Video Generation: EgoX generates first-person videos directly from a single third-person video, eliminating the need for multiple camera views or an initial egocentric frame required by prior approaches.

- Unified Conditioning with Exocentric + Egocentric Priors: The method introduces a unified conditioning strategy that combines exocentric video information and rendered egocentric priors using width-wise and channel-wise concatenation in latent space, enabling effective global context transfer and pixel-aligned guidance.

- Geometry-Guided Self-Attention: A geometry-guided self-attention mechanism incorporates 3D directional cues derived from point clouds, allowing the model to focus on view-relevant regions while suppressing unrelated or misleading content.

- Lightweight LoRA Adaptation on Pretrained Video Diffusion Models: EgoX leverages large-scale pretrained video diffusion models with lightweight LoRA tuning, preserving spatio-temporal knowledge while enabling robust exocentric-to-egocentric generation without heavy retraining.

- Clean Latent Conditioning for Stable Generation: By conditioning on clean exocentric latents throughout the denoising process, the model maintains fine-grained details and avoids degradation caused by noisy conditioning signals.

State-of-the-Art Results

EgoX achieves significant improvements over prior methods across:

Image quality metrics (PSNR, SSIM, LPIPS, CLIP-I)

Object-level geometric consistency (IoU, contour accuracy, location error)

Video-level temporal quality (FVD, motion smoothness, flicker reduction)

It demonstrates strong generalization to unseen scenes and challenging in-the-wild videos.

Why It Matters

EgoX enables realistic first-person video synthesis from existing third-person footage, opening new possibilities for immersive media, robotics, AR/VR, and human-centric AI. It represents a step toward scalable, geometry-aware video generation that bridges viewpoint understanding and high-fidelity diffusion-based synthesis.

Explore More

Paper: arXiv:2512.08269

Github Repository: https://github.com/DAVIAN-Robotics/EgoX

LearnOpenCV OpenCV-related blog posts:

FramePack: https://learnopencv.com/framepack-video-diffusion/

Video Generation: Evolution from VDM to Veo2: https://learnopencv.com/video-generation-models/

5K+ Learners

Join Free VLM Bootcamp3 Hours of Learning