Introduction

In our earlier blogs, we discussed the best institutes across the world for computer vision research. In this fun read, we’ll look at the different stages of Computer Vision research and how you can go about publishing your research work. Let us delve into them now.



Different Stages of Computer Vision Research

Computer vision research can be divided into several stages, each building on the previous one. Let us look at them in detail.

Identification of Problem Statement

Computer Vision research starts with identifying the problem statement. It is a crucial step in defining the scope and goals of a research project. It involves clearly understanding the specific challenge or task the researchers aim to address using computer vision techniques. Here are the steps involved in identifying the problem statement in computer vision research:

- Problem Statement Analysis: The first step is to pinpoint the specific application domain within computer vision. This could be related to object recognition in autonomous vehicles or medical image analysis for disease detection.

- Defining the problem: Next, we define the precise problem we want to solve within that domain, like classifying images of animals or diagnosing diseases from X-rays.

- Understanding the objectives: We need to understand the research objectives and outline what we intend to achieve through this project. For instance, improving classification accuracy or reducing false positives in a medical imaging system.

- Data availability: Next, we analyze the availability of data for our project. We check whether existing datasets suit our task or whether we need to gather our own data, such as images of specific objects or medical cases.

- Review: Conduct a thorough review of existing research and the latest methodologies in the field. This will help you gain insights into the current state-of-the-art techniques and the challenges others have faced in similar projects.

- Question formulation: Once we review the work, we can formulate research questions to guide our experiments. These questions could address specific aspects of our computer vision problem and help better structure our research.

- Metrics: Next, we define the evaluation metrics that we’ll use to measure the performance of our vision system. Some common metrics include accuracy, precision, recall, and F1-score.

- Impact: Highlight how solving the problem will make a difference in the real world, for instance, improving road safety through better object recognition or enabling earlier treatment through better medical diagnoses.

- Research Outline: Finally, outline the research plan and detail the methodology for data collection, model development, and evaluation. A structured outline keeps the research project on track.

Let us move to the next step, data collection and creation.

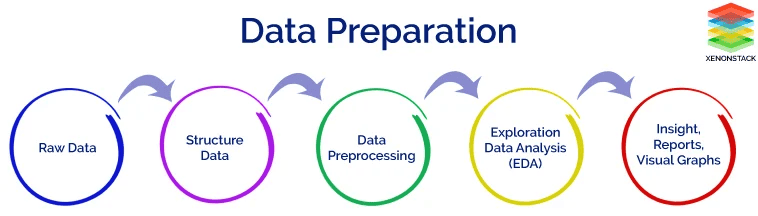

Dataset Collection and Creation

Creating and gathering datasets is one of the key building blocks of computer vision research, since the algorithms and models in vision systems are trained and evaluated on these datasets. Let us see how this is done.

- First, we need to know what we are trying to solve. For instance, are we training models to recognize dogs in photos or to identify anomalies in medical images?

- Now, we’ll need images or videos. Depending on the research needs, we can find them on public datasets or collect our own.

- Next, we annotate the data. For instance, if you’re teaching a computer to spot dogs in pictures, you’ll draw boxes around the dogs and label them, “These are dogs!”

- Raw data can be messy. We may need to resize images, adjust colors, or add more examples to ensure our dataset is clean and complete.

- Divide the dataset into three parts:

  - one part for training your model

  - one part for validation, used to tune hyperparameters

  - one part for testing how well your model works

- Next, ensure the dataset fairly represents the real world and doesn’t favor one group or category too much.
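As a minimal sketch of the three-way split above, the snippet below shuffles integer sample indices with NumPy and cuts them into training, validation, and test sets. The 70/15/15 ratio and the helper name `split_dataset` are illustrative assumptions, not a standard.

```python
import numpy as np

def split_dataset(n_samples, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle sample indices and split them into train/validation/test index arrays.

    Whatever remains after the train and validation fractions becomes the test set.
    """
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)  # shuffle so ordering (date, source) does not leak into one split
    n_train = round(n_samples * train_frac)
    n_val = round(n_samples * val_frac)
    train_idx = indices[:n_train]
    val_idx = indices[n_train:n_train + n_val]
    test_idx = indices[n_train + n_val:]
    return train_idx, val_idx, test_idx

train_idx, val_idx, test_idx = split_dataset(1000)
print(len(train_idx), len(val_idx), len(test_idx))  # 700 150 150
```

Shuffling before splitting matters because collected data is often ordered by date, source, or class, and an unshuffled cut would give each split a different distribution.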

Researchers can also share their datasets and findings with others for feedback and improvements. Dataset collection and creation are vital in computer vision research.

Exploratory Data Analysis

Exploratory Data Analysis (EDA) is a preliminary analysis of a dataset that answers initial questions and guides the modeling process, for instance, by looking for patterns across different classes. It is used not only by Computer Vision Engineers but also by Data Scientists to ensure that the data they work with is aligned with business goals or outcomes. For image datasets, EDA is used to spot anomalies, understand the data distribution, and gain insights that inform model training. Let us look at the role of EDA in model development.

- With EDA, one can develop data preprocessing pipelines and choose data augmentation strategies.

- We can analyze how the findings from EDA affect the choice of model architecture, for instance, how many convolutional layers are needed or what input image size to use.

- EDA is also crucial for advanced Computer Vision tasks like object detection, segmentation, and image generation.

Now let us dive into the specifics of EDA methods and preparing image datasets for model development.

Visualization

- Sample image visualization involves displaying a random set of images from the dataset. This fundamental step gives us a sense of properties such as lighting conditions or variations in image quality, from which we can infer the visual diversity of the dataset and any challenges it poses.

- Analyzing the distribution of pixel intensities offers insights into brightness and contrast variations across the dataset and shows whether image enhancement techniques are needed.

- Next, creating histograms for different color channels gives us a better understanding of the color distribution of the dataset. This is a crucial step for tasks such as image classification.
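The intensity and color-channel checks above can be sketched in NumPy as follows. This assumes the images are already loaded as a `uint8` array of shape `(N, H, W, 3)`; random pixels stand in for a real dataset, and the helper names and the 16-bin choice are our own. Brightness is approximated as the mean of the channel average and contrast as its standard deviation, which is one simple convention among several.

```python
import numpy as np

def channel_histograms(images, bins=16):
    """Normalized intensity histogram per color channel.

    images: uint8 array of shape (N, H, W, C). Returns shape (C, bins); each row sums to 1.
    """
    hists = []
    for c in range(images.shape[-1]):
        counts, _ = np.histogram(images[..., c], bins=bins, range=(0, 256))
        hists.append(counts / counts.sum())
    return np.stack(hists)

def brightness_contrast(images):
    """Per-image mean intensity (brightness) and standard deviation (contrast)."""
    gray = images.astype(np.float64).mean(axis=-1)  # simple channel average as a grayscale proxy
    return gray.mean(axis=(1, 2)), gray.std(axis=(1, 2))

rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(8, 32, 32, 3), dtype=np.uint8)  # stand-in for real images
hists = channel_histograms(images)
brightness, contrast = brightness_contrast(images)
```

Plotting `hists` per channel, or sorting images by `brightness`, quickly surfaces color casts and unusually dark or washed-out images.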

Image Property Analysis

- Another crucial part is understanding the resolution and the aspect ratio of images in the dataset. It helps make decisions like resizing the image or normalizing the aspect ratio, which is crucial in maintaining consistency in input data for neural networks.

- In datasets with annotations, analyzing the size and distribution of annotated objects is insightful. It reveals the scale of the objects and influences the design of layers in the neural network.
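A minimal sketch of the image property analysis above, assuming image sizes are available as `(width, height)` pairs and boxes as `(x_min, y_min, x_max, y_max)`. The metadata values here are hypothetical and only illustrate the calculations.

```python
import numpy as np

# Hypothetical metadata: (width, height) per image and (x_min, y_min, x_max, y_max) per box.
image_sizes = np.array([(640, 480), (1280, 720), (640, 480), (800, 800)])
boxes = np.array([(10, 20, 110, 220), (300, 300, 340, 330), (0, 0, 640, 480)])

def size_summary(sizes):
    """Aspect ratio per image and a count of each distinct resolution."""
    aspect = sizes[:, 0] / sizes[:, 1]
    resolutions, counts = np.unique(sizes, axis=0, return_counts=True)
    return aspect, dict(zip(map(tuple, resolutions.tolist()), counts.tolist()))

def box_areas(boxes):
    """Area in pixels of each annotated bounding box."""
    widths = boxes[:, 2] - boxes[:, 0]
    heights = boxes[:, 3] - boxes[:, 1]
    return widths * heights

aspect, resolution_counts = size_summary(image_sizes)
areas = box_areas(boxes)
```

Many distinct resolutions suggest a resizing or padding step is needed, and a wide spread in `areas` hints that the model must handle objects at very different scales.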

Correlation Analysis

- For high-dimensional image data, a more advanced EDA step is analyzing the relationships between different features. This aids dimensionality reduction and feature selection.

- Next, it is crucial to understand the spatial correlations within images, like the relationship between different regions in an image. It helps in the development of spatial hierarchies in neural networks.
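Both correlation ideas above can be approximated with plain NumPy. The sketch below, under our own assumptions, uses principal component analysis (via SVD) on flattened grayscale images of shape `(N, H, W)` to see how much variance a few components capture, and measures spatial correlation as the correlation between horizontally adjacent pixels. The synthetic data is hypothetical and built so that one component dominates.

```python
import numpy as np

def pca_explained_variance(images):
    """Fraction of total variance explained by each principal component of flattened images."""
    X = images.reshape(len(images), -1).astype(np.float64)
    X -= X.mean(axis=0)                        # center each pixel feature
    s = np.linalg.svd(X, compute_uv=False)     # singular values of the centered data
    var = s ** 2
    return var / var.sum()

def neighbor_correlation(images):
    """Correlation between horizontally adjacent pixels in (N, H, W) grayscale images."""
    left = images[..., :, :-1].ravel()
    right = images[..., :, 1:].ravel()
    return np.corrcoef(left, right)[0, 1]

rng = np.random.default_rng(0)
base = rng.normal(size=(1, 16, 16))
# Hypothetical data: 50 scaled copies of one pattern plus small noise,
# so nearly all variance should fall on the first component.
images = rng.normal(size=(50, 1, 1)) * base + 0.01 * rng.normal(size=(50, 16, 16))
ratios = pca_explained_variance(images)
```

If a handful of components explain most of the variance, dimensionality reduction is promising; high neighbor correlation is the kind of local structure convolutional layers are designed to exploit.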

Class Distribution Analysis

- EDA is important for understanding imbalances in the class distribution. This is key in classification tasks, where imbalanced data can lead to biased models.

- Once the imbalances are identified, we can adopt techniques like undersampling majority classes or oversampling minority classes during model training.
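Here is a minimal NumPy sketch of counting classes and applying random oversampling, one of the two techniques mentioned above. It assumes integer class labels; `class_counts` and `oversample_indices` are hypothetical helper names. Undersampling would be the mirror image: draw a subset of each majority class down to the smallest class size.

```python
import numpy as np

def class_counts(labels):
    """Number of samples per class label."""
    classes, counts = np.unique(labels, return_counts=True)
    return dict(zip(classes.tolist(), counts.tolist()))

def oversample_indices(labels, seed=0):
    """Indices in which every class appears as often as the largest class.

    Minority-class samples are re-drawn at random with replacement.
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    classes, counts = np.unique(labels, return_counts=True)
    target = counts.max()
    out = []
    for c in classes:
        idx = np.flatnonzero(labels == c)
        extra = rng.choice(idx, size=target - len(idx), replace=True)
        out.append(np.concatenate([idx, extra]))
    return rng.permutation(np.concatenate(out))

labels = np.array([0] * 90 + [1] * 10)  # a 90:10 imbalanced dataset
counts = class_counts(labels)
balanced_idx = oversample_indices(labels)
```

Oversampling should be applied to the training split only; duplicating samples before splitting would leak copies into validation.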

Geometric Analysis

- Understanding geometric properties like edges, shapes, and textures in images offers insights into the features important for the problem at hand. We can make informed decisions on selecting specific filters or layers in the network architecture.

- It’s important to understand how different morphological transformations affect images for segmentation and object detection tasks.
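To see how a morphological transformation changes a segmentation mask, the sketch below implements binary erosion, dilation, and opening with a 3x3 square structuring element in NumPy. The toy mask, the zero padding at the border, and the helper names are our assumptions; libraries such as OpenCV or SciPy provide optimized versions.

```python
import numpy as np

def _neighborhoods(mask):
    """Stack the 3x3 neighborhood of every pixel (pixels outside the image count as False)."""
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    h, w = mask.shape
    return np.stack([padded[i:i + h, j:j + w] for i in range(3) for j in range(3)])

def dilate(mask):
    """A pixel becomes True if any pixel in its neighborhood is True."""
    return _neighborhoods(mask).any(axis=0)

def erode(mask):
    """A pixel stays True only if its whole neighborhood is True."""
    return _neighborhoods(mask).all(axis=0)

def opening(mask):
    """Erosion followed by dilation: removes specks smaller than the 3x3 element."""
    return dilate(erode(mask))

mask = np.zeros((7, 7), dtype=bool)
mask[1:5, 1:5] = True   # a 4x4 object
mask[6, 6] = True       # an isolated noise pixel
cleaned = opening(mask)
```

Here opening removes the isolated noise pixel while restoring the 4x4 object, which is the kind of effect worth checking before using such operations to post-process masks.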

Sequential Analysis

Sequential analysis applies to video data.

- For instance, analyzing changes between frames can offer information like motion, temporal consistency, or the need for temporal modeling in video datasets or video sequences.

- Identifying temporal variations and scene changes gives us insights into the dynamics within the video data that are crucial for tasks like event detection or action recognition.
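Frame differencing is one simple way to quantify changes between frames. The sketch below assumes a clip already decoded into a NumPy array of shape `(T, H, W)` or `(T, H, W, C)`, and flags scene cuts with a fixed threshold. The threshold rule and the synthetic clip are illustrative assumptions, not a standard shot-detection method.

```python
import numpy as np

def frame_differences(frames):
    """Mean absolute difference between consecutive frames (T - 1 values)."""
    f = frames.astype(np.float64)  # avoid uint8 wrap-around when subtracting
    return np.abs(f[1:] - f[:-1]).reshape(len(f) - 1, -1).mean(axis=1)

def scene_cuts(frames, threshold):
    """Indices t where frame t differs sharply from frame t - 1."""
    diffs = frame_differences(frames)
    return (np.flatnonzero(diffs > threshold) + 1).tolist()

# Synthetic clip: 5 dark frames followed by 5 bright frames (a hard cut at frame 5).
frames = np.concatenate([np.full((5, 4, 4), 10, np.uint8), np.full((5, 4, 4), 200, np.uint8)])
print(scene_cuts(frames, threshold=50))  # [5]
```

Plotting `frame_differences` over a whole video gives a quick picture of motion levels and scene changes before choosing a temporal model.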

Now that we’ve discussed Exploratory Data Analysis and some of its techniques, let us move to the next stage in Computer Vision research: defining the model architecture.

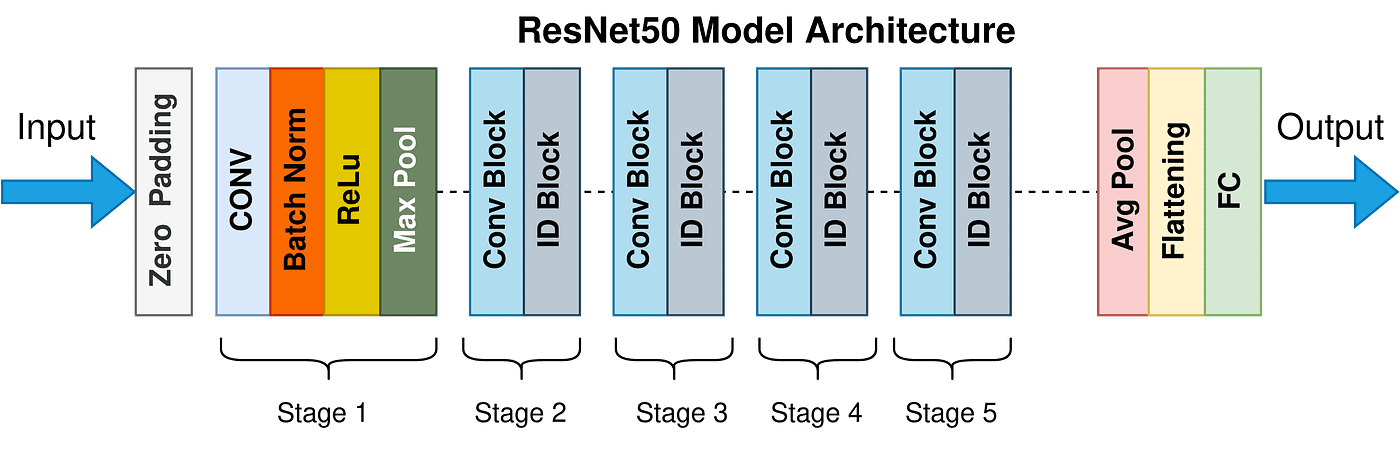

Defining Model Architecture

Defining a model architecture is a critical component of research in computer vision, as it lays the foundation for how a machine learning model will perceive, process, and interpret visual data. The architecture directly affects the model’s ability to learn from visual data and perform tasks like object detection or semantic segmentation.

Model architecture in computer vision refers to the structural design of an artificial neural network. The architecture defines how the model processes input images, extracts features, and makes predictions and classifications.

What are the components of a model architecture? Let’s explore them.

Input Layer

This is where the model receives the image data, usually as a multi-dimensional array. For color images, this is typically a 3D array of height, width, and three color channels holding the RGB values. Preprocessing steps like normalization are applied at this stage.

Convolutional Layers

These layers apply a set of filters to the input. Each filter slides across the width and height of the input volume, computing the dot product between the filter’s entries and the input at every position and producing a 2D activation map per filter. Because convolution preserves the spatial relationships between pixels, stacked convolutional layers capture spatial hierarchies in the image.
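The sliding dot product described above can be written out directly. This is a minimal, unoptimized NumPy sketch of a convolutional layer's forward pass without bias, stride, or padding; like most deep learning libraries, it computes cross-correlation (the filter is not flipped). The shapes and toy filters are assumptions for illustration.

```python
import numpy as np

def conv2d(image, filters):
    """Valid-mode convolution layer forward pass (cross-correlation, no bias).

    image:   (H, W, C_in) input volume
    filters: (K, kh, kw, C_in) bank of K filters
    returns: (H - kh + 1, W - kw + 1, K), one 2D activation map per filter
    """
    H, W, _ = image.shape
    K, kh, kw, _ = filters.shape
    out = np.zeros((H - kh + 1, W - kw + 1, K))
    for y in range(H - kh + 1):
        for x in range(W - kw + 1):
            patch = image[y:y + kh, x:x + kw, :]
            # Dot product between this patch and every filter at once.
            out[y, x] = np.tensordot(filters, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out

image = np.ones((5, 5, 3))
filters = np.stack([np.ones((3, 3, 3)), np.zeros((3, 3, 3))])  # two toy 3x3x3 filters
maps = conv2d(image, filters)
```

Each output position depends only on a small local patch, which is how the layer preserves spatial relationships; frameworks implement the same operation with far faster kernels.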

Activation Functions

Activation functions introduce non-linearity, which allows networks to learn more complex representations. For instance, the ReLU (Rectified Linear Unit) function, f(x) = max(0, x), keeps positive values unchanged and sets all negative values to zero. Other common functions include sigmoid and tanh.
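The three activation functions named above are one-liners in NumPy; this sketch simply applies them element-wise to a small array so their ranges can be compared.

```python
import numpy as np

def relu(x):
    return np.maximum(0, x)          # f(x) = max(0, x): negatives become 0

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))  # squashes values into (0, 1)

def tanh(x):
    return np.tanh(x)                # squashes values into (-1, 1)

x = np.array([-2.0, -0.5, 0.0, 0.5, 2.0])
activations = {"relu": relu(x), "sigmoid": sigmoid(x), "tanh": tanh(x)}
```

ReLU is cheap and does not saturate for positive inputs, which is one reason it is the default in most CNNs, while sigmoid and tanh saturate at both ends.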

Pooling Layers

These layers perform down-sampling along the spatial dimensions (width, height), reducing the number of parameters and computations in the network. Max pooling, a common approach, takes the maximum value within each filter window. This makes the network somewhat invariant to small translations: a feature is still detected when it shifts slightly in the input.
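A minimal NumPy sketch of 2x2 max pooling with stride 2 on an `(H, W, C)` feature map. Cropping odd edges instead of padding is a simplifying assumption of this sketch; frameworks expose padding and stride options.

```python
import numpy as np

def max_pool2d(x, size=2):
    """Non-overlapping max pooling over the spatial axes of an (H, W, C) feature map.

    H and W are cropped to multiples of `size` (a simplification; real layers offer padding).
    """
    H, W, C = x.shape
    H2, W2 = H // size, W // size
    x = x[:H2 * size, :W2 * size]
    # Split each spatial axis into (blocks, size) and take the max inside each block.
    return x.reshape(H2, size, W2, size, C).max(axis=(1, 3))

fmap = np.arange(16, dtype=float).reshape(4, 4, 1)
pooled = max_pool2d(fmap)
```

The 4x4 map shrinks to 2x2, a quarter of the values, and each output keeps only the strongest response in its window.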

Fully Connected Layers

Here, every neuron in one layer is connected to every neuron in the next layer. In a CNN, these dense layers perform the high-level reasoning. They are typically positioned near the end of the network: the output of the convolutional and pooling layers is flattened into a single feature vector, which the fully connected layers use for the final classification or regression.

Dropout Layers

Dropout is a regularization technique in which randomly selected neurons are ignored during training. The contribution of these neurons to downstream activations is temporarily removed on the forward pass, and no weight updates are applied to them on the backward pass. This helps prevent overfitting. At inference time, all neurons are used.
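The sketch below shows "inverted" dropout, a common way to implement the idea in NumPy: surviving units are scaled by `1 / (1 - p)` during training so the expected activation is unchanged and the layer can simply pass inputs through at inference. The function signature is our own assumption.

```python
import numpy as np

def dropout(activations, p, training, rng):
    """Inverted dropout: zero each unit with probability p during training.

    Survivors are scaled by 1 / (1 - p) so the expected activation stays the same;
    at inference time the input is returned unchanged.
    """
    if not training or p == 0.0:
        return activations
    keep_mask = rng.random(activations.shape) >= p
    return activations * keep_mask / (1.0 - p)

rng = np.random.default_rng(0)
a = np.ones(10_000)
dropped = dropout(a, p=0.5, training=True, rng=rng)
```

Because dropped units output zero, they also receive zero gradient on the backward pass, which matches the behavior described above.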

Batch Normalization

In batch normalization, the output of a previous layer is normalized by subtracting the batch mean and dividing by the batch standard deviation; the result is then scaled and shifted by two learnable parameters. This technique helps stabilize the learning process and can significantly reduce the number of training epochs needed to train deep networks.
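A minimal NumPy sketch of the training-time batch normalization forward pass for a `(batch, features)` array, including the learnable scale `gamma` and shift `beta`. The small `eps` avoids division by zero. The running statistics used at inference time are omitted here.

```python
import numpy as np

def batch_norm_forward(x, gamma, beta, eps=1e-5):
    """Training-time batch normalization over the batch axis of a (batch, features) array."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)  # zero mean, unit variance per feature
    return gamma * x_hat + beta              # learnable scale and shift

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))
y = batch_norm_forward(x, gamma=np.ones(4), beta=np.zeros(4))
```

With `gamma = 1` and `beta = 0`, every feature comes out with roughly zero mean and unit standard deviation; during training the network can learn other values if a different scale works better.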

Loss Function

The difference between the expected outcomes and the predictions made by the model is quantified by the loss function. Cross-entropy for classification tasks and mean squared error for regression tasks are some of the common loss functions in computer vision.
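Both loss functions mentioned above can be sketched in a few lines of NumPy. The cross-entropy here assumes the model already outputs class probabilities of shape `(N, K)` and integer labels of shape `(N,)`; the clipping constant is a common safeguard against `log(0)`.

```python
import numpy as np

def cross_entropy(probs, labels, eps=1e-12):
    """Mean cross-entropy between predicted probabilities (N, K) and integer labels (N,)."""
    picked = probs[np.arange(len(labels)), labels]  # probability assigned to the true class
    return float(-np.mean(np.log(np.clip(picked, eps, 1.0))))

def mean_squared_error(pred, target):
    """Mean of squared differences, the usual regression loss."""
    return float(np.mean((np.asarray(pred) - np.asarray(target)) ** 2))

probs = np.array([[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]])
labels = np.array([0, 1])
ce = cross_entropy(probs, labels)                  # -(ln 0.7 + ln 0.8) / 2, about 0.29
mse = mean_squared_error([2.0, 3.0], [1.0, 5.0])   # (1 + 4) / 2 = 2.5
```

Cross-entropy grows sharply as the probability on the true class approaches zero, which strongly penalizes confident mistakes.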

Optimizer

The optimizer is an algorithm used to minimize the loss function. It updates the network’s weights based on the gradient of the loss. Common optimizers include Stochastic Gradient Descent (SGD), Adam, and RMSprop. Backpropagation computes the gradient of the loss with respect to each weight, and the optimizer uses these gradients to decide in which direction, and by how much, each weight is adjusted.

Output Layer

This is the final layer, where the model’s output is produced. The output layer typically includes a softmax function for classification tasks that converts the outputs to probability values for each class. For regression tasks, the output layer may have a single neuron.
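A minimal NumPy sketch of the softmax used in classification output layers. Subtracting each row's maximum before exponentiating does not change the result mathematically but prevents overflow for large scores.

```python
import numpy as np

def softmax(logits):
    """Convert raw scores (N, K) into class probabilities that sum to 1 per row."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

logits = np.array([[2.0, 1.0, 0.1], [1000.0, 1000.0, 1000.0]])
probs = softmax(logits)
```

Without the shift, `np.exp(1000.0)` would overflow to infinity; with it, the second row correctly becomes three equal probabilities.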

Frameworks like TensorFlow, PyTorch, and Keras are widely used for designing and implementing model architectures. They offer pre-built layers, training routines, and easy integration with hardware accelerators.

Defining a model architecture requires a good grasp of both the theoretical aspects of neural networks and the practical aspects of the specific task.

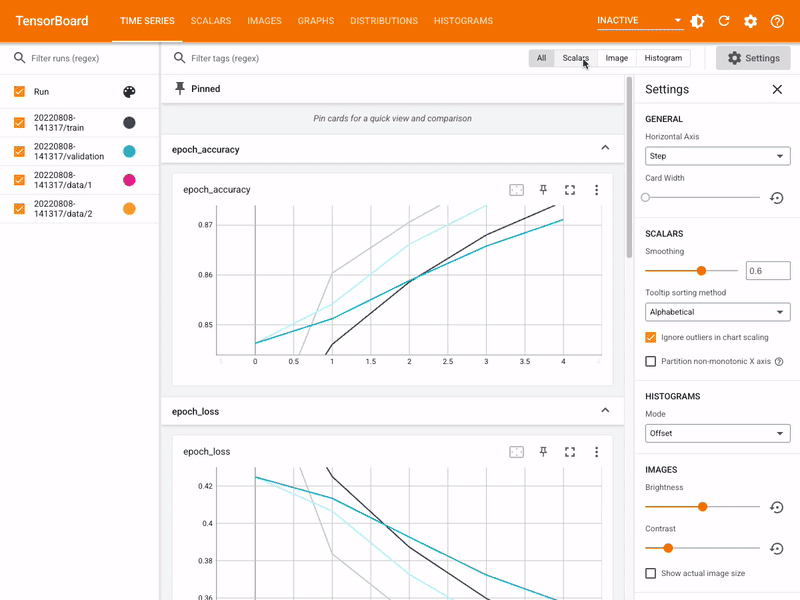

Training and Validation

Training and validation are crucial in developing a model. Training is where the model learns its parameters, and validation is where its performance is evaluated, for tasks such as object detection or image classification.

Training

In this phase, the model is represented as a neural network that learns to recognize image patterns and features by altering its internal parameters iteratively. These parameters are weights and biases related to the network’s layers. Training is key for extracting meaningful features from raw visual data. Let us see how one can go about training a model.

- Acquiring a dataset is the first step. It could be in the form of images or videos for the model to learn from. For robustness, the data should cover various environmental conditions, variations, and object classes.

- The next step is data preprocessing. This involves resizing, normalization, and augmentation.

  - Resizing gives all input data the same dimensions for batch processing.

  - Normalization standardizes pixel values to zero mean and unit variance, aiding convergence.

  - Augmentation applies random transformations to artificially increase the size of the dataset, improving the model’s ability to generalize.

- Once data preprocessing is done, we must choose the appropriate neural network architecture catering to the specific vision task. For instance, CNNs are widely used for image-related tasks.

- Next, we initialize the model parameters, usually weights and biases, using random values or pre-trained weights from a model trained on a large dataset (such as ImageNet). This transfer learning can significantly improve performance, especially when data is limited.

- Then we choose an optimization algorithm, such as stochastic gradient descent (SGD) or RMSprop, to adjust the parameters iteratively. Backpropagation computes the gradients of the loss with respect to the model’s parameters, which the optimizer uses to update them.

- Once the optimizer is chosen, the training data is passed through the network in mini-batches, computing the loss for each mini-batch and performing gradient updates. This repeats until a stopping criterion is met, for example, a fixed number of epochs or the loss falling below a predefined threshold.

- Next, we improve training performance and convergence speed by tuning the hyperparameters, such as learning rates, batch sizes, weight regularization terms, or network architectures.

- We need to assess the model’s performance using validation or test datasets and eventually deploy the model in real-world applications through software integrations or embedded devices.
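The training steps above can be sketched end to end with a deliberately small model. This is a minimal NumPy sketch, not a CNN: logistic regression on synthetic two-feature data stands in for a network on image features, and the learning rate, batch size, and epoch count are arbitrary assumptions. It still follows the same loop: normalize, initialize, shuffle into mini-batches, compute gradients, and update.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: two Gaussian blobs standing in for image features,
# deliberately put on a large, shifted scale so normalization matters.
n = 400
X = np.vstack([rng.normal(-1.0, 1.0, size=(n // 2, 2)),
               rng.normal(1.0, 1.0, size=(n // 2, 2))]) * 50 + 100
y = np.concatenate([np.zeros(n // 2), np.ones(n // 2)])

# Preprocessing: standardize each feature to zero mean and unit variance.
# (In a real project, compute these statistics on the training split only.)
X = (X - X.mean(axis=0)) / X.std(axis=0)

# Initialization: small random weights and a zero bias.
w = rng.normal(scale=0.01, size=2)
b = 0.0

def predict_proba(features, weights, bias):
    """Sigmoid of a linear score: the model's probability of class 1."""
    return 1.0 / (1.0 + np.exp(-(features @ weights + bias)))

learning_rate, batch_size, epochs = 0.1, 32, 20
for epoch in range(epochs):
    order = rng.permutation(n)  # reshuffle the data every epoch
    for start in range(0, n, batch_size):
        idx = order[start:start + batch_size]
        p = predict_proba(X[idx], w, b)
        # Gradients of the mean binary cross-entropy loss with respect to w and b.
        grad_w = X[idx].T @ (p - y[idx]) / len(idx)
        grad_b = float(np.mean(p - y[idx]))
        # Plain mini-batch SGD update.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b

accuracy = float(np.mean((predict_proba(X, w, b) > 0.5) == y))
print(f"training accuracy: {accuracy:.2f}")
```

This reports accuracy on the training data itself; as the next section explains, the meaningful number comes from held-out validation or test data.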

Now let us move to the next step: validation.

Validation

Validation is fundamental for quantitatively assessing the performance and generalization capabilities of algorithms. It ensures the reliability and effectiveness of the models when applied to real-world data. Validation evaluates a model’s ability to make accurate predictions on previously unseen data, and hence its ability to generalize.

Now let us explore some of the key techniques involved in validation.

Cross-Validation Techniques

- K-Fold Cross-Validation is the method where the dataset is partitioned into K non-overlapping subsets. The model is trained and evaluated K times, with each fold taking turns as the validation set while the rest serve as the training set. The results are averaged to obtain a robust performance estimate.

- Leave-One-Out Cross-Validation, or LOOCV, is an extreme form of cross-validation where each data point in turn is used as the validation set while the remaining data points form the training set. LOOCV offers an exhaustive evaluation of model performance but requires training the model once per data point.
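A minimal NumPy sketch of generating K-fold splits as index arrays. Setting `k` equal to the number of samples gives LOOCV. The helper name is ours; in practice you would train and score a model on each `(train_idx, val_idx)` pair and average the scores.

```python
import numpy as np

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) pairs for K-fold cross-validation.

    Each sample lands in exactly one validation fold; k = n_samples gives LOOCV.
    """
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx

folds = list(k_fold_indices(10, k=5))
loocv = list(k_fold_indices(4, k=4))
```

`np.array_split` tolerates sample counts that do not divide evenly by `k`, in which case some folds are one sample larger.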

Stratified Sampling

In imbalanced datasets, where a few classes have significantly fewer instances than others, stratified sampling ensures that the class distribution is the same in the training and validation sets.
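Stratified sampling can be sketched by splitting each class separately and then merging the pieces. This NumPy version assumes integer labels and a 20% validation share; the helper name and ratio are illustrative assumptions.

```python
import numpy as np

def stratified_split(labels, val_frac=0.2, seed=0):
    """Split indices so each class keeps (roughly) the same share in train and validation."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    train_idx, val_idx = [], []
    for c in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == c))  # shuffle within the class
        n_val = round(len(idx) * val_frac)
        val_idx.append(idx[:n_val])
        train_idx.append(idx[n_val:])
    return np.concatenate(train_idx), np.concatenate(val_idx)

labels = np.array([0] * 90 + [1] * 10)  # a 90:10 imbalanced dataset
train_idx, val_idx = stratified_split(labels)
```

Both splits keep the original 90:10 ratio, whereas a plain random split of this size could easily leave the validation set with zero or one minority example.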

Performance Metrics

To assess the model’s performance, a range of performance metrics for computer vision tasks is used, including but not limited to the following.

- Accuracy is the ratio of the correctly predicted instances to the total number of instances.

- Precision is the proportion of true positive predictions among all positive predictions.

- Recall is the proportion of true positive predictions among all positive instances.

- F1-Score is the harmonic mean of precision and recall.

- Mean Average Precision (mAP) is commonly used in object detection and image retrieval tasks to evaluate the quality of ranked lists of results.
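The first four metrics above follow directly from counts of true positives, false positives, and false negatives. This is a minimal NumPy sketch for binary labels, where 1 is the positive class. mAP is not shown, since it additionally requires ranked, scored predictions.

```python
import numpy as np

def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive class)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_pred == 1) & (y_true == 1))
    fp = np.sum((y_pred == 1) & (y_true == 0))
    fn = np.sum((y_pred == 0) & (y_true == 1))
    accuracy = np.mean(y_pred == y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return float(accuracy), float(precision), float(recall), float(f1)

y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]  # tp = 3, fp = 1, fn = 1, tn = 3
metrics = binary_metrics(y_true, y_pred)
```

Returning 0.0 when a denominator is zero is one common convention; libraries differ in how they handle that edge case.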

Hyperparameter Tuning

Validation is closely integrated with hyperparameter tuning, where the model’s hyperparameters are systematically adjusted and evaluated using the validation set. Techniques such as grid search, random search, or Bayesian optimization help identify the optimal hyperparameter configuration for the model.
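Grid search, the simplest of the techniques above, tries every combination and keeps the best validation score. In this sketch, `train_and_validate` is a hypothetical user-supplied function, and `fake_validation_score` is a toy stand-in so the example runs without training a real model.

```python
import itertools

def grid_search(train_and_validate, grid):
    """Try every combination in `grid` and keep the one with the best validation score.

    train_and_validate: hypothetical user-supplied function that trains with the given
    hyperparameters and returns a validation score (higher is better).
    """
    best_params, best_score = None, float("-inf")
    keys = list(grid)
    for values in itertools.product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        score = train_and_validate(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

# Toy stand-in for a real training run: the score peaks at lr=0.01, batch_size=64.
def fake_validation_score(learning_rate, batch_size):
    return -abs(learning_rate - 0.01) - abs(batch_size - 64) / 1000

grid = {"learning_rate": [0.1, 0.01, 0.001], "batch_size": [32, 64, 128]}
best_params, best_score = grid_search(fake_validation_score, grid)
```

The number of runs grows multiplicatively with each added hyperparameter (here 3 x 3 = 9), which is why random search and Bayesian optimization are often preferred for larger spaces.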

Data Augmentation

During validation, data augmentation techniques can be applied to simulate variations in the input data and test the model’s robustness and its ability to handle different conditions or transformations.
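A minimal NumPy sketch of a few random augmentations applied to an `(H, W, C)` image: horizontal flip, rotation by a multiple of 90 degrees, and a random crop. These transforms and the crop size are illustrative assumptions; real pipelines often add arbitrary-angle rotations, color jitter, and scaling. Comparing a model's score on augmented and unaugmented validation images is one way to probe robustness.

```python
import numpy as np

def augment(image, rng, crop=24):
    """Random horizontal flip, 90-degree rotation and crop of an (H, W, C) image.

    Assumes both image sides are at least `crop` pixels after rotation.
    """
    if rng.random() < 0.5:
        image = image[:, ::-1]                                   # horizontal flip
    image = np.rot90(image, k=rng.integers(4), axes=(0, 1))      # rotate by 0/90/180/270 degrees
    h, w = image.shape[:2]
    top = rng.integers(h - crop + 1)
    left = rng.integers(w - crop + 1)
    return image[top:top + crop, left:left + crop]

rng = np.random.default_rng(0)
image = np.arange(32 * 32 * 3, dtype=np.float32).reshape(32, 32, 3)  # stand-in image
views = [augment(image, rng) for _ in range(4)]                      # four random augmented views
```

Each view is a new arrangement of the original pixels, so the label stays valid while the input changes.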

Training is where the model learns from labeled data, and Validation is where the model’s learning and generalization capabilities are assessed. They ensure that the final model is robust, accurate, and capable of performing well on unseen data, which is critical for computer vision research.

Hyperparameter Tuning

Hyperparameter tuning refers to systematically optimizing the hyperparameters of deep learning models for tasks like image processing and segmentation. Hyperparameters control how the learning algorithm behaves but are not learned from the training data. Tuning them carefully is crucial for achieving accurate results.

Let us look at some of the crucial hyperparameters for model training.

Batch Size

It is the number of training examples used in every forward and backward pass. Large batch sizes give smoother convergence but need more memory. Conversely, small batch sizes need less memory, and their noisier gradient estimates can help escape local minima.

Number of Epochs

The number of epochs defines how many times the entire training dataset is processed during training. Too few epochs can lead to underfitting, and too many can lead to overfitting.

Learning Rate

This determines the step size during gradient-based optimization. If the learning rate is too high, updates can overshoot the minimum and cause the loss to diverge; if it is too low, convergence is slow.
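The effect of the learning rate is easy to see on the simplest possible loss, f(x) = x², whose gradient is 2x. This toy sketch, not a neural network, runs 50 gradient descent steps from x = 5 with three learning rates; each step multiplies x by (1 - 2 × learning rate).

```python
def gradient_descent(learning_rate, steps=50, x0=5.0):
    """Minimize f(x) = x**2 (gradient 2x) with plain gradient descent; return the final x."""
    x = x0
    for _ in range(steps):
        x -= learning_rate * 2 * x
    return x

results = {lr: gradient_descent(lr) for lr in (0.001, 0.1, 1.1)}
for lr, x in results.items():
    print(f"lr={lr}: final x = {x:.3g}")
```

With 0.001 the iterate barely moves toward the minimum at 0 (slow convergence), 0.1 converges close to 0, and 1.1 overshoots further each step and diverges.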

Weight Initialization

The initialization of weights affects training stability. Techniques such as Glorot (Xavier) initialization are designed to mitigate vanishing and exploding gradients.
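A minimal NumPy sketch of Glorot (Xavier) uniform initialization for a dense layer. Weights are drawn from U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out)), giving a variance of 2 / (fan_in + fan_out). This keeps activation and gradient magnitudes roughly constant from layer to layer. The layer sizes are arbitrary.

```python
import numpy as np

def glorot_uniform(fan_in, fan_out, rng):
    """Glorot (Xavier) uniform initialization for a (fan_in, fan_out) weight matrix."""
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out))

rng = np.random.default_rng(0)
W = glorot_uniform(256, 128, rng)
```

For ReLU networks, He initialization, which scales by fan-in only, is a common alternative.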

Regularization Techniques

Techniques like dropout and weight decay help prevent overfitting. Data augmentation, such as random rotations, also improves model generalization.

Choice of Optimizer

The optimizer determines how the model weights are updated during training. Optimizers have their own hyperparameters, such as momentum, decay rates, and epsilon.

Hyperparameter tuning is usually approached as an optimization problem. Techniques like Bayesian optimization explore the hyperparameter space efficiently, balancing computational cost against performance. Well-designed hyperparameter tuning not only adjusts individual hyperparameters but also considers their interactions.
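The Adam update makes those optimizer hyperparameters concrete: `beta1` controls the momentum-like running mean of gradients, `beta2` the decay rate of the running mean of squared gradients, and `eps` guards against division by zero. This is a minimal NumPy sketch minimizing a toy quadratic, not a framework implementation. The defaults shown are the commonly used values, and `lr=0.1` is chosen for the toy problem.

```python
import numpy as np

class Adam:
    """Minimal Adam optimizer; lr, beta1, beta2 and eps are its hyperparameters."""

    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = None
        self.v = None
        self.t = 0

    def step(self, params, grads):
        if self.m is None:
            self.m = np.zeros_like(params)
            self.v = np.zeros_like(params)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grads        # running mean of gradients
        self.v = self.beta2 * self.v + (1 - self.beta2) * grads ** 2   # running mean of squared gradients
        m_hat = self.m / (1 - self.beta1 ** self.t)                     # bias correction for early steps
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return params - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)

# Minimize f(w) = sum((w - 3)^2); its gradient is 2 * (w - 3).
opt = Adam(lr=0.1)
w = np.zeros(2)
for _ in range(500):
    w = opt.step(w, 2 * (w - 3.0))
```

Dividing by the square root of `v_hat` gives each parameter its own effective step size, which is why Adam is often less sensitive to the raw learning rate than plain SGD.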

Performance Evaluation on Unseen Data

In the earlier section, we discussed how to train and validate a model. Now we’ll discuss how to evaluate a model’s performance on unseen data.

Splitting the dataset into training, validation, and test sets is paramount when developing and evaluating models. This split is not to be confused with the training and validation processes we discussed earlier. Keeping separate splits helps us understand the model’s performance on unseen data and ensures that the model generalizes well to new data. Let us look at each split.

- A training dataset is a collection of labeled data points for training the model, adjusting parameters, and inferring patterns and features.

- A separate dataset is used for evaluating the model during development for hyperparameter tuning and model selection. This is the Validation dataset.

- Then there is the test dataset, an independent dataset used for assessing the final performance and generalization ability on unseen data.

Splitting datasets is needed so that the model is not evaluated on the same data it was trained on, which would give an overly optimistic estimate of its performance. Commonly used split ratios are 70:30, 80:20, or 90:10, with the larger portion used for training and the smaller portion used for validation or testing.

Research Publications

You have put so much effort into your research paper. But how do you publish it? Where do you publish it? How do you find the right computer vision research groups? That is what this section covers, so let’s get to it.

Conferences

There are some top-tier computer vision conferences happening across the globe. They are among the best places to showcase research work, look for future collaborations, and build networks.

Conference on Computer Vision and Pattern Recognition (CVPR)

CVPR is one of the most prestigious conferences in the world of Computer Vision. It is an annual event organized by the IEEE Computer Society, with an amazing history of showcasing cutting-edge research papers in image analysis, object detection, deep learning techniques, and much more. CVPR sets a high bar, placing strong emphasis on the technical quality of submissions, which must meet the following criteria.

Papers must make an innovative contribution to the field, such as new algorithms, techniques, or methodologies that advance computer vision.

If applicable, the submissions must have mathematical formulations of their methods, like equations and theorem proofs. This offers a solid theoretical foundation for the paper’s approach.

Next, the paper should include comprehensive experimental results on multiple datasets, with benchmarks against existing models. These are key to demonstrating the effectiveness of the proposed approach.

Clarity – this is a no-brainer; the writing and presentation must be clear and concise. Authors are expected to explain the algorithms, models, and results in a technically sound manner.

CVPR is an amazing platform for networking and engaging with the community. It’s a great place to meet academics, researchers, and industry experts to collaborate and exchange ideas. With an acceptance rate of only 25.8%, an accepted paper earns impressive recognition within the vision community, often leading to citations, greater visibility, and potential collaborations with renowned researchers and professionals.

International Conference on Computer Vision (ICCV)

The ICCV is another premier conference, held every two years, offering an amazing platform for cutting-edge computer vision research. Much like CVPR, ICCV is organized by the IEEE Computer Society and attracts visionaries, researchers, and professionals from around the world. Topics range from object detection and recognition all the way to computational photography. ICCV invites original papers that make a significant contribution to the field. The submission criteria are very similar to CVPR’s: papers must present mathematical formulations, algorithms, experimental methodology, and results. ICCV uses peer review to ensure the technical rigor and quality of accepted papers. Submissions usually go through multiple stages of review and receive detailed feedback on the technical aspects of the research. The acceptance rate at ICCV is low, at 26.2%.

Besides the main conference, the ICCV hosts workshops and tutorials that offer in-depth discussions and presentations in emerging research areas. It also offers challenges and competitions associated with computer vision tasks like image segmentation and object detection.

Like CVPR, it offers excellent opportunities for future collaborations, networking with peers, and exchanging ideas. Papers accepted at ICCV are published in the conference proceedings and made available to the vision community, which gives researchers significant visibility and recognition.

European Conference on Computer Vision (ECCV)

The European Conference on Computer Vision, or ECCV, is another comprehensive conference if you are looking for the top computer vision conferences globally. The ECCV lays a lot of emphasis on the scientific and technical quality of the paper. Like the above two conferences we discussed, it emphasizes how the researcher incorporates the mathematical foundations, algorithms, and detailed derivations and proofs with extensive experimental evaluations.

According to the ECCV formatting guidelines, a research paper ideally ranges from 10 to 14 pages. ECCV uses double-blind peer review, so authors must anonymize their submissions to avoid bias in the review process.

ECCV also offers huge opportunities for collaborations and establishing connections. With an acceptance rate of 31.8%, a researcher can benefit from academic recognition, high visibility, and citations.

Winter Conference on Applications of Computer Vision (WACV)

WACV is a top international computer vision event with the main conference and a few workshops and tutorials. Much like the other conferences, it is held annually. With an acceptance rate below 30%, it attracts leading researchers and industry professionals. The conference usually takes place in the first week of January.

Journals

As a computer vision researcher, you should also publish your work in journals to present your findings and give more insights into the field. Let us look at a few computer vision journals.

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)

TPAMI focuses on various aspects of machine intelligence, pattern recognition, and computer vision. It is a hybrid journal that accepts both traditional and author-paid open-access manuscript submissions.

Open-access papers are freely accessible to everyone through IEEE Xplore and the Computer Society Digital Library.

For traditional manuscript submissions, the IEEE Computer Society offers various award-winning journals, and you can browse the different topics to find those that fit your research. They often publish special sections on emerging topics. Factors to consider include the time from submission to publication, bibliometric scores such as the impact factor, and publishing fees.

International Journal of Computer Vision (IJCV)

The IJCV offers a platform for new research results. With 15 issues a year, the International Journal of Computer Vision publishes high-quality, original contributions to the field of computer vision. Articles range from 10-page regular articles up to 30-page survey papers that present the state of the art. Papers cover the mathematical, physical, and computational aspects of computer vision, such as image formation, processing, interpretation, machine learning techniques, and statistical approaches. Researchers are not charged to publish in IJCV. It is not only a journal that opens doors for researchers to showcase their work but also a goldmine of information on deep learning, artificial intelligence, and robotics.

Journal of Machine Learning Research (JMLR)

Established in 2000, JMLR is a forum for the electronic and print publication of comprehensive research papers. It covers topics like machine learning algorithms and techniques, deep learning, neural networks, robotics, and computer vision. JMLR is freely available to the public and run by volunteers. Its papers undergo rigorous review, making the journal a valuable resource for the latest developments in the field.

You’ve invested weeks and months into this paper. Why not get the recognition and credibility your work deserves? The above Journals and Conferences offer the ultimate gateway for a researcher to showcase their works and open up a plethora of opportunities for academic and industry collaborations.

Conclusion

In conclusion, our journey through the intricate world of computer vision research has been a fun one. From the initial stage of understanding the problem statement to the final step of publishing at conferences and in journals, we’ve delved into each stage in detail.

Every piece of research, big or small, contributes to the ever-evolving field of Computer Vision.

We’ve more detailed posts coming your way. Stay tuned! See you guys in the next one!!
