Research Papers

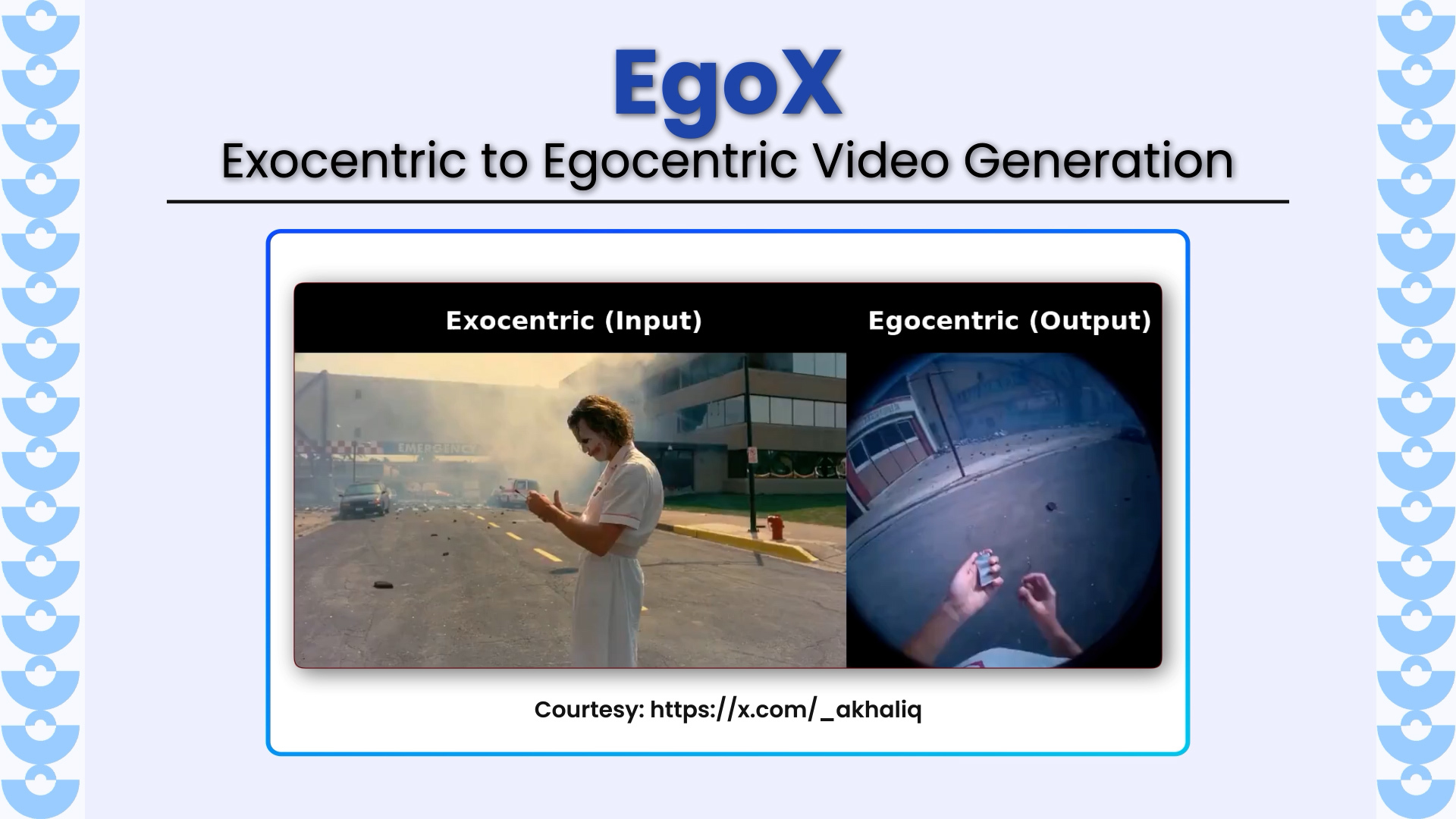

EgoX introduces a novel framework for translating third-person (exocentric) videos into realistic first-person (egocentric) videos using only a single input video. The work tackles a highly challenging problem of extreme

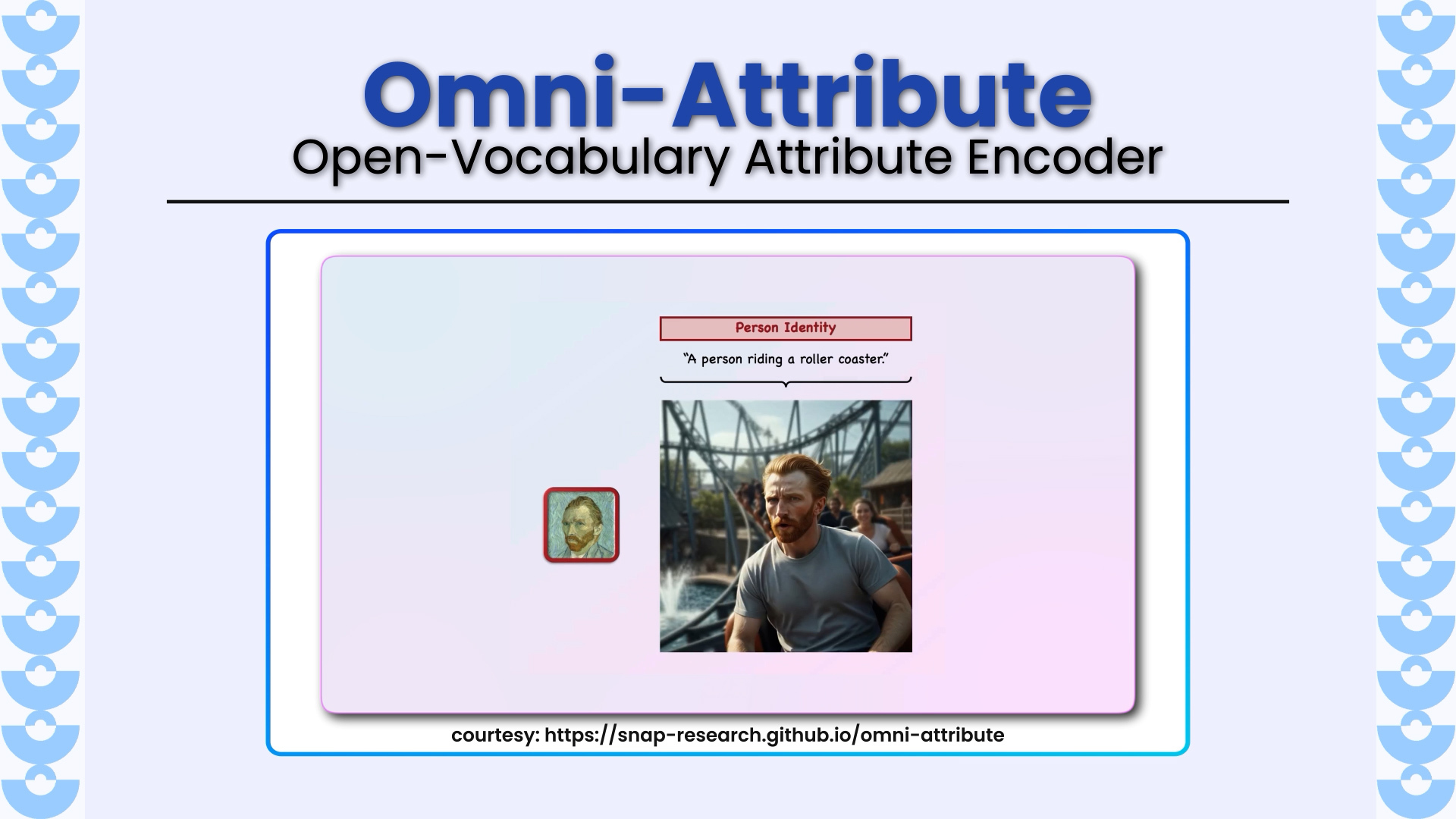

Omni-Attribute introduces a new paradigm for fine-grained visual concept personalization, solving a long-standing problem in image generation: how to transfer only the desired attribute (identity, hairstyle, lighting, style, etc.) without

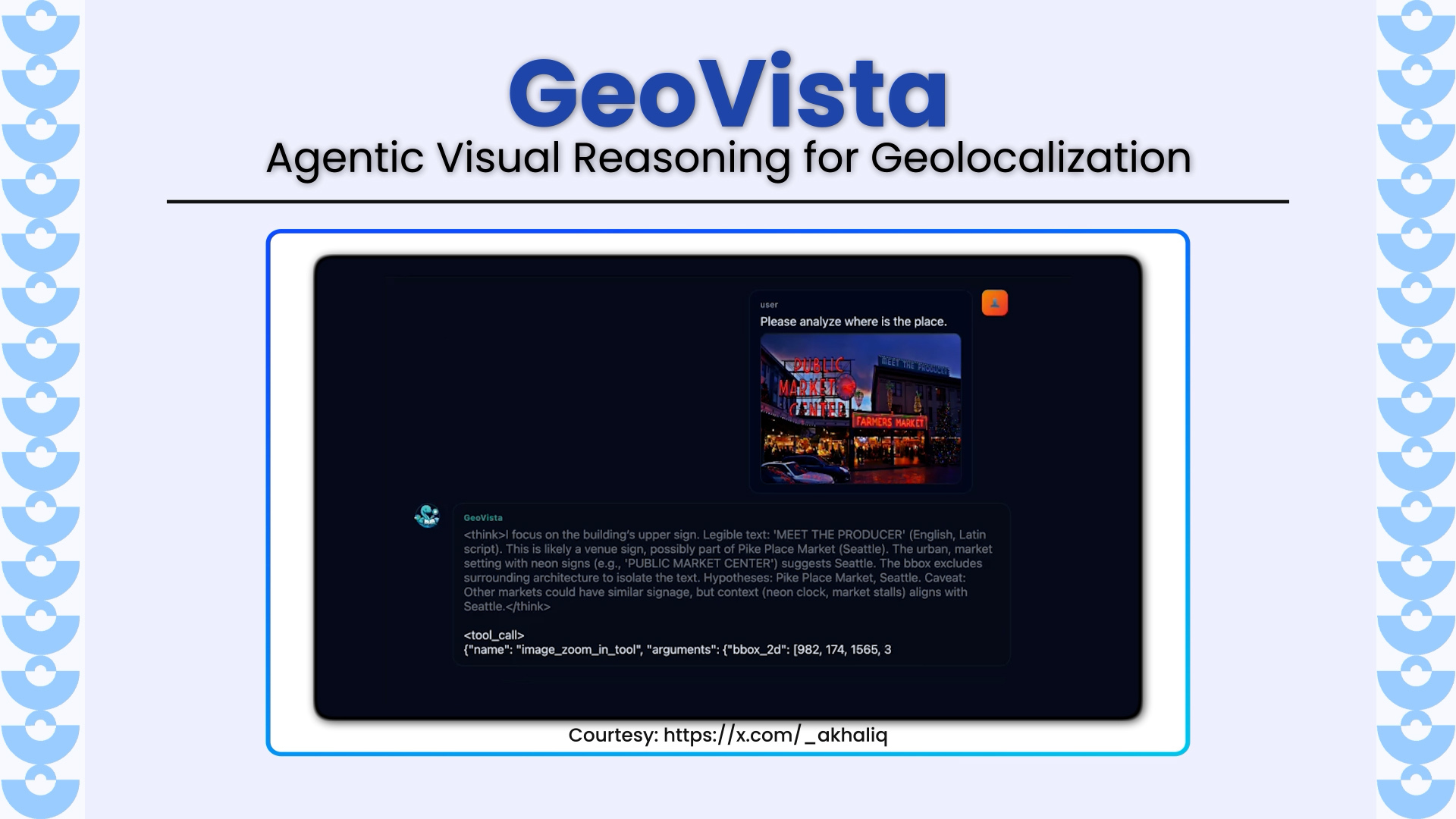

GeoVista opens a new frontier in multimodal reasoning by enabling agentic geolocalization, a dynamic process where a model inspects high-resolution images, zooms into regions of interest, retrieves web information in

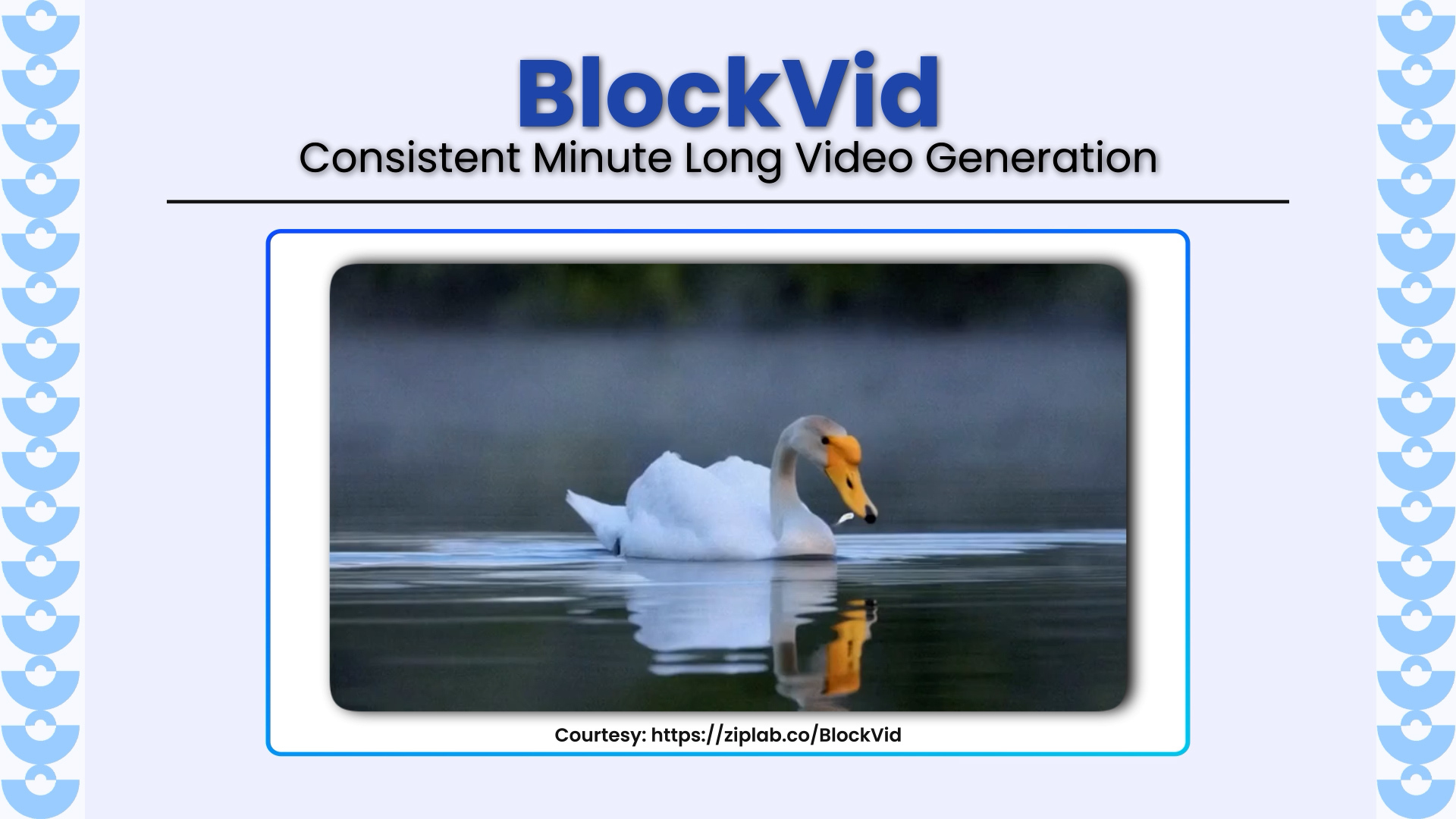

BlockVid represents a major leap forward in long-video generation, tackling one of the field's hardest open problems: producing coherent, high-fidelity, minute-long clips without collapse, drift, or degradation

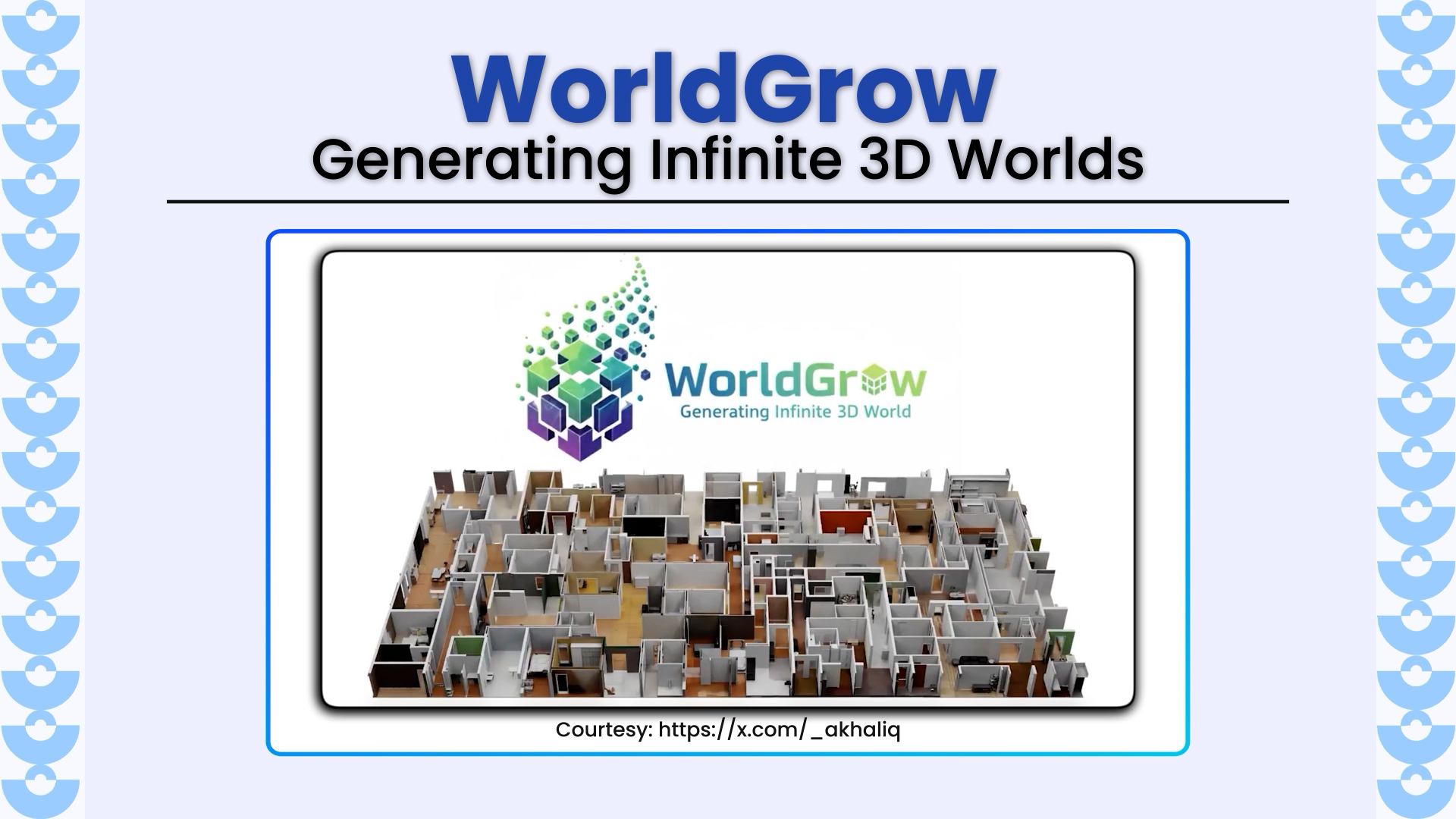

WorldGrow redefines 3D world generation by enabling infinite, continuous 3D scene creation through a hierarchical block-wise synthesis and inpainting pipeline. Developed by researchers from Shanghai Jiao Tong University, Huawei Inc.,

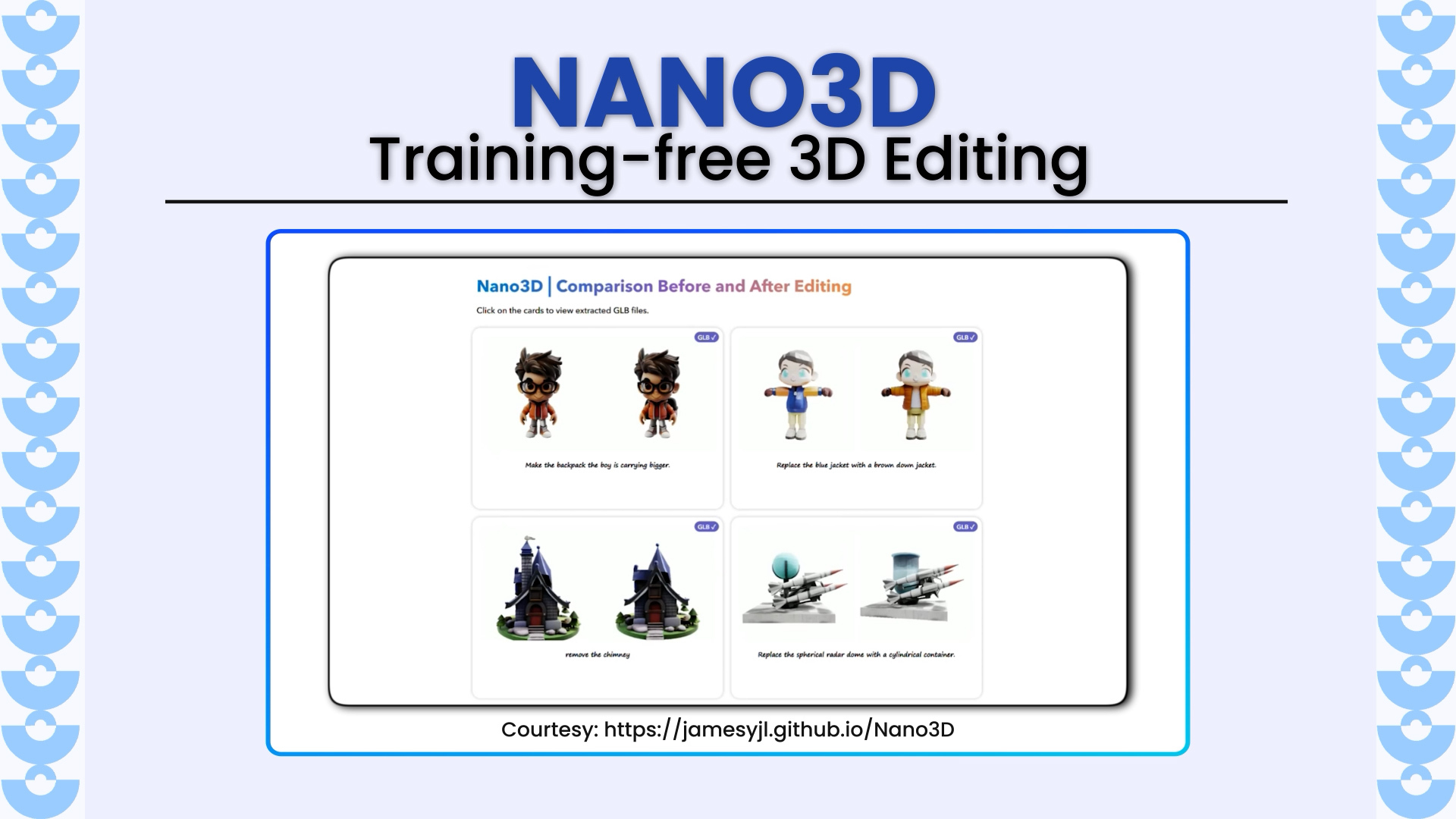

Nano3D revolutionizes 3D asset editing by enabling training-free, part-level shape modifications like removal, addition, and replacement without any manual masks. Developed by researchers from Tsinghua University, Peking University, HKUST, CASIA,

Triangle Splatting+ redefines 3D scene reconstruction and rendering by directly optimizing opaque triangles, the fundamental primitive of computer graphics, in a fully differentiable framework. Unlike Gaussian Splatting or NeRF-based approaches,

Code2Video introduces a revolutionary framework for generating professional educational videos directly from executable Python code. Unlike pixel-based diffusion or text-to-video models, Code2Video treats code as the core generative medium, enabling

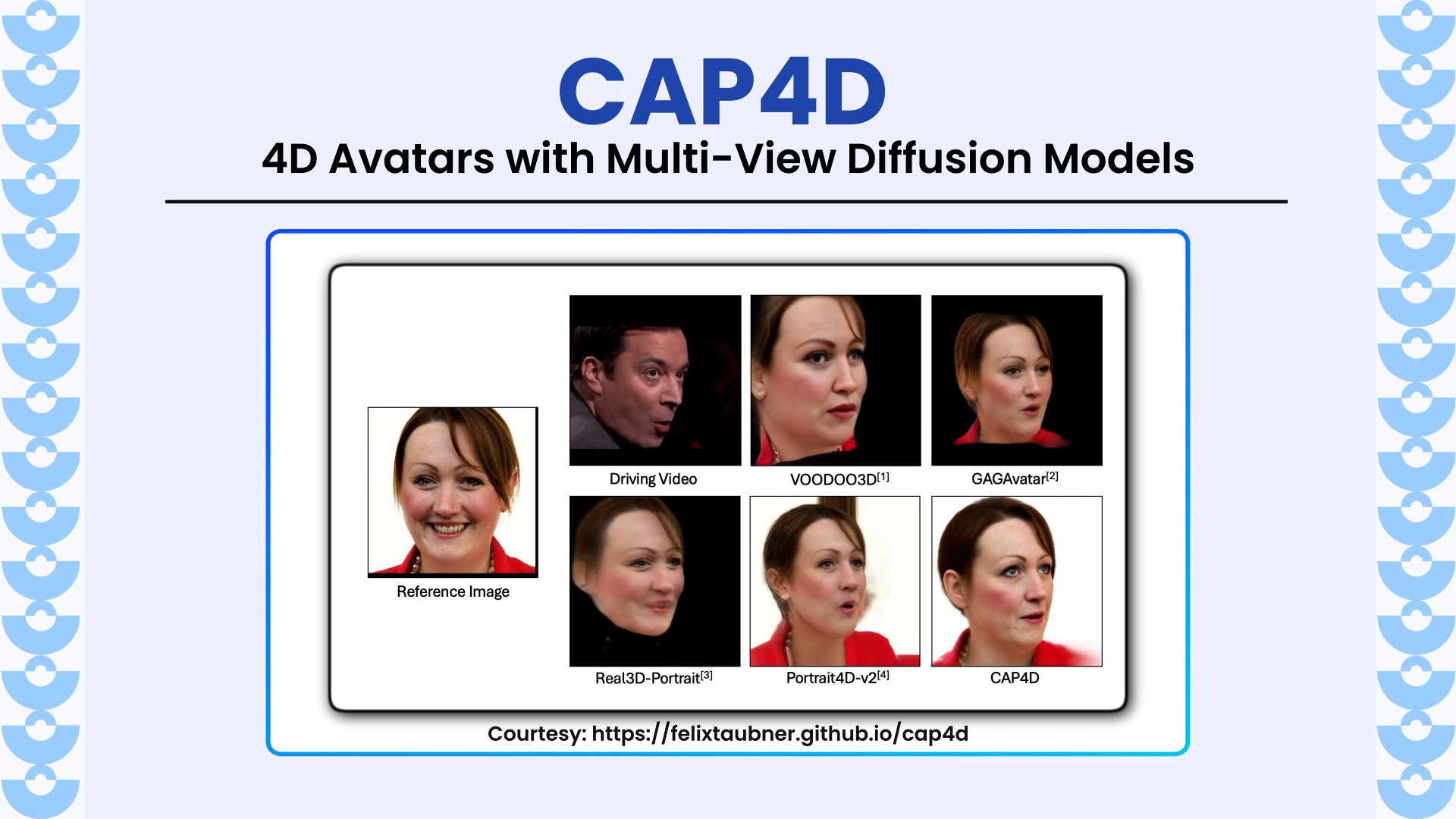

CAP4D introduces a unified framework for generating photorealistic, animatable 4D portrait avatars from any number of reference images, even a single one. By combining

Test3R is a novel and simple test-time learning technique that significantly improves 3D reconstruction quality. Unlike traditional pairwise methods such as DUSt3R, which often suffer from geometric inconsistencies and poor generalization,

BlenderFusion is a novel framework that merges 3D graphics editing with diffusion models to enable precise, 3D-aware visual compositing. Unlike prior approaches that struggle with multi-object and camera disentanglement, BlenderFusion

LongSplat is a new framework that achieves high-quality novel view synthesis from casually captured long videos, without requiring camera poses. It overcomes challenges like irregular motion, pose drift, and memory