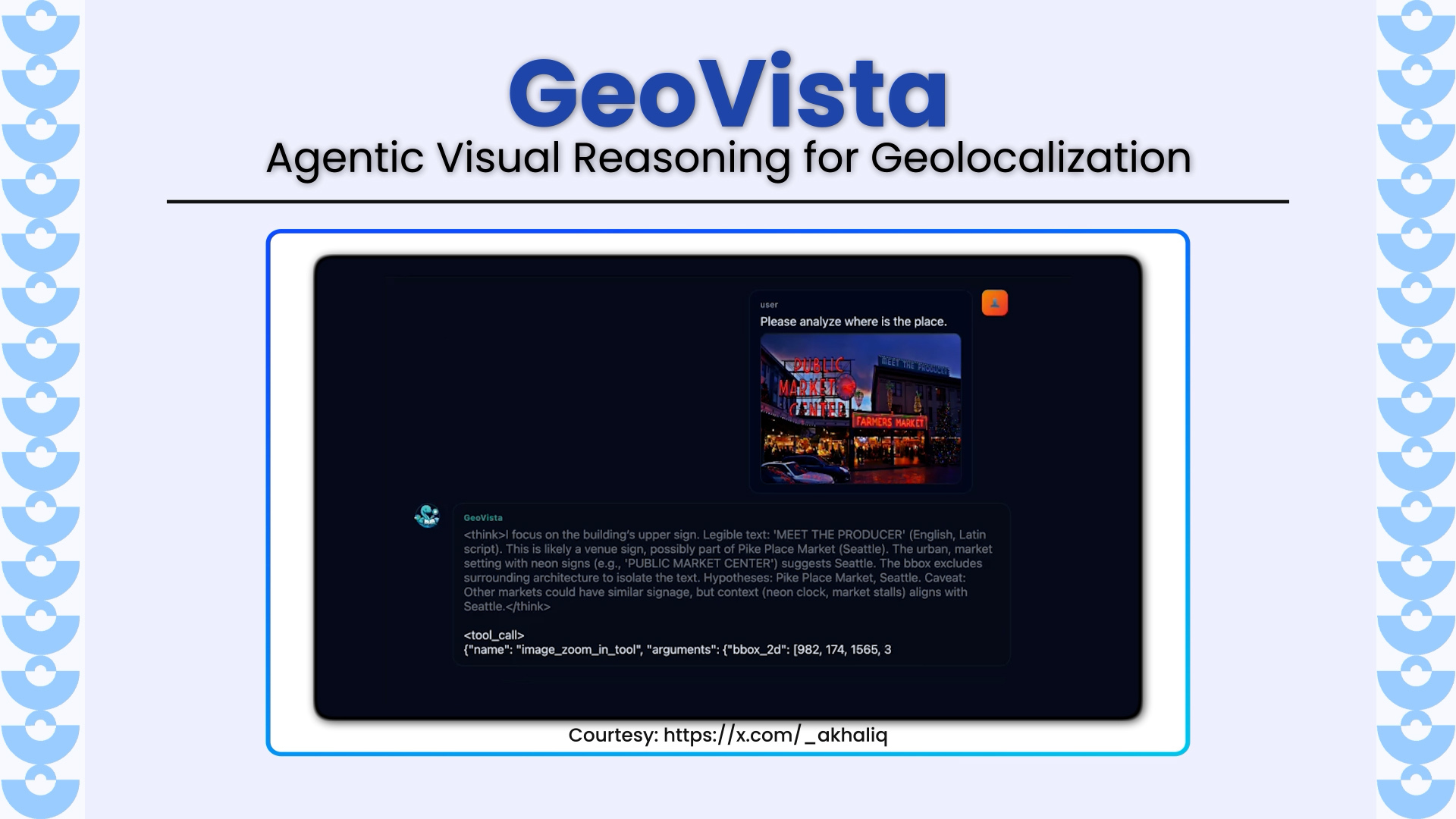

GeoVista introduces a new frontier in multimodal reasoning by enabling agentic geolocalization, a dynamic process where a model inspects high-resolution images, zooms into regions of interest, retrieves web information in real time, and iteratively reasons toward pinpointing a location. Developed by researchers from Fudan University, Tencent Hunyuan, Tsinghua University, and Shanghai Innovation Institute, GeoVista addresses the long-standing gap between visual reasoning and web-augmented hypothesis refinement.

Key Highlights

- Agentic Visual Reasoning Loop: Integrates image zoom-in tools and web search directly into the model’s reasoning steps, enabling a smooth “think → act → observe” loop similar to OpenAI o3-style multimodal reasoning.

- GeoBench: A New High-Resolution Global Benchmark: Introduces a rigorously curated benchmark containing 1,142 images across 6 continents, spanning photos, panoramas, and satellite images filtered for localizability and excluding trivial landmark images for a fair, reasoning-only test.

- Tool-Aware Training Pipeline: Uses a cold-start SFT stage to teach tool-use patterns, followed by reinforcement learning (GRPO) with multi-turn trajectories. Thought processes, tool calls, and observations are serialized into rich training examples.

- Hierarchical Reward Design: Reward models for correct predictions at country, state, and city levels, enabling fine-grained control and significantly improving multi-turn geolocalization accuracy.

- SOTA Performance Among Open-Source Models: On GeoBench, GeoVista surpasses all open-source baselines such as Mini-o3, DeepEyes, and Thyme-RL, achieving:

- 72.68% city-level accuracy

- 79.60% state-level accuracy

- 92.64% country-level accuracy

- and performs on par with GPT-5 and Gemini-2.5-Flash on several metrics.

- Nuanced Distance Evaluation (<3 km Accuracy): Achieves 52.83% within 3 km and a median geodetic error of 2.35 km, far outperforming all open-source counterparts.

Why It Matters

GeoVista shows how multimodal models become true agents, not just perceivers.

It demonstrates a missing capability in current VLMs:

The fusion of vision + tool use + external knowledge retrieval.

Real-world geolocation demands context like:

- language on signs,

- architectural styles,

- vehicle types,

- terrain,

- and confirmatory web evidence.

GeoVista’s design marks a shift from static perception to interactive, tool-mediated intelligence, offering a blueprint for future research on embodied agents, travel assistants, security intelligence systems, and long-context multimodal reasoning.

Explore More

Paper: arXiv:2511.15705

Project Page: https://geo-vista.github.io

Related LearnOpenCV Posts (contextually relevant):

- Browser Automation using LangGraph: https://learnopencv.com/langgraph-building-a-visual-web-browser-agent/

- Self-Correcting Agent for Code Generation: https://learnopencv.com/langgraph-self-correcting-agent-code-generation/

5K+ Learners

Join Free VLM Bootcamp3 Hours of Learning