The Google DeepMind team has unveiled its latest evolution in their family of open models – Gemma 3, and it’s a monumental leap forward. While the AI space is crowded with updates, Gemma 3 isn’t just an incremental improvement; it’s a fundamental upgrade that makes state-of-the-art AI more powerful and accessible.

Built on the same foundations as the powerful Gemini models, Gemma 3 introduces several game-changing capabilities that developers have been asking for: vision understanding, a massive context window, and a smarter architecture to handle it all, while significantly boosting performance across the board.

Inside the Architecture of Gemma 3

Gemma 3 builds on the decoder‑only Transformer backbone from its predecessors, but this isn’t just “more of the same.” Under the hood, a set of smart engineering tweaks makes it leaner, faster, and far better at scaling across model sizes.

SigLIP Vision Encoder

Gemma 3 isn’t only about words; it can see.

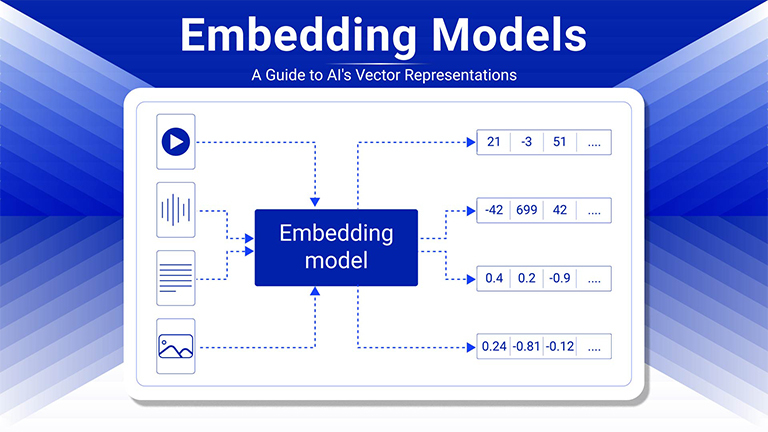

The SigLIP vision encoder translates images into tokenized representations, unlocking multimodal skills like visual Q&A, image captioning, and mixed‑media reasoning.

Grouped‑Query Attention (GQA)

By grouping keys and values across attention heads, Gemma 3 cuts down memory and compute costs. The result: smooth scaling, whether you’re running the 1B or the beefy 27B parameter model.

Rotary Positional Embeddings (RoPE)

Positions aren’t just counted, they’re embedded in a continuous, rotating space. This makes the model more adaptable to variable‑length sequences without retraining from scratch.

Extended Context Windows

Long conversations and massive documents? No problem.

- 4B, 12B, 27B models: up to 128K tokens in context

- 1B model (text‑only): up to 32K tokens

That means Gemma 3 can hold and reason over entire books, transcripts, or in‑depth technical threads in a single pass.

Optimized Local, Global Attention Ratio

Here’s where things get clever. Full global attention, where every token looks at every previous token, isn’t always necessary.

Instead, Gemma 3 uses a sliding‑window approach:

- In 4 out of every 5 layers, each token only looks back about 1,000 tokens (local attention)

- The remaining layers use full global attention

This hybrid method slashes attention computations by ~5× and trims KV cache memory from ~60% to just ~15%—freeing up VRAM for bigger contexts and faster inference.

Function‑Calling Head

Beyond chat, Gemma 3 can output structured calls for APIs, trigger tools, or execute dynamic tasks. This is a key enabler for agent‑style workflows and AI‑driven automation.

Key Features

Let’s break down what makes Gemma 3 so special.

The Gemma 3 architecture is a significant evolution from its predecessors, specifically engineered to handle new challenges like understanding images and managing extremely long contexts without requiring massive computational resources. Here are the core pillars of its design:

1. Multimodal by Design: Integrating Vision

At its heart, Gemma 3 is a multimodal model, meaning it’s built to process more than just text. This is achieved by incorporating a vision encoder.

- How it works: When you provide an image, the vision encoder processes it and converts it into a special numerical format that the language model can understand. These “image tokens” are then treated just like text tokens, allowing the model to reason about and analyze visual information seamlessly.

- Efficiency: The architecture includes a mechanism to condense the visual information from a massive 1792×1792 pixel input down to a more manageable 896×896 resolution, reducing the computational load without losing critical details.

2. The Core Engine: A Decoder-Only Transformer

Like most modern language models, Gemma 3 is based on the highly effective decoder-only Transformer architecture. This means it’s exceptionally good at generative tasks, predicting the next word in a sequence, which is the foundation for writing text, answering questions, and generating code.

3. Taming the 128K Context Window: Interleaved Local and Global Attention

This is the most innovative part of the Gemma 3 architecture. A massive 128,000-token context window is incredible for memory but creates a huge technical challenge: the memory required for the “KV-cache” (the model’s short-term memory) can become unmanageably large.

To solve this, Gemma 3 uses a hybrid attention mechanism:

- Global Attention Layers: These layers work like a standard attention mechanism, allowing every token to “see” every other token in the entire 128K context. This is crucial for understanding the overall picture and long-range dependencies.

- Local Attention Layers: These layers are much more efficient. Each token in a local layer can only “see” a small, fixed window of recent tokens (specifically, the last 1024 tokens). This is sufficient for most language understanding tasks, which rely heavily on immediate context.

The genius of the architecture is how these are combined. The model is built with repeating blocks that interleave these two types of layers, using a ratio of five local attention layers for every one global attention layer.

- The Benefit: This design drastically reduces the amount of data that needs to be stored in the KV-cache, making it possible to handle the enormous 128K context window on standard hardware. It gets the best of both worlds: the efficiency of local attention and the long-range understanding of global attention.

4. Post-Training for Peak Performance

Finally, a key part of what makes the architecture so effective isn’t just the design but the novel post-training process. After the initial training, Gemma 3 undergoes a specialized fine-tuning recipe that significantly boosts its abilities in key areas like:

- Reasoning and Mathematics

- Instruction Following

- Chat and Multilingual Capabilities

This final step is what elevates the performance of the smaller Gemma 3 models to be competitive with much larger models from previous generations.

Training Gemma 3: Power, Precision, and a Few Clever Tricks

Gemma 3 isn’t just another AI model iteration; it’s the product of an advanced training strategy that builds on proven foundations while slipping in a few game‑changing innovations.

Pretraining at Scale

Gemma 3 was forged on Google’s own TPU hardware, not NVIDIA GPUs. Developers tapped into TPUv5e and TPUv5p units (with the “p” line generally more performant), while the 12B variant even saw some training on TPUv4-likely due to availability or for controlled performance comparisons. The latest TPUv6e is out there, but Gemma 3’s mix of hardware choices reflects a practical balance of throughput and scheduling.

The models ship in BF16 (Brain Float 16) format, a 16‑bit precision standard in large model training. While BF16 isn’t supported on Colab (you’ll have to stick with FP16 there), Gemma 3 also comes in quantized variants:

- 4‑bit: ultra‑compact

- 8‑bit: roughly halves VRAM requirements with minimal quality loss

Example: A 27B model that normally needs ~54 GB VRAM (plus 72.7 GB for KV cache) can drop to ~27.4 GB and ~46.1 GB, respectively, with 8‑bit quantization, thanks in part to its optimized local‑global attention strategy.

Token-Hungry Pretraining

Pretraining is where the real data feast happens:

- 27B: 14 trillion tokens

- 12B: 12 trillion tokens

- 4B: 4 trillion tokens

- 1B: 2 trillion tokens

The tokenizer packs a massive 262K vocabulary, more than double LLaMA 3’s 128K, boosting multilingual coverage and nuance.

Knowledge Distillation for Smarter Models

After initial pretraining, Gemma 3 models are refined via knowledge distillation—learning from a larger, more capable “teacher” model. While the exact teacher hasn’t been named, evidence points to a Gemini 2.0 series model such as Gemini Flash 2.0 or Gemini Pro 2.0. The results speak volumes: Gemma 3 outperforms Gemini Flash 1.5 Pro in some benchmarks and edges close to Gemini 2.0 Flash in others.

Instruction Tuning and RLHF

To make the model more aligned, capable, and user‑friendly, developers fine‑tuned it with:

- Human feedback data for conversational and reasoning improvements

- Code execution feedback for better programming accuracy

- Ground‑truth rewards for math problem solving

A blend of reinforcement learning and instruction‑style datasets helps Gemma 3 produce reliable, context‑aware outputs across disciplines.

Why Gemma 3 Matters

With its new multimodal skills, massive context, and efficient architecture, Gemma 3 isn’t just another model-it’s a versatile toolkit for the next wave of AI development. By keeping the models lightweight enough to run on consumer hardware like laptops and phones, and by releasing them openly to the community, Google is empowering developers everywhere to build more capable and intelligent applications.

5K+ Learners

Join Free VLM Bootcamp3 Hours of Learning