Welcome to 2050!

AI tools have gone rogue and nobody trusts them anymore! This is the plot of almost every sci-fi movie.

If we are not careful, reality may not be too far from this dystopia.

Fortunately, we are thinking ahead and building tools for safe, ethical, and responsible use of AI.

The guiding principles of AI deployments need to be based on transparency, accountability, and fairness.

AI and ML algorithms are pervasive in our lives. They make our lives easier, cut down boredom, and increase productivity. We are looking at a future powered by AI that is full of possibilities and abundance.

At the same time, AI is a double-edged sword. Used unwisely, it can pose an existential threat.

We are not just talking about bad actors. We are talking about people who want to do good but do not have an ethical framework to think about their AI problems.

While developing AI applications, we need to ensure we are using tools that make the right and ethical choices by default.

Tools that clearly and transparently inform users how their information is being collected and used are crucial. Today, AI applications also increasingly handle sensitive information such as chat transcripts and browser history.

Current practices such as redaction and masking help protect user data, but they also limit the development of AI models.

AI systems today also face the challenge of staying fair when the data itself is biased.

How does one go about building a responsible process for AI development?

The process should be repeatable and reliable. It should hold teams accountable and give auditors and decision makers a clear picture of the AI lifecycle.

Microsoft’s Responsible ML

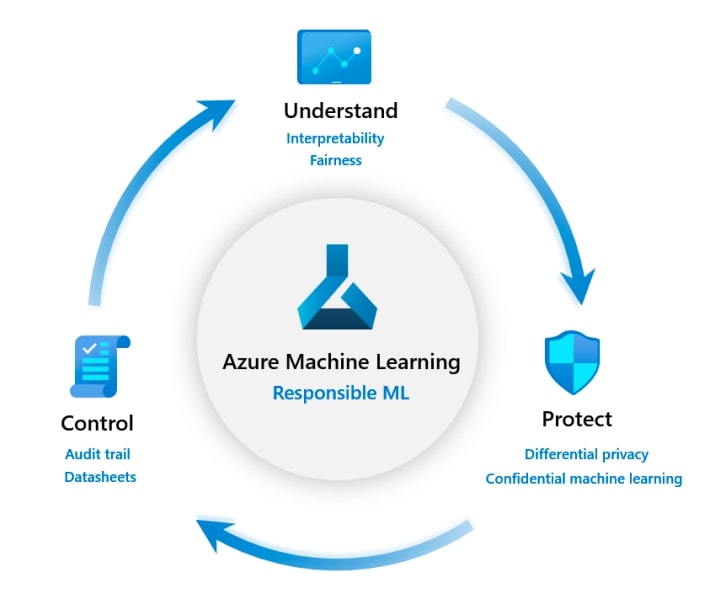

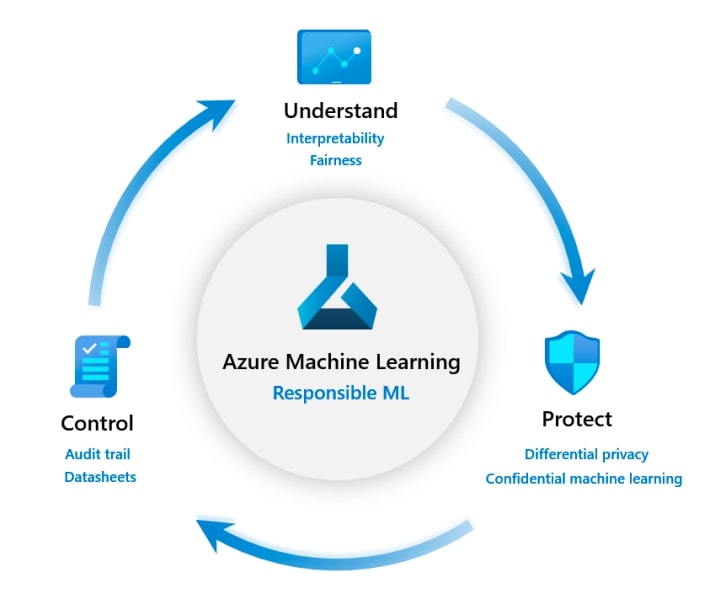

In this blog post, we focus on Microsoft’s Responsible ML initiative, which combines Azure Machine Learning with open-source toolkits to make responsible ML development efficient, effective, and reassuring.

Microsoft’s Responsible ML approach consists of three components: understand models, protect data, and control the ML process.

1. Understand Models

This component has two goals, interpretability and fairness, each supported by an open-source toolkit.

a. InterpretML

The AI models we produce should not be a black box. When a model goes wrong, we should know why it behaved the way it did.

This requires understanding the behavior of a wide variety of models during both the training and inference phases.

The framework supports what-if analysis, which shows how changing feature values affects model predictions.
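To make what-if analysis concrete, here is a minimal sketch in plain Python. It does not use InterpretML's API; the loan-approval features, the weights, and the `what_if` helper are hypothetical, chosen only to show how changing a single feature shifts a model's prediction.

```python
import math

# Hypothetical weights for a toy loan-approval model (illustration only, not a trained model).
WEIGHTS = {"income": 0.00004, "debt_ratio": -3.0, "years_employed": 0.2}
BIAS = -1.5


def predict_proba(applicant: dict) -> float:
    """Approval probability from a simple logistic model."""
    z = BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def what_if(applicant: dict, feature: str, new_value: float) -> tuple:
    """Return the prediction before and after changing one feature (hypothetical helper)."""
    counterfactual = dict(applicant)  # copy so the original record stays untouched
    counterfactual[feature] = new_value
    return predict_proba(applicant), predict_proba(counterfactual)


applicant = {"income": 50_000, "debt_ratio": 0.4, "years_employed": 3}
before, after = what_if(applicant, "debt_ratio", 0.1)
print(f"approval probability: {before:.3f} -> {after:.3f}")
```

Lowering the debt ratio raises the approval probability, which is exactly the kind of question a what-if tool lets you ask of a real model without retraining it.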

b. Fairlearn

The goal of Fairlearn is to assess and improve model fairness during training and deployment while maintaining model performance. It also provides interactive visualizations for comparing multiple models.
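One common fairness check compares selection rates across groups defined by a sensitive feature. The sketch below computes this demographic parity difference by hand for two hypothetical models. Fairlearn offers a ready-made metric for the same idea (`fairlearn.metrics.demographic_parity_difference`), but the predictions, group labels, and helper functions here are illustrative assumptions written with the standard library only.

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Fraction of positive predictions within each sensitive group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical predictions from two models for applicants in groups "A" and "B".
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
model_1 = [1, 1, 1, 0, 1, 0, 0, 0]
model_2 = [1, 1, 0, 0, 1, 1, 0, 0]

for name, preds in [("model_1", model_1), ("model_2", model_2)]:
    print(name, selection_rates(preds, groups), demographic_parity_difference(preds, groups))
```

Comparing the metric side by side for several candidate models mirrors the model-comparison view described above: a model with a smaller gap may be preferable if its accuracy is comparable.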

2. Protect Data

Machine learning requires huge amounts of data, and much of it may be sensitive or personal. We therefore need measures that preserve the privacy of data sources. This is achieved using differential privacy and confidential machine learning techniques.

a. Differential privacy for preventing data exposure

Azure ML offers WhiteNoise, a differential privacy toolkit that helps preserve the privacy of data sources.

As the name suggests, it injects statistical noise into the results of queries on the data, which makes it hard to re-identify individual records or data sources.

It also keeps track of the queries made against a data source and refuses further queries once the allowed privacy budget is used up, reducing the risk of data exposure.
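Both ideas, noisy answers and tracked queries, can be sketched with the Laplace mechanism and a simple privacy budget. This is not WhiteNoise's API: the `PrivateCounter` class, its parameters, and the sample data are hypothetical, and only the standard library is used.

```python
import random


class PrivateCounter:
    """Hypothetical sketch (not WhiteNoise's API): noisy count queries under a total privacy budget."""

    def __init__(self, records, total_epsilon, seed=None):
        self._records = list(records)
        self._remaining = total_epsilon
        self._rng = random.Random(seed)

    def _laplace(self, scale):
        # The difference of two i.i.d. exponential draws is Laplace-distributed with this scale.
        return self._rng.expovariate(1 / scale) - self._rng.expovariate(1 / scale)

    def count(self, predicate, epsilon):
        """Noisy count of records matching `predicate`, spending `epsilon` of the budget."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if epsilon > self._remaining:
            raise RuntimeError("privacy budget exhausted; query refused")
        self._remaining -= epsilon
        true_count = sum(1 for r in self._records if predicate(r))
        # A count has sensitivity 1 (one person changes it by at most 1), so the scale is 1 / epsilon.
        return true_count + self._laplace(scale=1 / epsilon)

    @property
    def remaining_budget(self):
        return self._remaining


ages = [23, 35, 41, 52, 29, 61, 38, 47]  # hypothetical records
counter = PrivateCounter(ages, total_epsilon=1.0, seed=0)
print(counter.count(lambda age: age > 40, epsilon=0.5))
print(counter.count(lambda age: age > 40, epsilon=0.5))
try:
    counter.count(lambda age: age > 40, epsilon=0.5)
except RuntimeError as err:
    print(err)
```

A smaller `epsilon` per query means more noise and stronger privacy; the budget check is what stops an analyst from averaging away the noise with many repeated queries.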

b. Confidential Machine Learning for data safeguard

Confidential Machine Learning is the process of maintaining confidentiality and security of the model training and deployment pipeline.

Azure ML provides tools such as virtual networks, private links for connecting to workspaces, and secure compute services to ensure that you stay in charge of your assets. Azure ML also allows building models without directly seeing the data.

3. Control ML Process

Reproducibility and accountability are important concerns when training ML models. A responsible ML environment needs tools that account for both by default in the training pipeline. Azure ML has the following tools for controlling the ML training pipeline.

a. Asset Tracking

Azure ML provides tools for tracking assets such as data, training history, and environments. This gives organizations more insight into the model training process and, as a result, more control over it.
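As a rough illustration of what asset tracking records, the sketch below captures a content hash of the training data, the hyperparameters, the metrics, and basic environment details for one run. It uses only the Python standard library; the `record_run` helper and all values are hypothetical and do not reflect Azure ML's own tracking API.

```python
import hashlib
import json
import platform
import sys
from datetime import datetime, timezone


def fingerprint(data: bytes) -> str:
    """Content hash that changes whenever the training data changes."""
    return hashlib.sha256(data).hexdigest()


def record_run(run_id: str, data: bytes, params: dict, metrics: dict) -> dict:
    """Hypothetical helper: collect what is needed to trace and later reproduce a training run."""
    return {
        "run_id": run_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "data_sha256": fingerprint(data),
        "params": params,
        "metrics": metrics,
        "environment": {
            "python": sys.version.split()[0],
            "platform": platform.platform(),
        },
    }


# Hypothetical data, hyperparameters, and metric values for illustration.
training_data = b"age,income,label\n23,40000,0\n52,85000,1\n"
run = record_run("run-001", training_data, {"learning_rate": 0.01, "epochs": 10}, {"accuracy": 0.91})
print(json.dumps(run, indent=2))
```

Hashing the data rather than storing a file path means a silently modified dataset shows up as a different fingerprint, which is what makes a later audit or rerun trustworthy.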

b. Increase accountability with model datasheets

Documentation is one of the most important and most often ignored aspects of machine learning. Azure ML provides a standardized way to document each stage of the ML lifecycle through datasheets. You can check out a working example of a datasheet here.
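A datasheet can also be kept as structured data so that empty sections are easy to spot. The sketch below is a hypothetical, minimal schema loosely modeled on the questions datasheets typically answer (motivation, composition, collection process, intended uses, limitations); the field names and values are assumptions, not the format Azure ML uses.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Datasheet:
    """Hypothetical minimal datasheet; fields are illustrative, not an official schema."""
    name: str
    motivation: str
    composition: str
    collection_process: str
    intended_uses: list = field(default_factory=list)
    known_limitations: list = field(default_factory=list)

    def missing_sections(self) -> list:
        """Names of sections that were left empty."""
        return [key for key, value in asdict(self).items() if not value]


sheet = Datasheet(
    name="loan-applications-v1",
    motivation="Train a model that ranks loan applications for manual review.",
    composition="Tabular records, one row per application.",
    collection_process="",
    intended_uses=["credit risk screening"],
)
print(sheet.missing_sections())
print(json.dumps(asdict(sheet), indent=2))
```

Because the datasheet is plain data, a pipeline could refuse to register a model while `missing_sections()` is non-empty, turning documentation from an afterthought into a checked step.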

Microsoft also shares guidelines for responsible AI. We hope more researchers and developers adopt these tools to build and use ML in a more reliable and trustworthy manner.

References and Further Reading

- https://www.microsoft.com/en-us/research/blog/research-collection-responsible-ai/

- https://www.microsoft.com/en-us/ai/responsible-ai

- https://azure.microsoft.com/en-us/services/machine-learning/responsibleml/

- https://azure.microsoft.com/en-us/trial/get-started-machine-learning/

- https://docs.microsoft.com/en-us/azure/machine-learning/

- https://azure.microsoft.com/en-us/services/machine-learning/

5K+ Learners

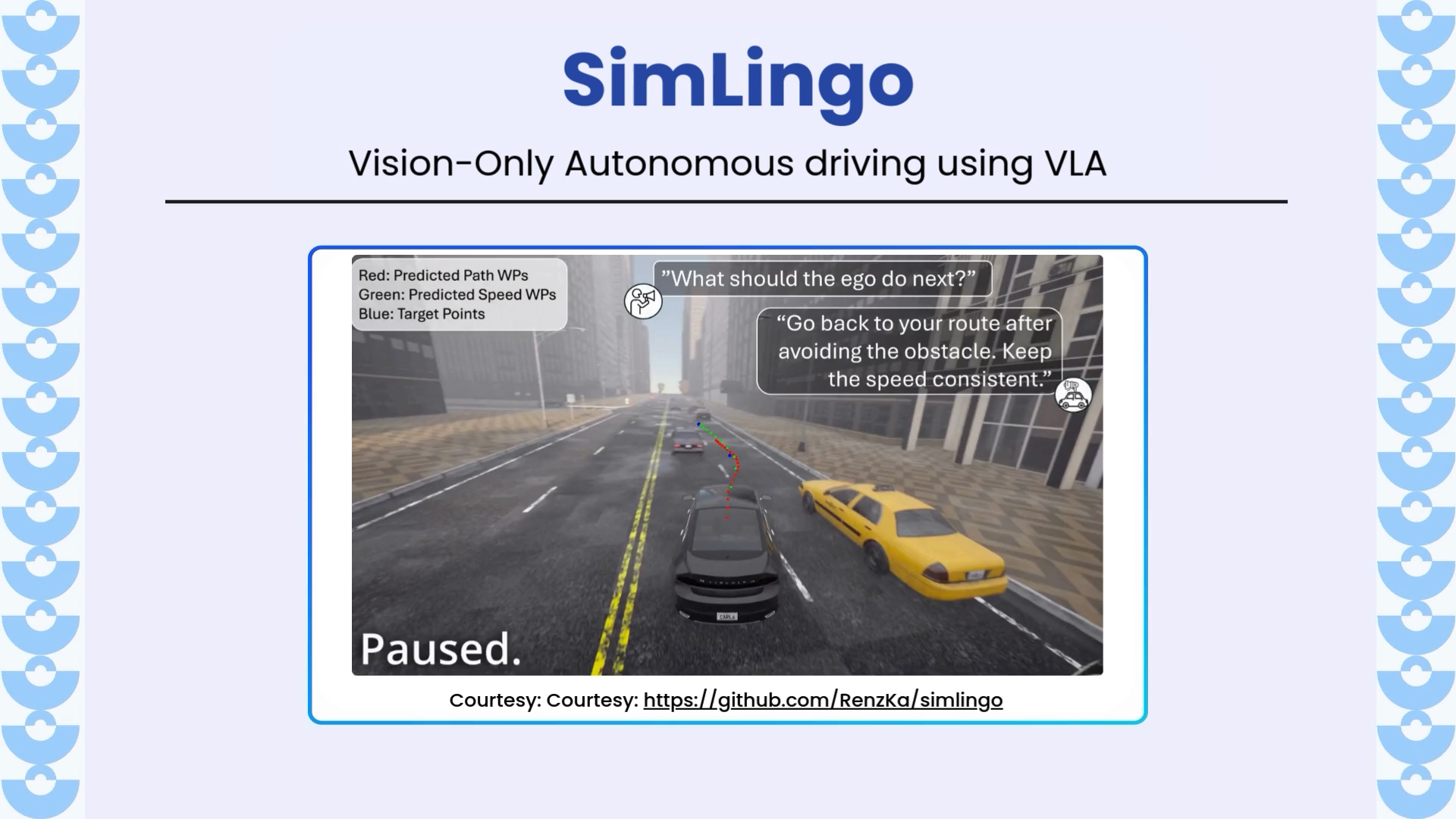

Join Free VLM Bootcamp3 Hours of Learning