Imagine this! A video of a world leader giving a speech they never actually delivered, or a celebrity appearing to endorse a product they’ve never even heard of. These aren’t simple photo edits—deepfakes are hyper-realistic, AI-generated videos, images, or audio clips that manipulate reality in ways previously reserved for science fiction. For example, a recent deepfake of actor Tom Cruise on social media left viewers stunned as they watched him perform incredible magic tricks, all while appearing completely lifelike. What’s more unsettling is that the deepfake wasn’t even created by a production studio—it was crafted by an AI with nothing more than publicly available footage. This new frontier in digital creation is revolutionizing how we think about media, authenticity, and trust in the online world.

Courtesy: https://x.com/vfxchrisume

How Are Deepfakes Made?

At the heart of deepfake creation are powerful AI techniques, mainly involving neural networks like Autoencoders and Generative Adversarial Networks (GANs).

- Autoencoders work like a skilled artist and their apprentice. One part (the encoder) learns to capture the essential features of a face—like its structure and expression—into a compressed “sketch.” The other part (the decoder) learns to reconstruct the face from that sketch. For face-swapping, you train a shared encoder on two faces (say, yours and a celebrity’s) but give each face its own decoder. Then, you feed your facial sketch into the celebrity’s decoder, and voilà—the celebrity’s face takes on your expression.

- GANs involve a duel between two AIs: a “Generator” and a “Discriminator.” The Generator tries to create fake images that look real, while the Discriminator tries to tell the fakes from genuine images. They constantly compete, pushing each other to get better until the Generator can create fakes that fool the Discriminator—and often, us.

Why Do Deepfakes Matter?

This technology is a double-edged sword. It has exciting uses in entertainment (like de-aging actors ), education (bringing history to life ), accessibility (realistic dubbing ), and even healthcare (training simulations ).

However, the potential for misuse is vast. Deepfakes can fuel misinformation campaigns, create non-consensual pornography, enable sophisticated financial fraud, and fundamentally erode trust in what we see and hear online.

Computer Vision Techniques for Detection

Detecting deepfakes is fundamentally a computer vision challenge. We use algorithms to analyze the pixels, patterns, and movements within media, looking for signs of artificial manipulation. Key computer vision principles applied here include:

- Feature Extraction: This is where the computer vision system identifies and pulls out meaningful characteristics (features) from the visual data. Instead of just seeing pixels, it looks for things like edges (boundaries of objects) , corners , textures (surface patterns) , colors , and shapes. Extracting these features helps simplify the complex visual information.

- Pattern Recognition: Once features are extracted, computer vision algorithms look for specific patterns. In deepfake detection, these algorithms are trained to recognize patterns known to be associated with fake content, like the specific artifacts left by a certain GAN or unnatural facial movements.

- Anomaly Detection: This technique focuses on spotting things that deviate from the norm. Computer vision systems learn what “normal” looks like from vast amounts of real video and image data. They then flag visual elements or movements in a suspected deepfake that seem unusual or inconsistent with that learned reality.

Applying Computer Vision: Spotting the Clues

Computer vision systems are trained to hunt for specific telltale signs that often appear in deepfakes:

- Visual Artifacts: AI generation isn’t always perfect. Computer vision algorithms can detect subtle visual glitches that humans might miss, such as:

- Inconsistent lighting, shadows, or reflections (especially in eyes/glasses).

- Unnatural blurring or sharpness along the edges of a swapped face.

- Skin texture that looks too smooth or has inconsistent details.

- Slight color mismatches between the face and body.

- Unnatural Biological Signals: Mimicking human biology perfectly is hard for AI. Computer vision techniques analyze movement and timing to find inconsistencies, like:

- Odd eye blinking patterns (too frequent, too rare, or absent).

- Head movements that don’t quite sync with facial expressions.

- Lip movements that don’t perfectly match the audio track (poor lip-sync).

- Incorrect number of fingers or unnatural finger movements (like distorted or fused fingers).

Deep Learning: The AI Detectives Using Computer Vision

The most powerful deepfake detectors today use advanced computer vision models, primarily deep learning architectures:

- Convolutional Neural Networks (CNNs): These are the workhorses of image analysis. CNNs excel at automatically learning spatial features directly from pixels. They use layers of filters to detect edges, textures, shapes, and complex patterns indicative of fakes. Think of them as learning the visual “grammar” of real vs. fake images.

- Recurrent Neural Networks (RNNs): Often used alongside CNNs, RNNs analyze sequences. In computer vision, they process the sequence of visual features extracted by CNNs from video frames over time, spotting temporal inconsistencies like flickering or unnatural motion.

- Transformers: Increasingly popular in computer vision, Transformers use “attention” mechanisms to weigh the importance of different parts of an image or video sequence, effectively capturing complex spatial and temporal relationships that might signal a fake.

These deep learning models are trained on huge datasets (like FaceForensics++ , Celeb-DF , and DFDC ) containing millions of real and fake examples, allowing their computer vision capabilities to become highly refined.

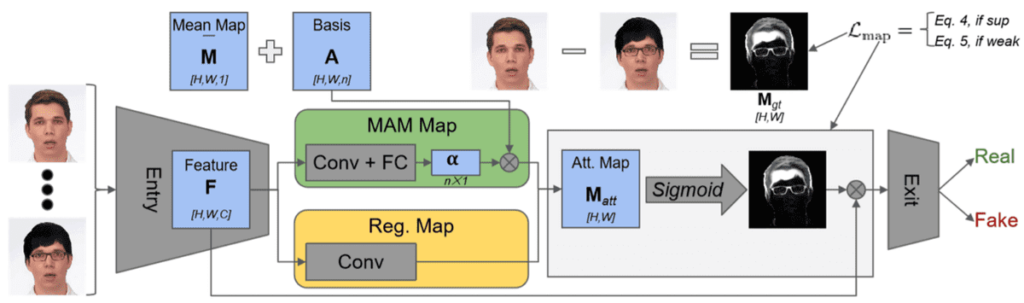

Image Source: https://theaisummer.com/deepfakes/

Why Detection Remains a Computer Vision Challenge

Despite these powerful tools, detection isn’t foolproof. Key computer vision-related challenges include:

- The Arms Race: Deepfake creation methods constantly improve, eliminating the visual artifacts that current detectors look for.

- Generalization Gap: A detector trained to spot visual patterns from one AI generator might fail on fakes from a new, unseen generator. Recent tests show performance drops significantly on new, “in-the-wild” fakes compared to older datasets.

- Compression: Videos online are compressed, which degrades image quality and can erase the subtle visual clues computer vision systems need.

The Future: Advancing Computer Vision for Detection

Research continues to push the boundaries:

- Multimodal Detection: Combining computer vision analysis with audio analysis to find inconsistencies between what we see and hear.

- Real-Time Systems: Developing faster computer vision algorithms for live detection.

- Provenance: Using digital watermarks or secure metadata (like C2PA’s Content Credentials) to track media origin, complementing visual analysis.

- Explainable AI (XAI): Creating computer vision systems that can explain why they flagged something, showing the visual evidence.

Conclusion: Seeing Through the Deception

Deepfakes challenge our trust in digital media. While the technology to create them advances rapidly, so does the computer vision science used to detect them. By training algorithms to analyze visual data for artifacts, unnatural movements, and statistical anomalies, we can build tools to identify manipulations. However, technology is only part of the solution. A combination of better computer vision detectors, proactive measures like content provenance, public awareness, and critical thinking is needed to navigate the complex landscape of synthetic media and safeguard digital authenticity.

Ready to put theory into practice?

Sign up for our free PyTorch Bootcamp today and start building your own deepfake detection models from scratch—no payment required! Enroll now and take the first step toward mastering deep learning with PyTorch.

Frequently Asked Questions

A deepfake is an AI-generated image, video, or audio clip that convincingly swaps, alters, or synthesizes a person’s likeness or voice. By leveraging neural networks—especially Autoencoders and GANs—deepfakes can produce forgeries that are often indistinguishable from genuine content.

Computer vision-based detectors first preprocess frames (e.g., with Gabor filters) and then extract hand-crafted features (like edge density, color histograms, SSIM) via fuzzy clustering. These features feed into a Deep Belief Network (DBN) enhanced with pairwise (contrastive) learning, enabling the model to learn subtle inconsistencies that reveal forgeries.

Autoencoder face-swap detection: Learns to encode facial features into a “latent sketch” and reconstruct real vs. swapped faces.

GAN-based authenticity checks: A Generator creates fakes while a Discriminator learns to distinguish them—training both in tandem yields robust detection.

Feature-based analysis: Statistical and structural cues (MSE, PSNR, DCT coefficients, etc.) highlight unnatural artifacts in synthetic media.

OpenCV for image/video processing

PyTorch and TensorFlow for building and training detection networks

scikit-image and NumPy for feature extraction

Streamlit or Flask for deploying real-time demos

Absolutely. By optimizing inference on GPUs and using efficient pre- and post-processing pipelines (e.g., batch feature extraction, lightweight DBN architectures), you can run detectors at 30+ FPS on modern hardware.

5K+ Learners

Join Free VLM Bootcamp3 Hours of Learning