Authors: WU Jia, GAO Jinwei

An NPU, short for neural processing unit, is a specialized processor designed to accelerate common machine learning tasks, typically neural network applications. Besides speeding up inference, an NPU frees the CPU for other work and is very power efficient.

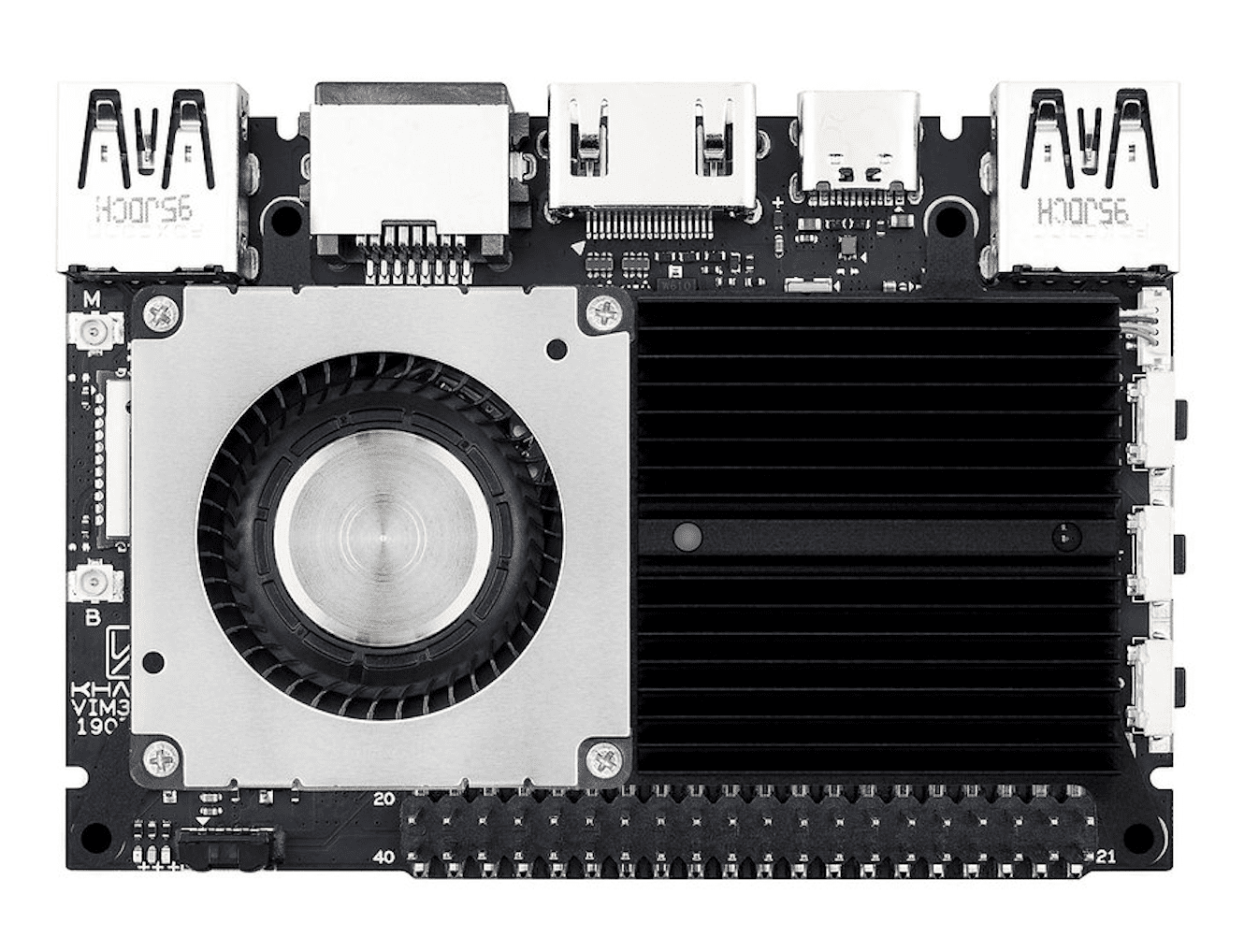

OpenCV’s Deep Neural Network (DNN) module is a light and efficient deep learning inference engine. It’s highly optimized and can deploy deep learning models on a wide range of hardware. Since the 4.6.0 release last June, OpenCV has supported running models on NPUs! Khadas VIM3 is the first dev board officially supported by OpenCV for running quantized deep learning models on an NPU via the DNN module. Not only that, OpenCV DNN also works with other dev boards that use the A311D System-on-Chip (SoC), the same chip as on the VIM3.

Let’s first take a look at how much the VIM3 NPU accelerates inference. (The tests below use the benchmark in the OpenCV Model Zoo with OpenCV 4.6.0-pre.)

| DL Model | Khadas VIM3 CPU (ms) | Khadas VIM3 NPU (ms) |
|---|---|---|
| YuNet | 5.42 | 4.04 |
| SFace | 82.22 | 46.25 |
| CRNN-EN | 181.89 | 125.30 |
| CRNN-CN | 238.95 | 166.79 |
| PP-ResNet | 543.69 | 75.45 |
| PP-HumanSeg | 82.85 | 31.36 |
| YoutuReID | 486.33 | 44.61 |
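To make the comparison easier to read, the latencies in the VIM3 table can be turned into speedup factors (CPU time divided by NPU time). The following is just a small sketch of that arithmetic; the dictionary and the `speedup` helper are illustrative names, not part of the benchmark itself.

```python
# Latencies in milliseconds, copied from the Khadas VIM3 table above:
# model name -> (CPU time, NPU time)
vim3_ms = {
    "YuNet": (5.42, 4.04),
    "SFace": (82.22, 46.25),
    "CRNN-EN": (181.89, 125.30),
    "CRNN-CN": (238.95, 166.79),
    "PP-ResNet": (543.69, 75.45),
    "PP-HumanSeg": (82.85, 31.36),
    "YoutuReID": (486.33, 44.61),
}


def speedup(cpu_ms, npu_ms):
    """Return how many times faster the NPU run is than the CPU run."""
    return cpu_ms / npu_ms


speedups = {name: speedup(cpu, npu) for name, (cpu, npu) in vim3_ms.items()}

# Print the models from largest to smallest speedup.
for name, factor in sorted(speedups.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:12s} {factor:5.1f}x")
```

With these numbers, YoutuReID benefits the most (about 10.9x) and PP-ResNet follows at about 7.2x, while the already fast YuNet gains the least (about 1.3x).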

As mentioned above, models can run on the NPU of other dev boards as well, as long as they have the A311D SoC. Here are the results on another such board, the EAIS-750E.

| DL Model | EAIS-750E CPU (ms) | EAIS-750E NPU (ms) |
|---|---|---|
| YuNet | 5.99 | 4.20 |
| SFace | 85.70 | 52.00 |
| CRNN-EN | 237.40 | 158.00 |
| CRNN-CN | 310.50 | 210.00 |
| PP-ResNet | 541.00 | 67.70 |
| PP-HumanSeg | 92.20 | 36.20 |
| YoutuReID | 502.70 | 45.50 |

We can see from the tables above that the NPU can speed up inference by more than 10 times (YoutuReID on both boards). Exciting news! What’s more, it takes little effort to enable the NPU via OpenCV DNN. The only thing required is to set the backend and the target device.

```python
import cv2 as cv

# some preprocessing
# ...

# load model
net = cv.dnn.readNet(PATH_TO_MODEL)

# settings
net.setPreferableBackend(cv.dnn.DNN_BACKEND_TIMVX)  # set backend
net.setPreferableTarget(cv.dnn.DNN_TARGET_NPU)      # set target device

# infer
net.setInput(blob)  # blob: the preprocessed input, e.g. from cv.dnn.blobFromImage
output = net.forward()

# the postprocessing
# ...
```
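If the same script also has to run on machines without an NPU, one option is to pick the backend and target at run time. The sketch below is only an illustration: `choose_backend_and_target` is a hypothetical helper, and it assumes that `cv.dnn.getAvailableTargets` lists `DNN_TARGET_NPU` for the TIM-VX backend when OpenCV was built with NPU support. The `dnn` module is passed in as an argument so the helper can be tried without OpenCV installed.

```python
from types import SimpleNamespace


def choose_backend_and_target(dnn):
    """Return (backend, target), preferring TIM-VX/NPU and falling back to CPU.

    `dnn` is expected to be the `cv2.dnn` module (hypothetical helper,
    not part of OpenCV).
    """
    timvx = getattr(dnn, "DNN_BACKEND_TIMVX", None)  # missing before 4.6.0
    npu = getattr(dnn, "DNN_TARGET_NPU", None)
    if timvx is not None and npu is not None:
        # Assumption: the NPU target is only reported when it is usable.
        if npu in list(dnn.getAvailableTargets(timvx)):
            return timvx, npu
    return dnn.DNN_BACKEND_OPENCV, dnn.DNN_TARGET_CPU


# Stand-in for an OpenCV build without NPU support (placeholder values).
stub_dnn = SimpleNamespace(DNN_BACKEND_OPENCV=3, DNN_TARGET_CPU=0)
print(choose_backend_and_target(stub_dnn))

# On a real board:
#   import cv2 as cv
#   backend, target = choose_backend_and_target(cv.dnn)
#   net.setPreferableBackend(backend)
#   net.setPreferableTarget(target)
```

Falling back to the default OpenCV backend on the CPU keeps the rest of the pipeline unchanged; only the two `setPreferable*` calls differ between devices.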

A little more work is needed to enable the OpenCV DNN NPU backend, though. The instructions are available on GitHub. If you are using a dev board other than the VIM3 but with the same A311D chip, you can refer to this blog post for more tips.

Khadas is a Shenzhen-based single-board computer (SBC) manufacturer serving the open source community and the streaming media player industry. It is one of the OpenCV Development Partners. The VIM3 SBC is a powerful dev board built around the 12 nm Amlogic A311D SoC, which combines four Cortex-A73 performance cores (2.2 GHz) and two Cortex-A53 efficiency cores (1.8 GHz) in a hexa-core configuration, plus an onboard NPU rated at 5.0 trillion operations per second (TOPS) for neural network applications.

To end this post, let’s do something simple but cool with the VIM3: controlling a robot arm using ‘vision’. The arm tracks the face in front of its camera by running a deep learning face detection model on the NPU in real time. See the GIF below:
